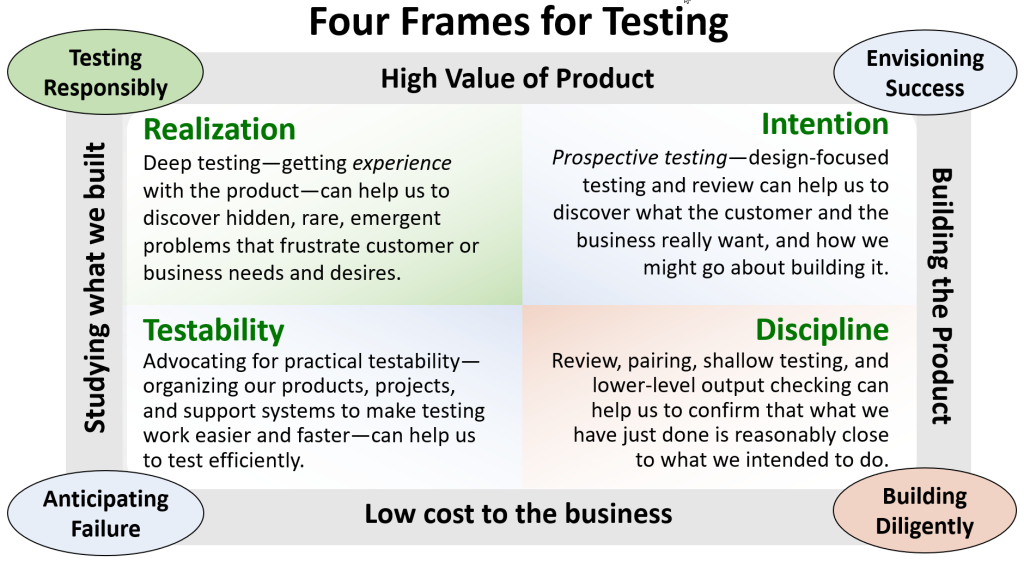

In the last post, I introduced four frames for testing, each of which might present a set of ideas for covering a product with testing at various points through its development. On the way to a complete package, system, or service, people produce many different ideas and artifacts, each of which can be tested. Moreover, people with different interests, temperaments, and roles in the development process perceive testing in different ways. Although the frames are arranged in a table that might seem to run clockwise, the frames are not necessarily sequential — about which more later.

The frames that I introduced are labelled Intention, Discipline, Testability, and Realization.

Let’s unpack these one by one.

Intention

Design is about developing ideas and intentions. As we develop them, we can test them. Intention-framed testing is focused on challenging our ideas about what the product could and should be, so that we can identify and address design problems before we begin to build the product.

It can be hard for us to get clear on what we want before we’ve built something. Different people have different purposes for a product, and find different aspects of a product valuable.

Most software is produced for more customers than we can ever hope to meet, which requires us to model the customer space. To do that well, we must engage with and consider a requisite variety of customers and customer roles to gauge the scope of their needs and desires.

Of course, customers aren’t the only people worth considering in our designs. Software must be tested, supported, maintained, documented, marketed, sold, and managed. Every person involved with any of those things may have specific needs and desires for the product, and some of those may conflict with each other.

Coming to terms with conflicting needs and desires can require tradeoffs in design and implementation. The Intention frame is a good place to start shining light on those things and sorting them out. As part of the design process, it might also be worthwhile to develop ideas on what it might take to declare that the product is complete.

So, as we’re optimistically envisioning success and setting out to build a product, we’re developing the design. As we do so, we perform testing and review focused on our notions of the requirements, acceptance criteria, designs, specifications, mockups, wireframes, plans, and the other documents and artifacts that represent our ideas. That is: testing relevant to developing and refining our intentions, so that we can go about building the product efficiently.

Testing work in this frame might include discussions, thought experiments, and analysis of ideas, prototypes, or drafts. Behaviour Driven Development (BDD) and Acceptance Test Driven Development (ATDD), the Three Amigos approach (first described by my friend George Dinwiddie), and “knowledge crunching” (as described in Eric Evans’ Domain-Driven Design) include testers and testing as intrinsic to developing and refining intention. When they’re performed at their best, these activities include prospective testing — at least one person deliberately taking the role of critic, asking questions like:

- What are we building?

- Who are we building it for?

- What do they want from it?

- What could go wrong?

- How would we know?

- What are we missing?

- And, for each question above: is that all?

“What could go wrong?” and “How would we know?”, in particular, can help to prompt the team to consider intrinsic and project-related testability (about which more later) in the design.

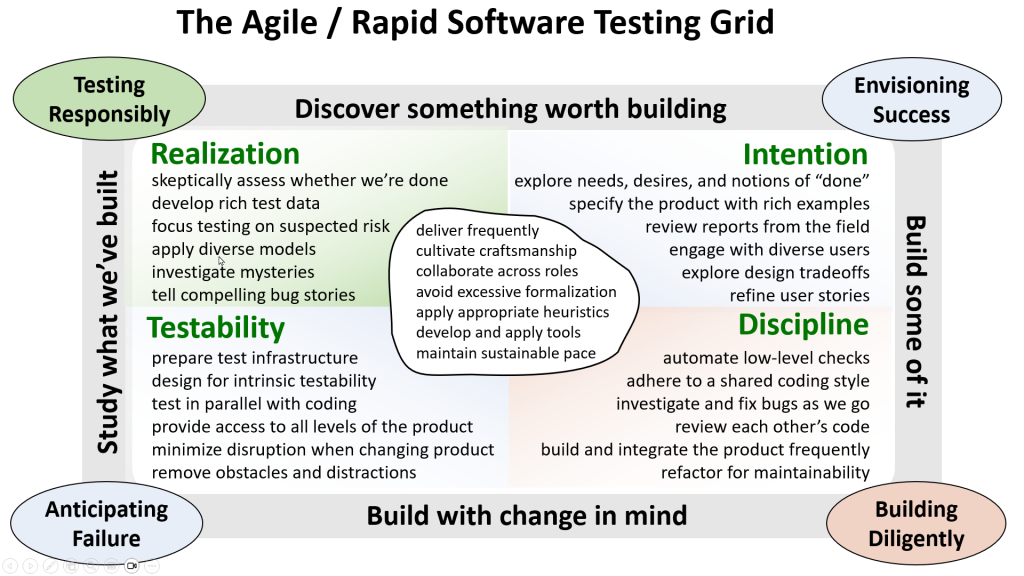

In the Intention frame, among other things, we

- explore needs, desires, and notions of “done”

- specify the product with rich examples

- review reports from the field

- engage with diverse users

- explore design tradeoffs

- refine user stories

Notice that this kind of work is fundamentally exploratory! It might be supplemented by checklists, but there’s no script for it.

Everyone on the team may participate in Intention-framed testing. Many quality coaches, so it seems, are particularly enthusiastic about developing and refining intentions to help us build the right thing, to avoid building the wrong thing, and to help prevent bugs from getting into the product. It’s a really good idea to specify our intentions clearly, completely, and accurately. As Cem Kaner said (in 1993!), a program that is built perfectly to a lousy spec is a lousy program, not a perfect program. The motivation for Intention-framed testing is to save time and effort by detecting problems as early as possible: “shifting testing left” along the development timeline.

Discipline

Having developed the design, we set about building the product. As we do so, we want to keep the value of the work high and the cost of change low by building with diligence and discipline.

Even after our intentions are clear, there’s still a risk that we might be committing errors. Human beings, whether as individuals or in groups, don’t always do exactly what we intended to do. Even the most capable developers are vulnerable to occasional mistakes, misunderstandings, and oversights when they’re writing new code. Change poses the risk of missteps, too. Those problems tend to cost time and money.

We can’t avoid every problem, but we can avoid a lot of the more obvious ones by building diligently: carefully, cleanly, simply. We can be alerted to coding errors quickly if we review and test as we go. So, as disciplined, diligent developers are writing and refining the code, they perform testing focused on very fast and useful feedback, to address avoidable and relatively easy-to-detect errors.

Some of that testing work might be informal: write a bit of code, run it, and see what happens. Developers might perform experiments with units and components, often using mocks or simulators or “test doubles”, rather than interacting with the entire product.
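As a rough illustration of experimenting with a unit through a test double, here is a minimal sketch using Python's standard `unittest.mock`. The `notify_overdue` function, the account fields, and the mailer's `send` method are all hypothetical names invented for this example; they stand in for whatever unit and collaborator a developer might really be working with.

```python
from unittest.mock import Mock


def notify_overdue(accounts, mailer):
    """Send one reminder per overdue account; return how many were sent."""
    sent = 0
    for account in accounts:
        if account["days_overdue"] > 0:
            mailer.send(account["email"], "Your payment is overdue")
            sent += 1
    return sent


# The Mock stands in for a real mail service (hypothetical here),
# so the unit can be exercised without the rest of the product.
fake_mailer = Mock()
accounts = [
    {"email": "a@example.com", "days_overdue": 3},
    {"email": "b@example.com", "days_overdue": 0},
]

count = notify_overdue(accounts, fake_mailer)

# The double records how it was used, which we can then inspect.
fake_mailer.send.assert_called_once_with(
    "a@example.com", "Your payment is overdue"
)
```

The double tells us only that the unit talked to its collaborator as expected. Whether a real mail service would behave the same way is a question for testing with the whole product.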

Some of that development and testing work might be more formalized. Test-driven development (TDD) suggests that before writing new code, developers write unit checks. At first, each check is intended to return failure, as it should when the code that would make the check return success hasn’t been written yet. When that code is written, the developer runs the new check along with the checks that have been developed and run successfully before. When the code doesn’t pass one of the checks, the developer gets almost instant feedback that something is wrong, and can fix the error efficiently.
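The cycle described above might look something like this in miniature. This is only a sketch: `slugify` and the small `run` helper are hypothetical, and in practice a developer would more likely use a framework such as `unittest` or `pytest` than hand-rolled functions.

```python
def check_slugify():
    """Unit check, written before slugify() exists."""
    assert slugify("Hello World") == "hello-world"
    assert slugify("  Four   Frames ") == "four-frames"


def run(check):
    """Run one check and report 'pass' or 'fail' instead of raising."""
    try:
        check()
        return "pass"
    except (AssertionError, NameError):
        return "fail"


# Step 1: the code has not been written yet, so the check reports failure.
first_run = run(check_slugify)


# Step 2: write just enough code to satisfy the check.
def slugify(title):
    return "-".join(title.lower().split())


# Step 3: run the check again (along with any earlier checks).
second_run = run(check_slugify)

print(first_run, second_run)
```

Seeing the check fail first matters: it is some evidence that the check can detect the absence of the behaviour, rather than reporting success no matter what.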

Some of the development and testing work in the Discipline frame might be collaborative: formal code review sessions, pairing (two heads are better than one), or “community” or “ensemble” programming (much better names than “mob programming”).

Automated output checking, directed mostly at units or low-level integrations, and also directed at the risk of regression, is a big theme in the Discipline frame. Discipline-framed testing might involve contract testing or its more exploratory cousin, property-based testing. Output checking can be automated. The testing work that surrounds it — risk analysis and design of the checks before they’re run, and interpretation and evaluation of the outcomes afterwards — cannot be automated. Discipline-framed testing requires developers with skill and social competence.
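To make the idea of a property-based check a little more concrete, here is a hand-rolled sketch that uses only Python's standard `random` module. It is a simplified stand-in for what a dedicated library (Hypothesis, for example) does far more thoroughly. The run-length functions and the round-trip property are assumptions chosen purely for illustration.

```python
import random


def run_length_encode(text):
    """Collapse runs of repeated characters into (char, count) pairs."""
    pairs = []
    for ch in text:
        if pairs and pairs[-1][0] == ch:
            pairs[-1] = (ch, pairs[-1][1] + 1)
        else:
            pairs.append((ch, 1))
    return pairs


def run_length_decode(pairs):
    """Expand (char, count) pairs back into a string."""
    return "".join(ch * count for ch, count in pairs)


def check_round_trip(trials=500, seed=0):
    """Property: decoding an encoded string gives back the original."""
    rng = random.Random(seed)  # fixed seed so a failure can be reproduced
    for _ in range(trials):
        length = rng.randint(0, 20)
        text = "".join(rng.choice("ab ") for _ in range(length))
        if run_length_decode(run_length_encode(text)) != text:
            return text  # a counterexample for a human to investigate
    return None


counterexample = check_round_trip()
```

Note where the automation stops. The machine generates inputs and compares outputs; a person chose the property, decided which inputs were worth generating, and has to decide what a counterexample (or the lack of one) means.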

Testing in the Discipline frame tends not to be particularly deep or challenging, nor focused on the whole system. That’s by design, and it’s a good thing. Testing in the Discipline frame tends to be quick, not intensive, and therefore not too disruptive to the developers’ forward progress. The goal isn’t to find every problem, but to affirm that we’re building something reasonably close to what we intended to build—and to alert us immediately when we’re not.

In the Discipline frame, among other things, we

- automate low-level checks

- adhere to a shared coding style

- investigate and fix bugs as we go

- review each other’s code

- build and integrate the product frequently

- refactor for maintainability

Testing in the Discipline frame — and talk about it — tends to be the focus of the programmers and builders of the product; insiders working very close to the coal face. To people in the eXtreme Programming (XP) movement back in the day, to TDD advocates, and to many members of the Agile community, this is what testing is all about: getting immediate feedback on code-level problems so that they can be addressed without delay and without being buried. All this is another aspect of “shifting testing left”.

Testability

The Testability frame is focused on getting things ready for efficient, deep testing; advocating and acting to increase practical testability.

A product tends to be more testable — and problems in it easier to find — when it includes features that allow for visibility (logging, monitoring, querying the product and its data) and controllability (interfaces for driving or reconfiguring the product). In Rapid Software Testing, these are aspects of intrinsic testability. Other aspects include simplicity, modularity, and code hygiene. Reporting the bugs in a buggy product takes time away from testing.
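Here is one way that visibility and controllability might show up in code. This is a sketch under assumptions, not a prescription: `SessionStore`, `FakeClock`, and the injectable `clock` parameter are hypothetical names made up for this example.

```python
import logging
from datetime import datetime, timedelta


class SessionStore:
    """Hypothetical component built with intrinsic testability in mind."""

    def __init__(self, ttl_seconds, clock=datetime.now):
        self.ttl = timedelta(seconds=ttl_seconds)
        self.clock = clock  # controllability: callers may supply the time
        self.log = logging.getLogger("session_store")  # visibility: logging
        self._sessions = {}

    def start(self, user):
        self._sessions[user] = self.clock()
        self.log.info("session started for %s", user)

    def is_active(self, user):
        started = self._sessions.get(user)
        active = started is not None and self.clock() - started < self.ttl
        self.log.info("session check for %s: active=%s", user, active)
        return active

    def snapshot(self):
        """Visibility: let a tester query a copy of the internal state."""
        return dict(self._sessions)


class FakeClock:
    """A controllable clock, so nobody has to wait for real time to pass."""

    def __init__(self, now):
        self.now = now

    def __call__(self):
        return self.now

    def advance(self, seconds):
        self.now += timedelta(seconds=seconds)


clock = FakeClock(datetime(2024, 1, 1, 12, 0, 0))
store = SessionStore(ttl_seconds=60, clock=clock)
store.start("ana")
active_before = store.is_active("ana")  # within the 60-second lifetime
clock.advance(61)
active_after = store.is_active("ana")   # lifetime has now elapsed
```

Without the injectable clock, exploring expiry behaviour would mean waiting, or patching the system time. With it, a tester can move time around at will and watch what happens through the log and the snapshot.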

Practical testability is more than intrinsic testability, though. There are other dimensions of testability that make a difference and that influence each other.

When we already know a lot about a product, the technologies comprising it, and the domain it’s intended to support, there tends to be less of a gap between what we know and what we need to know for management to make informed decisions about it. Narrowing that gap is about increasing epistemic testability.

Testing is easier when the context supports it: when the entire team is in close collaboration, when project information is carefully curated and readily available, and when there’s easy access to equipment and tools to support development and testing work. This is project-related testability.

Continuous integration and continuous deployment present another aspect of project-related testability. It’s much easier to test a product when we can build it quickly and reliably. That prompts attention to build and deployment pipelines, including automated checks to detect errors and omissions algorithmically.

A product is also easier to test when the project team has access to the various clients of testing, and knowledge about what they want. That includes not only the product’s customers, but also anyone who might be interested in or affected by the product, including developers, designers, managers, support folk, operations people, and so on. Often these people have information, insight, or expertise that can help us to test more effectively and efficiently; this is the basis of value-related testability.

Testing is faster and easier when people have the required knowledge and skills to anticipate, seek, recognize, and report on problems and risks. This includes acquiring training, tools, resources, and people to support the testing work. We call this subjective testability.

Notice that the Testability frame doesn’t necessarily follow the Intention or Discipline frame, but is intertwingled with them, influencing people’s choices and actions associated with both. The key purpose of the Testability frame is to direct attention to things that allow us to be prepared for any deep testing that might be necessary when we finally have the built product.

So: in the Testability frame, among other things, we

- prepare test infrastructure

- design for intrinsic testability

- test in parallel with coding

- provide access to all levels of the product

- minimize disruption when changing the product

- remove obstacles and distractions to testing

DevOps enthusiasts and people involved with setting up Continuous Integration and Continuous Delivery (CI/CD) have special interest in the kinds of testing and checking prompted by Testability framing — yet another instance of “shifting testing left”.

Realization

Until we have the built product, we’ve been working with prototypes of the built product, or components of the built product, or our imagination of the built product. The Realization frame is where things get real.

“Realization” is a pun. At last, we’ve realized the goal of building the product, and now there is at last a real product to test. As we test it, we might also realize that there are problems that have escaped everyone’s attention so far. Despite everyone’s best efforts all the way along, some bugs can be deeply hidden, subtle, infrequent, intermittent, condition-specific. Testing in the Realization frame is about finding those.

Past failure to recognize problems is not necessarily due to anyone’s lack of diligence. In any complex system, problems aren’t always immediately obvious. Software often depends on exactly the right thing happening at exactly the right time. Products work fine in some contexts and don’t work in others. A feature may fail when it encounters pathological data. Little variations in hardware platforms and operating systems can turn out to make big and damaging differences.

Above all, problems can be emergent. Sometimes problems in a product aren’t inherent in the bits and pieces, but in the interactions between them. Problems can emerge from interactions between well-tested components, or between a well-tested component and specific data. As messy as the test lab might be, the world outside is far messier. Problems can emerge when real people use the product in ways that we didn’t anticipate.

All this makes certain problems elusive. Even when we’ve been highly diligent and disciplined, problems may have escaped our notice and our attempts to prevent them. That’s at least in part because the builders of the product have the builder’s mindset; the insider’s perspective rather than the outsider’s perspective. It’s also because the quick and simple nature of Discipline-framed testing by design tends to avoid rich, complex, realistic scenarios. For that reason, once we’ve got the real product, and when there’s risk to the business, it’s a good idea to look hard for those deeper problems before inflicting them on customers.

Testing in the Realization frame is about experiencing, exploring, and experimenting with the built product in its entirety, to perform rich, complex, challenging tests, intended to maximize the chance of finding every important and elusive bug that might have been missed along the way. This includes deep probes of the internals of the system; exposing it to realistic scenarios; getting extensive experience with it; challenging it and our beliefs about it. Our goal here is to recognize problems that would frustrate the needs and desires of people using the product, or that could be costly to people who might be affected by the product.

People sometimes think of testing in the Realization frame as “manual testing that happens after all the automated testing has been done”. This is an extremely unhelpful way of thinking about testing work. Testing is neither manual nor automated, and the word “manual” misleadingly lumps together a bunch of ideas that muddle things up. Let’s do some unpacking of that.

Testing in the Realization frame may be experiential, performed in such a way that the tester’s encounter with the product is intended to be practically the same as that of some contemplated user. Testing in the Realization frame may also be instrumented, such that some intervening medium — a tool — alters the naturalistic encounter with the product to enable us to find problems that are hard to see in normal usage.

Testing in the Realization frame may include activity that is interactive, such that the tester is working with and observing the product directly, in real time. Other activities might be unattended for a time, as an experiment runs or data is collected under the control of some automated process or tool, to be analyzed afterwards.

Testing in the Realization frame tends to be exploratory, wherein the tester is applying agency, making choices about the testing work in real time, and responding to what he or she is learning, working without constraints. Testing in the Realization frame might sometimes be scripted and highly formalized, especially when certain kinds of accountability and integrity of some aspects of the test process might be important to some client.

In the Realization frame, testing may be from time to time experiential or instrumented, interactive or unattended, exploratory or scripted. The key is that in the Realization frame, we’re dealing with a product that may be ready for deployment but that’s definitely ready for testing as a real thing — even if the product is going to undergo cycles of further development.

In the Realization frame, among other things, we

- skeptically assess whether we’re done

- develop rich test data

- focus testing on suspected risk

- apply diverse models

- investigate mysteries

- tell compelling bug stories

Realization is testing towards the right end of the timeline. We would emphasize that “shifting left” is a fine idea — not least because it tends to support and accelerate testing in the Realization frame. However, “shift left” can’t replace Realization testing, because until Realization, notions about the success of “shift left” and the absence of problems in the product are only hopes and assumptions, not facts.

For some purposes, testing that has preceded Realization may be enough such that deeper testing for some risks isn’t necessary. Overall, but especially in the Realization frame, the goal is to make testing responsibly deep (thorough enough to find elusive and important problems) but not obsessively deep. One could say that the object of the game, for Rapid Software Testing at least, is to make shallow testing deeper and deep testing cheaper.

Above, I’ve noted advocates and enthusiasts who tend to emphasize focus and attention on each frame: quality coaches for the Intention frame; developers for the Discipline frame; DevOps people for the Testability frame (and of course, there are people who attend to more than one). When I identify these kinds of focus, it’s not meant to be disparaging or dismissive of them, nor of the people who apply them. On the contrary; attention to each one of those frames is a good thing. The point is that sometimes those perspectives can displace attention on the Realization frame.

The Realization frame generally gets the most attention from people (like me) who are focused on trouble, and on deep testing to find it. We see testing as experimentation, exploration, investigation, discovery, and the experience of interacting with a product in both naturalistic and instrumented ways. In the workplace, such people tend to be in a dedicated testing role. They’re people who are interested in getting their hands, eyes, and minds on a product that is in some sense complete; people who are fine with the idea of helping people to prevent bugs, but who are focused on finding bugs that have escaped everyone’s notice so far.

Who does testing in the Realization frame? Anyone can, as either a responsible tester or as a supporting tester. In Rapid Software Testing, we identify the role of the responsible tester: someone who “bears personal responsibility for testing a particular thing in a particular way for a particular project”. When there are dedicated testers on a team, they are most likely to be the primary people responsible for testing in the Realization frame. Without dedicated testers, there is a risk that testing in the Realization frame is done only as part-time work by supporting testers, or not done at all. (I’ll have more to say about who performs testing work in the different frames in subsequent installments of this series.)

Everything in this series has been building to this set of heuristics for testing in the intersection between Agile (or agile) software development and the Rapid Software Testing Namespace.

The four frames represent a lens through which we can examine and discuss testing work while reducing opportunities for the conversation to go pear-shaped. Many arguments seem to be about conclusions, when they’re really about premises. When we’re talking about testing and we seem to be in disagreement, it might be a good idea to ask questions about what kind of testing work we’re talking about: what part of the lens we’re looking through, or what frame the testing is in.

The lens isn’t static, though; it’s like a lens on a sophisticated camera; we can vary the zoom, the aperture, and the depth of field. We’ll talk about that in the next installment of this series.

I did not understand the first 2 parts very well I have to admit, but now I have read this one everything kinda falls into place perfectly.

So there might be something with the way you have gained the knowledge vs the best chronological order to explain the holistic view about it. I’m really really glad with this blog installment and looking forward to the next installment about the zoom lens now!

I agree fully to the middle section. Except maybe a bit with the middle on ‘avoid excessive formalization’. What I have seen is that people skip the Definition of Done for example. Which it’s effect can be really bad for the software testing process, especially the Functional Testing part and the Test Process aspects in general. Sometimes testers don’t have enough voice/power. Keeping the things formal (on previous agreed upon test processes/steps) can help in some cases. The excessive, okay… but my experience is that it is in far more times actually not formal enough about the test processes. So careful with that.

I’d rather say: ‘Keeping the correct balance in formalization.’

Thank you for the comment, Jan-Michael.

I appreciate the suggestion and the spirit in which it’s offered. That said, I would prefer to resist the temptation to frame things in terms of balance. Balance implies “some of this in proportion to some of that”, but to me, that assumes that a) one is in opposition to the other; and b) that some of both is necessary. I don’t believe that either (a) or (b) is necessarily true. I’d prefer to consider whether we need something at all.

Formalization provides a good example. Much of the time, you don’t want your testing to be too formalized, unless you want to waste time and miss bugs. Indeed, sometimes it’s a good idea to abandon formalization, or to delay it until you’ve done enough informal work to decide what, if anything, needs to be formalized. Why is this important? Because lots of bugs don’t obey formalisms.

Now, that said, I also appreciate your use of safety language: “Keeping the things formal can help in some cases.” That’s true — and saying it that way also points us away from excessive formalization. Excessiveness literally means “too much”, which practically by definition is undesirable.

Hey Michael

Just wanted to say how much I am appreciating this series, in particular the evolutionary exposition of the framing process. This is helping to build a really clear and understandable model, that is justified and backed up along the way.

I am currently onboarding a couple of new (to the team) testers into my team, and they are just now starting to realise that we operate a bit differently from what they were expecting. This is a really useful series that I will be encouraging them to read.

Thanks for writing it!