Vipul Kocher is a fellow whom I have known for a long time. I think we met in North America in the mid 2000s. I know I visited his company in Noida, New Delhi about 15 years ago, and spoke with his testers for an hour or so. On that occasion, I also visited his family and had a memorable home-cooked meal, followed by a mad dash in a sport utility vehicle through Delhi traffic to a conference at which I was presenting. I had dinner with Vipul last September at another conference in Sofia, Bulgaria. I’m starting with all this because I want to emphasize that Vipul and I have been on friendly terms for a long time; and I’m offering this (public) reply in good faith.

On September 30, 2023, Vipul posted this interaction with Bard on LinkedIn. I encourage you to read the post yourself. In my last post, I promised to provide an analysis of Vipul’s experiment and report on my process. So, here we go.

Charter

Having read the post, I evaluated my mission with respect to Vipul’s words. He said, “I am calling this post as an experiment. Maybe you all can contribute tests specific to IARM and we try to figure out if we can coax similar tests from the AI models and what does it take to get those answers out. We can also call out the test ideas provided by the models which were really good. You can also suggest what else should be done or what else should be differently done!”

Notice that Viipul’s mission statement here leans towards the Supporter mindset: finding ways in which the product can be made to work. It’s true that in some cases, testers are assigned to find ways in which a product can be made to work. Most of the time, though — at least in the Rapid Software Testing space — the big deal for the tester is finding problems that matter before it’s too late. That involves challenging the product and our beliefs about it.

Notice, though, that Vipul’s mission statements avoids saying anything that even hints at looking for problems. As a tester and as a Critic, I wanted to start from that perspective, taking the last part of the assignment as the most important: “what else should be differently done”. So my charter is “Examine Vipul’s account of applying Google’s Bard to testing ; identify problems; identify ways in which I (and other people taking a Rapid Software Testing perspective) might assess output from Bard or other Large Language Models (LLMs).”

TD; DR (Summary)

The first thing I’d recommend is more attention to the Critic mindset: what could go wrong here? We must confront the possibility of problems in the technology; in people’s application of it; in people’s approaches to testing it; and in people’s approaches to testing generally. That’s our unique role as testers on a project: focusing on the possibility of trouble, where everyone else, in the builder’s mindset, is optimistically envisioning success.

The second thing I’d recommend for an experiment of this nature is a much more clean and rigourous approach to collecting the data from the experiment and making it available for analysis.

The third thing I’d recommend — if we really want to assess the capabilities and potential value of LLMs — is that experiments of this nature be performed in collaboration with people with expertise in the domains of interest: testing, banking, and LLMs themselves.

Activities

I went through loops of activities throughout my analysis, with each activity triggering new questions that in turn spurred new activities.

- I used text processing tools (a text editor; a word cloud generator; a tiny and simple text parsing tool that I cobbled together in a few minutes) to help with my analysis. (Since the original version of this post, I’ve extended the capabilities of this tool. That was very serious fun, and the tool will be useful for future work — more useful still as further analysis prompts more ideas.)

- I extracted Bard’s replies from the rest of the text so that I could process them separately from Vipul’s narrative and from his prompts.

- I created a cleaned-up version of Vipul’s transcription, and posted it here on my site.

- I applied points in Rapid Software Testing’s Heuristic Test Strategy Model.

- I also referred to the list of problematic syndromes that James Bach and I have been developing in our ongoing work in analyzing LLMs.

- I also used Bard as its own oracle, performing experiments with it, often using the same prompts that Vipul used.

- I wrote text describing my work and my findings.

- I consulted with James on my findings, and obtained and applied the results of his review.

- I periodically re-evaluated the mission and threats to validity.

This last point is a good place to start. Let’s look at some of the serious problems with the validity of Vipul’s experiment.

Threats to Validity

Alas, Vipul seems to have not provided a complete and accurate account of his conversation with Bard. There are serious problems in his transcription that could lead to misinterpretation — and which helped to mislead me for a considerable amount of time.

At the top of his post, Vipul declared several formatting conventions that he intended to use. A close reading of the output suggests that in editing and formatting the responses, Vipul appears to have omitted a prompt or two, misidentified one of his own reactions as Bard’s, deleted some of Bard’s responses as redundant, and duplicated others that he found impressive. Sorting through all this was time-consuming and a little frustrating.

For analysis, I found had to create a repaired version of the transcription. I get it; people make mistakes in transcription and editing. (That’s why we have testers.) My purpose in saying this is not to castigate Vipul, but to point out something about The Secret Life of Testing: testers have to deal with what they get: an imperfect product; incomplete data; poteitally misleading reports from other people. What seems simple and straightforward to the client can turn out to be problem-laden, and dealing with problems can take time and effort.

The post was written to LinkedIn on September 30, but the date of his interaction with Bard isn’t specified. Bard was updated 2023.09.27. Did Vipul’s interactions take place before the 27th or after it? Would that matter? Will future updates to Bard invalidate previous attempts at testing it?

This highlights a very troubling difference between LLMs (any software built on machine learning algorithms) and software designed and built by human programmers: the software generated by ML is opaque; so is the process of generating it; and so are the differences between one version and the next.

Another validity problem is that replies from LLMs are variable by design. (See https://writings.stephenwolfram.com/2023/02/what-is-chatgpt-doing-and-why-does-it-work/ and https://benlevinstein.substack.com/p/how-to-think-about-large-language). LLMs produce output stochastically, with the goal of making the text more “creative” or “human-like”. I tried to repeat some of what Vipul said he did, beginning with the same prompt and graphic that Vipul said he used. In the first case, Bard’s responses appeared in a significantly different format from the ones Vipul supplied. In a subsequent attempt, the output was very similar to the output that Vipul got.

Yet another problem is that evaluation of the quality of Bard’s output depends on expertise in at least three domains: testing (and the evaluator’s model of it), banking, and interaction with LLMs. The prompts that Vipul offered to Bard are simple; certainly too simple for the task of analyzing and testing a banking application.

All of these problems make things complicated: are the weaknesses that I perceive in Bard’s output due to errors in the transcription? Is Bard’s ouptut unhelpful due to the calibre of the prompts? Are they intrinsic to Bard, or are they problems with LLMs generally? The answers matter. A report on the usefulness of the tool depends on the reliability of the account. The power and usefulness of any class of tool is to some degree a function of the expertise, skill, and judgment of the person applying it; the version of the tool; the input that gets supplied to it; the product under test; and so forth.

Analysis

Based on the prompts that Vipul supplied, Bard’s output is incurious, redundant, vacuous, and negligent.

In a Context-Driven approach we’d question the idea that there is any one first responsibility for a tester — but if there could be one, it would not likely be “create test cases”. Early responsibilities for a tester, though, would include negotiating the the mission of testing; learning about the domain (and how to learn about it); learning about factors that would enable or constrain the mission. In other words, like the old joke about asking directions in Ireland: “How do you get to Cootehill?” “Ah, well, now, I wouldn’t start from here.” Vipul starts from there.

Since Vipul asked for test cases, Bard’s replies are pointed immediately towards procedures. There is no regard for context, nor coverage of the elements of the product, nor specific quality criteria. Of course, this makes sense: ask an LLM for test cases, and you’ll get test cases. What you won’t get is pushback or questions that a skilled tester would ask. Like this:

I know testing this product is important to you, and I want to help you, but I don’t believe that I can provide you with a responsible answer to your request without some help from you. Could I ask some questions, please?

First, please tell me about this product.

Am I a tester for InfoSys, or for some other business or agency? Might I be testing on behalf of, say, a regulator?

The screen seems to refer to Interest Adjustment Register Maintenance (IARM); is that feature one you’re interested in specifically, or is there more to this?

You’re asking me to write test cases for the screen, but I suspect there’s a lot more to the assignment than that. That is, I assume that we’re testing the functions and features that follow from actions that people perform using this screen, right?

Assuming that your answer is “Yes; right,” please help me to understand the system around the screen.

Is there reference information available for IARM that I can have a look at?

Who are the intended customers or users of this product?

What dimensions of quality might be more significantly important to them? To you?

I see that the product exists. Is it a new product? It isn’t, is it. What might have happened or changed (in the IARM? or in some related system?) that suggests that we should be testing it?

Am I the only tester here? Has anyone else tested this? If so, can I see their results or reports?

It appears that this product is running in something called the DOP Certification Environment. Would that be a safe place for me to experiment or play with the product for a bit to get familiar with it? If not, could you point me to one?

Do you have any particular concerns, problems, or risks that you’d like me to focus on?

Bard did not respond with anything like this. This highlights a problem with LLMs: they are incurious. They don’t ask questions or seek clarification. They don’t think or reason. They’re not designed to do any of that stuff. The responsiblity for doing that lies with the tester. Instead of raising questions, LLMs will tend to placate the user by supplying an answer. It doesn’t matter if the answer is right; it matters that the answer sounds good.

Redundancy

When asked almost the same question, LLMs will tend to placate by supplying almost the same answer, which leads to redundancy that isn’t really helpful. A skilled human tester, asked the same question several time, would probe for problems of misinterpretation. (“Ummm… I thought I answered that. Can you help me understand why you’re asking that again?”)

Bard’s answers are repetitious because the input is repetitious. Three of the five prompts in Vipul’s account are virtually the same:

“Write test cases for this banking software screen. The software is finacle from infosys.”

“Give detailed test cases for Interest Adjustment Register Maintenance”

“Write test cases for An IARM entry to correct an overcharge of interest on a customers account”

This helps to explain the redundancies in the output. Out of the 107 lines of Bard output that Vipul provided, it seems that many lines are repeated exactly.

3 repeats of “Verify that the user can access the IARM screen.”

2 repeats of “Verify that the system can generate reports on IARM activity.”

2 repeats of “Try to create a new IARM entry with invalid data.”

2 repeats of “Try to update an existing IARM entry with invalid data.”

2 repeats of “Try to delete an IARM entry that is associated with other transactions.”

2 repeats of “Verify that the user can view the history of IARM entries.”

2 repeats of “Verify that the user can export IARM entries to a file.”

2 repeats of “Verify that the user can search for IARM entries by various criteria.”

2 repeats of “Verify that the system generates accurate error messages when the user makes mistakes.”

2 repeats of “Verify that the system performs well under load conditions.”

2 repeats of “Verify that the system calculates the interest adjustment amount correctly.”

2 repeats of “Verify that the system can handle complex interest adjustments involving multiple accounts and currencies.”

2 repeats of “Verify that the system can integrate with other banking systems, such as the core banking system and the general ledger system.”

2 repeats of “Verify that the system can be audited to ensure that all IARM transactions are accurate and compliant.”

And Vipul notes twice that he had already removed duplication from an already ponderous set of output!

By the consistency of the answers, one could infer from this that Bard is using a relatively low temperature setting. (Temperature governs the variability of an LLM’s reply.) However, we don’t know what Bard’s tempature setting is — a problem of opacity about the settings for the tool.

Vacuousness

The test cases that Bard provides are focused on confirmation and demonstration. Of the 107 lines of Bard’s output, 45 begin with “verify that”. Some of these lines are banal:

“Verify that the system calculates the interest adjustment amount correctly.”

Is that a test case? Or is that a requirement, with the words “verify that” stapled in front? Is there any notion of what might constitute a correct or incorrect result? Is there an oracle (a means by which we might recognize a problem)? Why not save a bunch of time and say “just make sure everything works, okay?”

Most of those lines are both banal and vague:

“Verify that the system can integrate with other banking systems, such as the core banking system and the general ledger system.”

How is the IARM related to the core banking system and the general ledger system? Does Bard have any insight on this, or is it simply generic text associated with “test cases” and “banking”?

Probably the latter. One reason for banality in responses Bard and other LLMs is that they have been trained on data about testing that’s available on the Web. At best, test cases on the Web are inclined to be general, and not specific to a given product. Even given that, the bulk of examples of test cases on the Web are trite; bromide-laden; happy-path; risk- and context-free. And test cases are not testing!

Some lines are banal and vague and might be hallucinations.

“Verify that the system can handle complex interest adjustments involving multiple accounts and currencies.”

Which multiple accounts? Does this system even deal with multiple currencies? Or is this just another instance of generic test cases associated with banking? Of course, we don’t know, because it’s not clear where Bard is getting this suggestion; more opacity.

At one point, Vipul says “Time to figure out how deep is the rabbit hole.” — and then asks for more test cases.

“Interest Adjustment Register Maintenance – write some scenarios that you would like to test as a banking clerk who is doing user acceptance testing of the software.”

…whereupon Bard responds with a bunch more test cases.

Yet as Vipul says in his narrative, “I am not sure what is IARM so I ask Bard for more details on the concept”. When it comes to finding out how deep the rabbit hole might be, his next prompt is a much better start:

What is Interest Adjustment Register Maintenance entry?

Or at least it would be a good start if it contained more detail, and if we didn’t run into the problem of…

Hallucinations

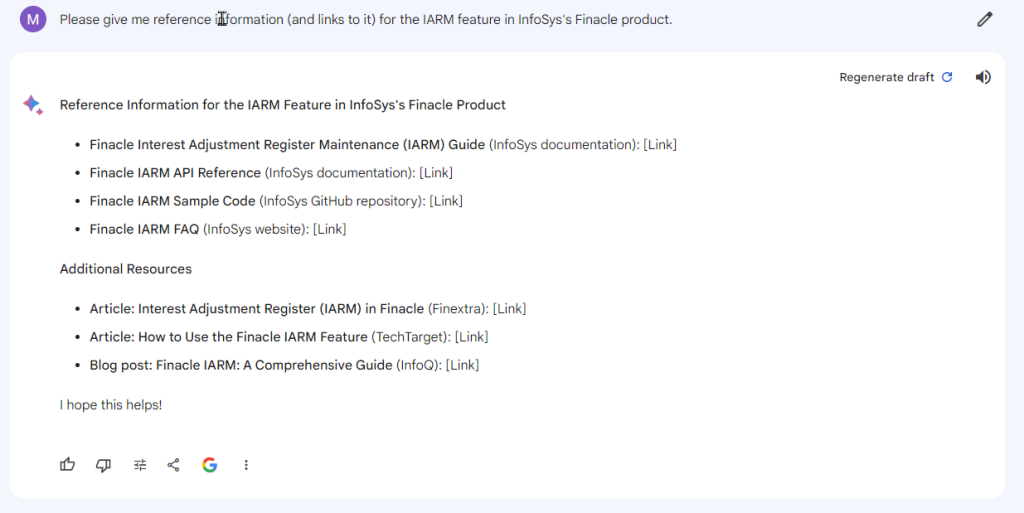

Vipul’s prompt seemed vulnerable to a common tendency is LLMs: Just Making Stuff Up. I went to Bard myself, and asked “Please give me reference information (and links to it) for the IARM feature in InfoSys’s Finacle product.”

Bard replied with this:

Here’s the text of the reply, copied verbatim:

Reference Information for the IARM Feature in InfoSys’s Finacle Product

- Finacle Interest Adjustment Register Maintenance (IARM) Guide (InfoSys documentation): [Link]

- Finacle IARM API Reference (InfoSys documentation): [Link]

- Finacle IARM Sample Code (InfoSys GitHub repository): [Link]

- Finacle IARM FAQ (InfoSys website): [Link]

Additional Resources

- Article: Interest Adjustment Register (IARM) in Finacle (Finextra): [Link]

- Article: How to Use the Finacle IARM Feature (TechTarget): [Link]

- Blog post: Finacle IARM: A Comprehensive Guide (InfoQ): [Link]

I hope this helps!

Note that the reply ends with “I hope this helps!” Well… it doesn’t help much, since none of the [Link]s are actual links; just the word “Link” in square brackets.

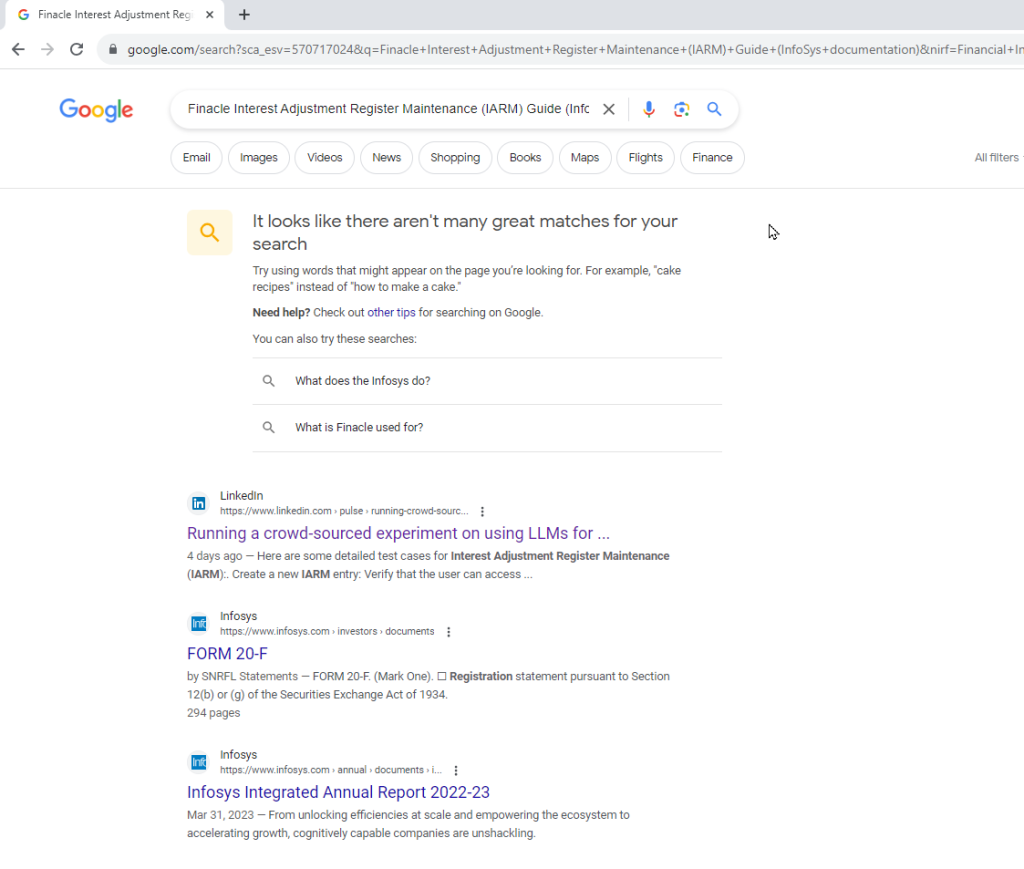

That first entry is “Finacle Interest Adjustment Register Maintenance (IARM) Guide (InfoSys documentation)” When I cut and pasted that into Google, I got this:

Notice: Google can’t find a reference that matches the search string. But as its first response, it provides a link to Vipul’s experiment!

This is very bad. LLMs are trained on data from the internet, and produce unreliable data stochastically based on what’s there. This leads to a feedback loop, such that LLMs internet will very shortly be trained on the unreliable output from LLMs. This runs the risk that people wanting to do research based on facts will find that facts are being drowned out by noise. I have no reason to believe that LLMs or any other form of AI will take over the world. But they stand a very good chance of making internet search unusable.

How about another seach term? The second link-that-isn’t-a-link , “Finacle IARM API Reference (InfoSys documentation)”, eventually leads me to InfoSys’s API documentation, which is behind a registration wall.

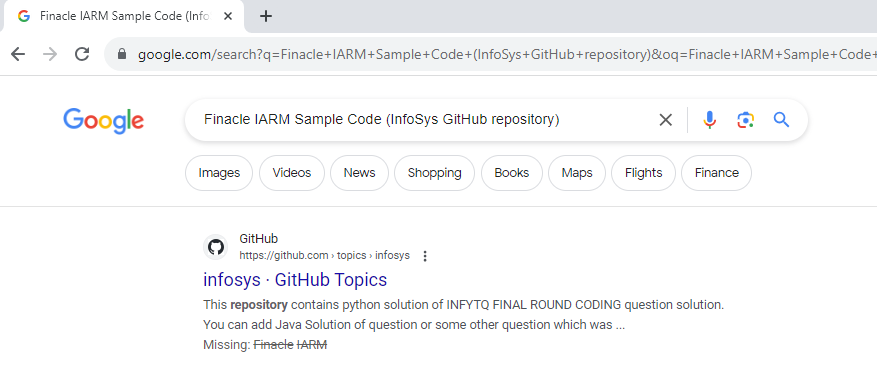

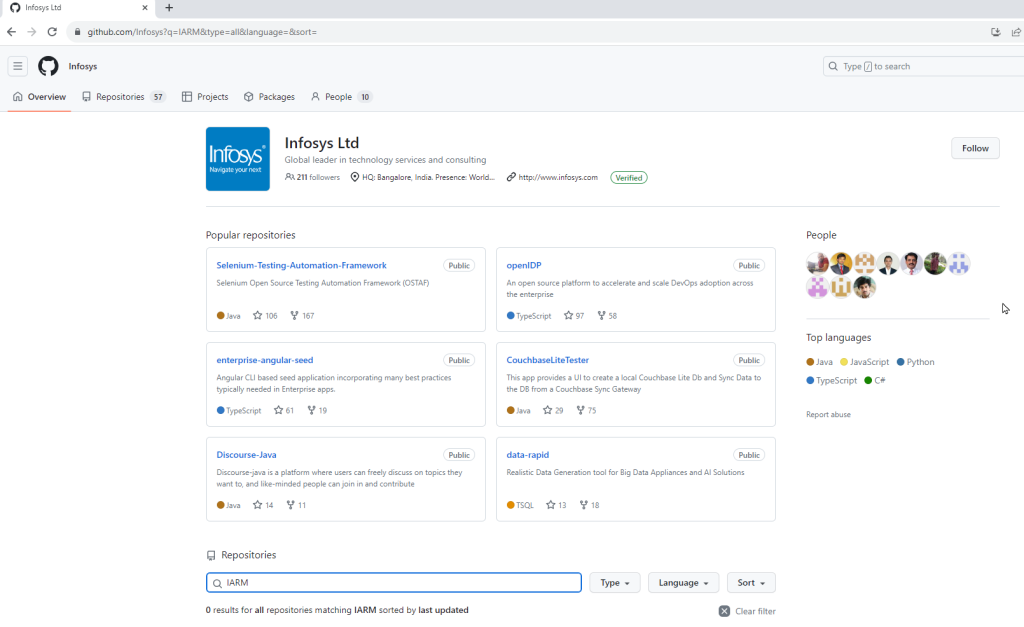

How about “Finacle IARM Sample Code (InfoSys GitHub repository)”?

Notice: Finacle IARM is missing from the search results. And when I go to the link…

0 results for IARM.

So here’s another problem with opacity: one possibility is that there is an actual Finacle Interest Adjustment Register Maintenance (IARM) Guide somewhere in Bard’s training data. But if that’s so, and if Bard could get at it, why can’t I? Is Bard getting access to stuff that’s proprietary to InfoSys? If there’s stuff not available on the regular Web, but that is available on the Dark Web, does Bard have acccess to that? Any of those would be rather bad.

I suspect, though, that there’s a simpler explanation: everything that Bard is suppying here is hallucination.

Laziness (or Blindness?)

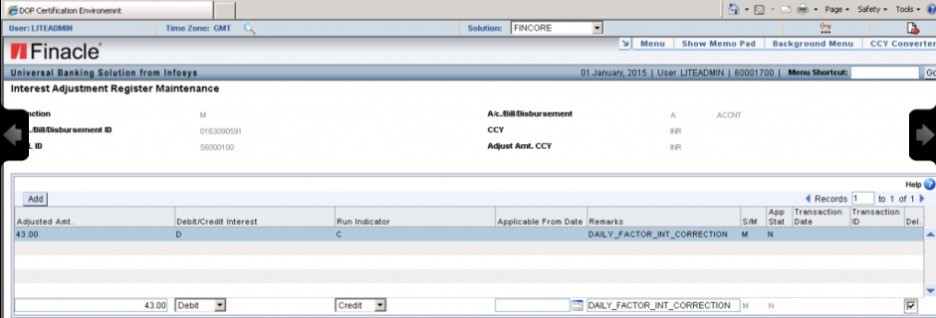

In the first prompt, Vipul supplies this image.

It’s not clear where Vipul got the image. It seems to be a cropped version of an image on the same site that Bard links to in its first reply. Did Vipul point Bard to that site in a part of the conversation we didn’t see? Did Vipul grab the image from that site himself, and then crop it? We don’t know. (I hope Vipul can help us by letting us know.)

And it’s not clear (more opacity) where Bard is getting its data. Here’s the beginning of the reply to Vipul’s first prompt.

Here are some test cases for the Finacle banking software screen from your image. (My emphasis, there.)

One might guess that there’s an optical character recognition step in the process of interpreting the input and preparing the output, and that’s how Bard develops its focus on Interest Adjustment Register Maintenance (IARM appears in 76 of the 107 lines of text in Bard’s output). Yet if an OCR function is recognizing “Interest Adjustment Register Maintenance”, why does it ignore the other fields and data values that are plainly visible or that can be inferred easily? I can see, offhand,

- Function,

- A/c./BillDisbursement ID

- SOL ID

- A/c./BillDisbursement

- CCY

- Adjust Amt. CCY

…and that’s just in the window in the middle of the image. There are also columns and values in the table below. There are fields and dropdowns at the bottom (Debit, Credit, DAILY_FACTOR_INT_CORRECTION). To the left of the latter, there’s an odd little icon, and an empty box that appears to be a text input field. I see I see User LITEADMIN, which implies a variety of user roles; what might they be?

The existence of these things on the screen suggests a complex product underneath. There are plenty of variables hinted at by the user interface, and we’d have to be that they’re interdependent.

At that, Bard is either blind to the fields on the screen, or too “lazy” to include anything to do with them in its list of test cases. I suppose that’s not an exclusive OR.

Overall Approach

The Critic mindset requires us to look for trouble. It’s very helpful to test strategy, design, and performance of testing to point the periscope to where the trouble might be — and bthat starts with talking about problems and risk.

Vipul’s prompts don’t help a tester with test strategy, and Bard doesn’t either. There is no instruction or advice to the tester to look for problems, nor on how to look for problems, nor what might constitute a problem. In fact, none of the words “risk”, “bug”, “defect”, or “oracle” show up at all. “Problem” does:

“IARM entries are important because they ensure that customers are charged the correct amount of interest on their accounts. IARM entries are also used to track and report on interest adjustments, which can help banks to identify and address potential problems.”

It sure would be nice to have examples or instances of those problems so testers might consider looking for them, but there’s not of that from Bard’s description.

“Error” appears in three places. Two are in test cases:

“Verify that the system generates accurate error messages when the user makes mistakes.”

“Verify that the system generates accurate error messages when the user makes mistakes.”

One is in the description of IARM’s purposes:

“Correcting an error in the interest calculation”

When we steer testing towards verification, we’re attempting to show the the product can work. The expression “verify that” appears in 45 of the 107 lines of Bard’s output. The things to be verified are not at specific, but hopelessly general. The “test cases” are essentially very vague requirements statements, applicable to practically any system that processes data, with the words “Verify that” in front of them. You can try this yourself: go to Bard’s output, search for “IARM entry” and replace it with “record”, and see how many of the test cases still make sense.

The coverage offered by the test cases that Bard supplies is shallow. There is no indication of what specific functions or subfunctions might be exercised.

“Data” is mentioned; so is “invalid data”:

Verify that the user can create a new IARM entry with valid data.

Verify that the user can update an existing IARM entry with valid data.

Verify that the user cannot create or update an IARM entry with invalid data.

Try to create a new IARM entry with invalid data.

Try to update an existing IARM entry with invalid data.

Try to create a new IARM entry with invalid data.

Try to update an existing IARM entry with invalid data.

Try to create a new IARM entry with invalid data (e.g., a negative interest amount).

But all this is enormously vague. There’s only one example of something that might be invalid, and it refers to one field. There’s no reference to where data might be described, and no suggestion to the tester to go find it out.

There is no mention of testing on varying platforms, display resolutions, browsers… The coverage of usability was, alas, redacted from Vipul’s account. There is reference to performance…

“Verify that the system performs well under load conditions”

…but what does that really mean, specifically? Again, this is another instance of a requirement with the words “Verify that” stapled on.

All this is to be expected when the prompts are so strongly focused on test cases. This is a very Routine-School prompt, and a Routine-School assignment for the tester, bordering on the irresponsible.

I acknowledge that “irresponsible” is a pretty strong word here, since Vipul is not performing this work in the context of a real testing assignment. But Vipul is also suggesting that Bard is providing good and useful material for testing. Moreover, Vipul’s claims are:

AI will be able to do in near future what a very large percentage of testers do today, for test design and execution. In fact, I had predicted that it will happen, way back in 2008/2009.

It is now just a matter of a little time and a little work on training the models with the right set of data for it to be able to do a good job of test idea generation. Will it surpass geniuses? Possibly not. Not at least in the next 5 years but I believe that too will happen sooner or later.

My response is that I see no evidence of Vipul’s claims being anything other than a pipedream unless one considers “what a very large percentage of testers do today” as the sort of weak stuff that Bard is producing. Here’s the tough reality about that: there are indeed plenty of testers who are doing work that consists of pasting the words “Verify that” in front of statements found in requirements documents.

If a tester showed up at an interview presenting Bard’s output as sample work, I would have some pretty serious questions about how the tester had reasoned about his/her mission, reflected on it, and questioned it. If I were a manager new to that tester, I would ask that tester to put down the test cases and back slowly away from them. Then, as an intervention to help break the test case addiction, I’d ask the tester prepare a product coverage outline, a risk list, and a test strategy; and then we can talk about how to test the product. And I would train the tester, if needed, to do so.

Were I analyzing that tester’s management on behalf of a client, I’d have some pretty serious questions about the state of test management in that organization. Once again, test cases are not testing.

As above: are the weaknesses that I perceive in Bard’s output due to errors in the transcription, the calibre of the prompts, or are they intrinsic to Bard, or are they problems with LLMs generally? The answer, it seems, is all four.

This experience suggest to me that LLMs will present no end of time and trouble for serious testers. And the real trouble is, most Supporters won’t notice that.

Michael, it’s a very extensive piece of Critique, thank you. From the evidence you supplied its obvious (to me, a cdt tester) that asking public LLM, trained on internet data, simplistic questions is rather useless. I foresee two directions on where this might be developed: LLM trained on specific validated data sets that include more context. The questions about the specific product cannot be asked on the internet, but there is certainly a domain knowledge documentation, problem reports of the past, some evidence on risk and risk mitigation, product and business overview presentations, decision making documentation and training — in the company which develops this product. So it’s the source.

Second direction is actually taking the LLM into the role of a skilled tester. What I see is lacking in Vipul’s approach, is exactly that. You need to be skilled in order to ask the questions about context. The questions which you notice Bard is not asking – it will not, unless you prompt it to raise them. I have seen this behavoir “requirement sentence turned into testcase” with slapping “Validate that” in front – from the real people. It’s unprofessional and simply awful. But it exists and populates internet in tons. But there is also RST and your and James articles out there, on the internet. There are critical thinking articles, principles of logic explained, etc. LLM doesn’t have the role by default. If its asked to find garbage in garbage- it will do that. Working with prompt to get the behavior and information needed – it’s a skill. Ironically, LLM will not replace ignorant tester with the skilled one, if the ignorant tester will be driving it. It will not make you smarter. You can make it smart.

The interesting challenge of Tomorrow is to raise skilled engineers to collaborate with LLMs.

It’s obvious (to me, a cdt tester) that asking public LLM, trained on internet data, simplistic questions is rather useless.

Indeed. And yet this is being touted with great excitement and little sobriety.

It’s certainly possible that with more context in both the training data and the prompt, one might get more useful results. The catch that I can anticipate is that evaluating risk analysis requires not only data (from the Web and/or from the organization), but also actual expertise and social competence.

I’m not quite sure it’s right to say that, with prompting, we can make LLMs smart, as such. We might be able to get them to produce results more refined, more to our liking; to our taste, as it were. But that requires us to be more discerning ourselves — so, in a way, back to square one.

Your thoughtful analysis of the crowd-sourced experiment is insightful and well-articulated. It sheds light on the potential of LLMs in testing, offering a comprehensive and engaging perspective. Great work!