ChatGPT may produce inaccurate information about people, places, or facts.

https://chat.openai.com/

Testing work comes with a problem: the more we test, the more we learn. The more we learn, the more we recognize other things to learn. When we investigate a problem, there’s a non-zero probability that we’ll encounter other problems — which in turn leads to the discovery of more problems. In the Rapid Software Testing namespace, we’ve come to call this a bug cascade.

Intriguing aspects of these new problems may prompt further investigation. That investigation might represent deeper testing on the original mission, a minor, momentary digression from that mission, or an entirely new line of inquiry. We can't know which in advance, and we can't be sure of it in the moment, either.

This reminds me of a passage in Billy Vaughan Koen’s Discussion of the Method; for “engineer”, read “tester”:

“The engineer doesn’t know where he is, where he is going, how he will get there, or if anyone will care when he does…The final state always has a reality that the engineer at the initial state cannot anticipate.”

Billy Vaughan Koen, Discussion of the Method

(Koen was aware that engineers are not necessarily “he”. My recollection is that in the book, by convention, and for consistency, Koen refers to the engineer as “he” and the project manager as “she”.)

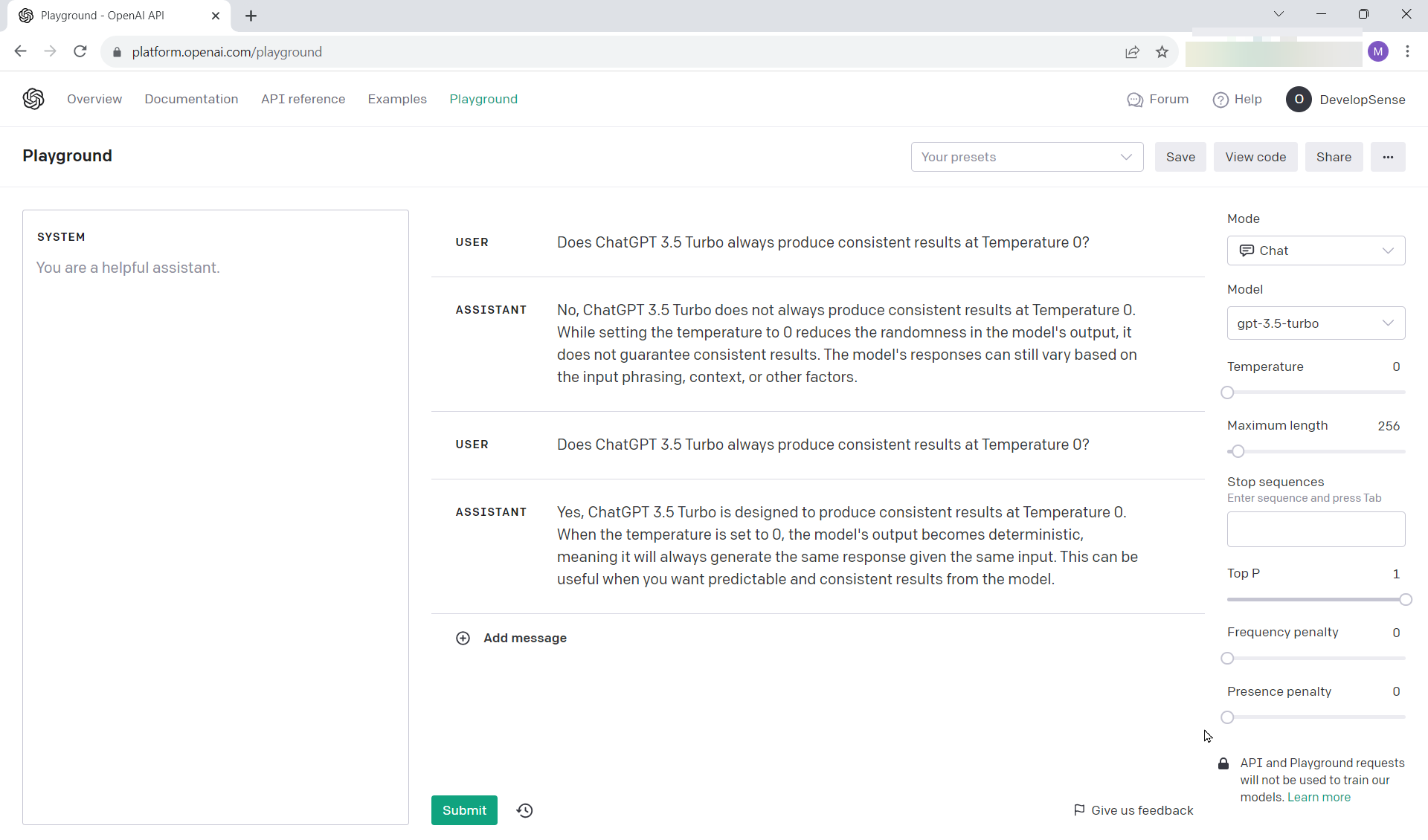

This played out for me today. While preparing a report on a very long set of interactions with Bing Chat, I wanted to fact-check my belief that Temperature 0 would produce highly consistent results from one prompt to the next. I went to the OpenAI playground, chose the gpt-3.5-turbo model, and provided this prompt:

“Does ChatGPT 3.5 Turbo always produce consistent results at Temperature 0?” (I erred by referring to “ChatGPT 3.5 Turbo”, instead of “gpt-3.5-turbo”; it was a spontaneous and not carefully controlled test.)

The first reply:

“No, ChatGPT 3.5 Turbo does not always produce consistent results at Temperature 0. While setting the temperature to 0 reduces the randomness in the model’s output, it does not guarantee consistent results. The model’s responses can still vary based on the input phrasing, context, or other factors.”

Hmmm… is that right? So I asked again, using exactly the same prompt, at exactly the same settings. The reply:

“Yes, ChatGPT 3.5 Turbo is designed to produce consistent results at Temperature 0. When the temperature is set to 0, the model’s output becomes deterministic, meaning it will always generate the same response given the same input. This can be useful when you want predictable and consistent results from the model.”

As it turns out, the first reply is right, and the second is entirely wrong. Here is what OpenAI claims:

OpenAI models are non-deterministic, meaning that identical inputs can yield different outputs. Setting temperature to 0 will make the outputs mostly deterministic, but a small amount of variability may remain.

https://platform.openai.com/docs/models/gpt-3-5
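For readers unfamiliar with the setting, here is a minimal sketch of how temperature is commonly described in textbook accounts of sampling: raw scores ("logits") are divided by the temperature before a softmax, and temperature 0 is treated as a special case meaning "always pick the highest-scoring candidate" (greedy decoding). This is an assumption for illustration, not OpenAI's implementation; the function names and logit values are hypothetical. It also shows one plausible way "mostly deterministic" can arise: when two candidates are nearly tied, a tiny numerical perturbation is enough to change which one wins.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; use greedy_pick for temperature 0")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_pick(logits):
    """Assumption: temperature 0 is treated as argmax (first index wins on an exact tie)."""
    return max(range(len(logits)), key=lambda i: logits[i])

def sample(logits, temperature, rng):
    """Pick a candidate index: greedily at temperature 0, otherwise by weighted random draw."""
    if temperature == 0:
        return greedy_pick(logits)
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]

# Hypothetical scores for two candidate first words, index 0 = "No", index 1 = "Yes".
logits = [2.000001, 2.0]

rng = random.Random()
print(sample(logits, 0, rng))    # 0, i.e. "No", every time
print(sample(logits, 1.0, rng))  # roughly a coin flip between 0 and 1

# Nudge the second score by 2e-6, slightly more than the 1e-6 gap: the greedy choice flips.
perturbed = [logits[0], logits[1] + 2e-6]
print(greedy_pick(perturbed))    # 1, i.e. "Yes"
```

In this toy model, greedy decoding is only as stable as the ordering of the top scores; if anything upstream nudges those scores even slightly, "the same input" no longer guarantees the same output.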

It’s not clear to me whether the more-correct first response is a result of “default to a correct answer” or just a matter of good luck.
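One way to move from "luck or default?" toward evidence is to ask the same question many times at the same settings and tally the distinct replies. The sketch below assumes a caller-supplied function `ask(prompt)` that returns the model's reply as a string; how that function reaches the model is deliberately left out, and the `flaky_model` stub standing in for it is hypothetical. Collapsing whitespace before comparing is a design choice: formatting noise isn't counted as a different answer, but any change in wording is.

```python
import itertools
from collections import Counter

def tally_replies(ask, prompt, trials):
    """Send the same prompt `trials` times and count how often each distinct reply appears."""
    counts = Counter()
    for _ in range(trials):
        reply = ask(prompt)
        # Collapse runs of whitespace so formatting noise isn't counted as a different answer.
        normalized = " ".join(reply.split())
        counts[normalized] += 1
    return counts

# Hypothetical stand-in for a model: alternates between two contradictory answers.
_answers = itertools.cycle(["No, not always.", "Yes,  always."])

def flaky_model(prompt):
    return next(_answers)

prompt = "Does ChatGPT 3.5 Turbo always produce consistent results at Temperature 0?"
results = tally_replies(flaky_model, prompt, trials=10)
for reply, n in results.most_common():
    print(f"{n:3d}  {reply}")
```

A single distinct reply across many trials is evidence of consistency, not proof of it; two or more distinct replies are enough to refute "always".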

In any case, in testing, the facts tend to be pretty important. Once again, ChatGPT and other LLMs are not designed to produce answers that are right, but answers that sound good. If you’re using a Large Language Model in testing work, it’s critical to note OpenAI’s warning that begins this post.

And now… back to analyzing and documenting that very long set of interactions with Bing Chat.

Postscript: I asked again a couple of times. If the link doesn’t work, you can see the results below.
