If names be not correct, language is not in accordance with the truth of things.

Confucius, Analects

There are lots of problems in software development, but one of the bigger ones is muddled process talk that leads to muddled process thinking.

An email arrived in my inbox, touting an article titled “The Shift-Left Approach to Software Testing”.

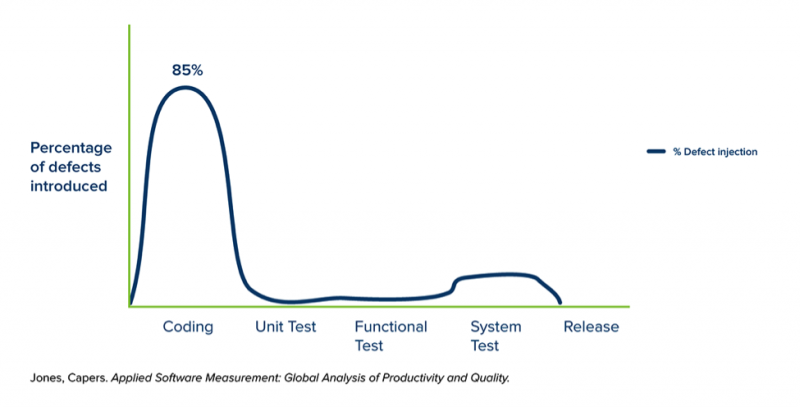

As it turns out, the article was written in 2018, and the graphic above appears to come from a book written in 2008. Since the email arrived only the other day, I was briefly fooled into thinking that the content might be fresh, because there are so many articles and blog posts and conference presentations that have been recycling the same folklore for five years, for fifteen years, and throughout my 35-year career in software.

Here’s a graphic from the article. What’s wrong with it?

Maybe you believe, as I do, that “defect” is a problematic term. (Here’s a blog post, even older than the article, that explains why.) If so, I agree, but that’s not what really bothers me about the article. So what else is wrong with it?

Here’s the biggest problem I see: whether you call them “defects”, bugs, errors, mistakes, issues, or problems, they’re not introduced or injected during unit testing, functional testing, or system testing.

Problems are introduced during development. Development work isn’t necessarily coding. The collecting and discussion and refinement of requirements is development work. The design of the product is development work too.

The author of the article says “Whether they make mistakes, misunderstand the requirements, or don’t think through the ramifications of a particular piece of code, developers introduce defects as the code is produced.” This is an example of what Jerry Weinberg called the Hot Potato Theory of Software Problems: whoever had the product last is responsible (that is, to blame) for the problem. Testers are often tossed the hot potato; in this case, it seems that it’s getting tossed to developers.

A developer is not always in a position to know whether someone’s description of a requirement is a complete, necessary, sufficient, accurate, up-to-date description. The “requirement” being described may not be an actual requirement for the product, but rather a choice, or a preference, or a guess.

Irrespective of the development process model, testing happens in response to various development activities: requirements development, architectural design, specification, coding… and fixing. The testing that happens in response to these activities may reveal problems that originated in any of the preceding ones.

Recognition of those problems may trigger new bursts of requirements development, design, specification, new coding, or fixing. That is: recognition of problems may trigger repairs to problems introduced in previous steps. Those repairs may be the cause of new problems, or may leave elements of the original problems unsolved, to be revealed by further testing, which in turn leads to more fixing.

Maybe that’s what the author of the article (or the author of the graphic) means: that “defects” are discovered through testing focused on units, on specific functions, or on the whole system; and new “defects” are introduced or injected by fixes that are responses to problems discovered by that kind of testing.

A similar pattern is evinced by the question “why is testing taking so long?”, or the mandate “we’ve got to reduce the time we spend on testing!”. Much of the time, testing isn’t taking very long at all. Instead, it’s fixing that’s taking the “extra” time. Moreover, that “extra” time isn’t really extra at all, for the most part. Instead, more often, it’s time that we didn’t take to study, analyze, challenge, and critique (that is, to test) earlier work.

We’re unlikely to get much better at software development unless we learn to keep our language and our concepts clear. Muddled process models and muddled process talk undermine our ability to think and communicate clearly, and to make progress.

Of course, suspicious statistics are problematic as well.

I think another dimension to consider is “not all problems are equal”. As a tester, I like to push back a bit when having more unit tests is presented as some sort of panacea that will deliver massive value in terms of cost and effort saved down the track. For a certain very specific class of problems, in very particular circumstances, this might be true. But I think we get more value in terms of “shift left” by looking at where the really important problems are more likely to be found, and that, in my experience, is almost invariably in gaps in our understanding and in miscommunications that occur well before coding starts, possibly even before the budget for a project is approved. Those are the problems that really cost us.

A well-thought-through and well-understood product, with average code quality and spotty unit test coverage, is far more likely to get to market on budget and on time than an incredibly well-coded product with amazing unit test coverage that made a few too many unvalidated assumptions when the product was conceived.

I really feel like this is a point we need to keep on making when talking about shifting left.