Last night, my wife was out on an errand in our car. She parked it, entered the store, and came out again. She tried to start the car. It wouldn’t start.

She called home to consult with me. We tried a couple of things over the phone. We considered a couple of possible problems. From what I could tell, the starter motor wasn’t engaging. Not exactly a surprise, because I had noticed that the car had been a little reluctant to start over the last little while. We decided to call the auto club to have the car towed to our mechanic’s shop, close to our home.

When the tow truck driver arrived, he tried to start the car. It started. The tow was free, thanks to the auto club, so we decided to have it towed anyway.

I walked down to meet the driver at the mechanic’s shop, to pick up the key—the shop was closed, and there isn’t a drop box. The driver unloaded the car from the flatbed, started it, and parked it. He left the car running. I got in, turned it off, and then started it. Once again, the car seemed a little cranky. Nonetheless, it started.

We’re going to Montreal in a couple of days—a six-hour drive. We’ll need the car to get there, to get around the city, to drop in on some friends on the way back, and to get home. We’ve established that the car can work. Does that mean everything is okay, or would you have the mechanic take a look at the car?

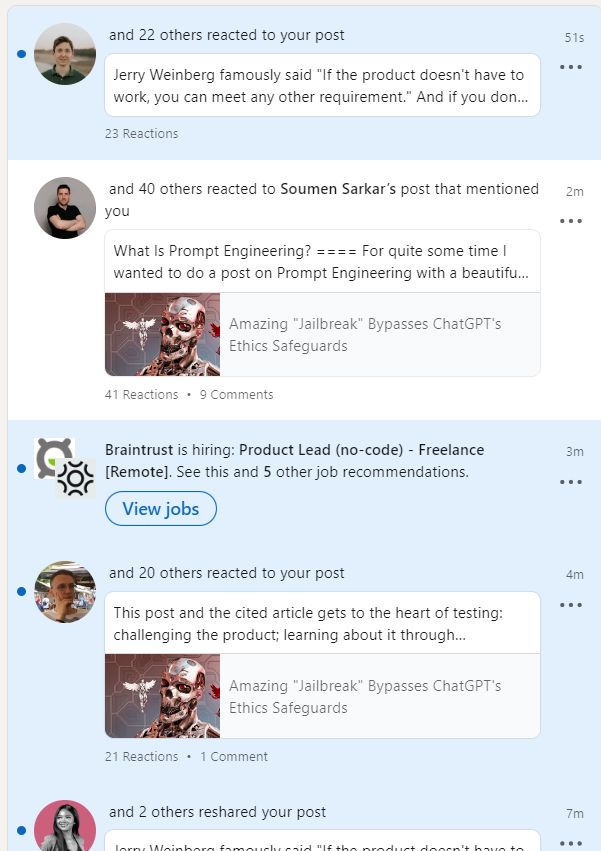

The other day, I was on LinkedIn. I noticed an interesting behaviour: account names were suddenly missing from my notifications (they usually appear beside the picture).

I wrote a brief post to note the issue — and in the post, I noted that a few minutes later, the names were back.

One correspondent replied that this was “pretty impressive fault tolerance and recovery if you think of it that way.”

Well… maybe. But as a tester, I don’t think that way. As a tester, I must focus on trouble; on risk; on the idea that I’m seeing evidence of a deeper problem.

As testers, we must remain alert to any symptom, anything that seems out of place, a hissing sound, a grinding sound, an inconsistency, missing text, or a starter that sometimes doesn’t work.

Today, reviewing my email backlog, I saw this:

This — missing user data from a different day — seems to be evidence of a more systemic problem. To a tester, the fact that some message notifications seem to be displayed properly is unremarkable. The important thing is that some don’t.

All this reminds me of Richard Feynman (the patron saint of software testers), and the appendix he wrote to the Report of the Presidential Commission on the Space Shuttle Challenger Accident.

Feynman noted that NASA officials took exactly the wrong interpretation from problems that had been observed on previous shuttle flights — erosion and blow-by. (Erosion refers to the degradation of the rubber O-rings that kept hydrogen and oxygen separate from one another; blow-by refers to the escape of gases through those degraded seals.)

The phenomenon of accepting for flight, seals that had shown erosion and blow-by in previous flights, is very clear. The Challenger flight is an excellent example. There are several references to flights that had gone before. The acceptance and success of these flights is taken as evidence of safety. But erosion and blow-by are not what the design expected. They are warnings that something is wrong. The equipment is not operating as expected, and therefore there is a danger that it can operate with even wider deviations in this unexpected and not thoroughly understood way. The fact that this danger did not lead to a catastrophe before is no guarantee that it will not the next time, unless it is completely understood. When playing Russian roulette the fact that the first shot got off safely is little comfort for the next. The origin and consequences of the erosion and blow-by were not understood. They did not occur equally on all flights and all joints; sometimes more, and sometimes less. Why not sometime, when whatever conditions determined it were right, still more leading to catastrophe?

In spite of these variations from case to case, officials behaved as if they understood it, giving apparently logical arguments to each other often depending on the “success” of previous flights.

Richard Feynman

Report of the PRESIDENTIAL COMMISSION on the Space Shuttle Challenger Accident

Appendix F: Personal Observations on the Reliability of the Shuttle

(emphasis mine)

Here, Feynman is looking beyond the technical problem and identifying a social and psychological problem. As Diane Vaughan puts it in her splendid analysis, the problem was the normalization of deviance:

The decision (to launch) was not explained by amoral, calculating managers who violated rules in pursuit of organizational goal, but was a mistake based on conformity — conformity to cultural beliefs, organizational rules and norms, and NASA’s bureaucratic, political, and technical culture.

Diane Vaughan, The Challenger Launch Decision

I worry that the software business hasn’t learned from NASA’s experience.

Dear testers, and dear developers: can work does not mean does work, and seems to work now does not mean will work later. A problem that appears briefly and seems to go away is evidence that we have an inconsistent system. That’s not an invitation to shrug; it’s a motivation to investigate.

The car’s at the shop. We’ll be getting it back tomorrow.

I once worked on a project developing mission critical emergency radio communications infrastructure. One of my testers noticed a barely audible ticking sound in the audio output of a prototype radio. To be honest, I couldn’t hear it, but when we hooked the device up to a scope, an anomalous signal was definitely there… We decided to investigate further…

It turned out that the digital signal processor in the device was periodically losing synchronization with the control software. Goodness knows how this would have manifested in the field, but lets just say absolutely no one was prepared to take the risk of going to market until it was fixed.

Yikes.

I guess the point as it relates to your post is – all our scripted tests were passing. There was no functional issue. As far as we could tell we had a fully featured working prototype. It would have been easy to ignore this issue, and if our mindset had been different and we were just ticking boxes – we could have let it slide. But by this stage exploratory testing was a big part of our process, and we encouraged active investigation. So we caught it

Definitely good of you to get your car checked!!

I also noticed on LinkedIn (5th Feb) that there was an issue with profile pictures not being shown. Not just mine, but people in my messages & network as well.

I emailed support to let them know, but by the time they tested it everything was working. It was working by then on my end, too. Their reply to my email suggested I try a few things, telling me what browsers they support, not to use incognito mode, etc. But the fact is, I’d been accessing LinkedIn every day from the same computer, same browser, without the issue happening… So, not a browser/device issue.

Anyway, was interesting to note around the same time you saw the ‘names missing’ bug!! Wonder what’s going on at LinkedIn… hopefully it is being looked into!!

And, on another note, my oven has been very occasionally not heating up. The gas works, the power is on, I think the ‘heating element’ is not reaching the required temperature to ignite the gas. This seems to be one of the first things that fails with this type of oven, from my research. It has happened about 5 times, but after 24 hours the oven is working again, so I never bothered my landlord. Until last week, when it wouldn’t heat up 2 days in a row.

Then, when the landlords came over to check it out, it worked of course (which is what I suspected it would do!). They probably felt I was wasting their time… at least they said if it happens again, text them so they can check it out. They will buy me a new oven rather than replacing the part, if it keeps malfunctioning. Very annoying to know there is a problem but not able to ‘prove’ it at the time I need to show it’s real!