Warning: this is another long post. But hey, it’s worth it, right?

Introduction

This is an experience report of attempting to perform a session of sympathetic survey and sanity testing on a “test automation” tool. The work was performed in September 2021, with follow-up work November 3-4, 2021. Last time, the application under test was mabl. This time, the application is Katalon Studio.

My self-assigned charter was to explore and survey Katalon Studio, focusing on claims and identifying features in the product through sympathetic use.

As before, I will include some meta-notes about the testing in indented text like this.

The general mission of survey testing is learning about the design, purposes, testability, and possibilities of the product. Survey testing tends to be spontaneous, open, playful, and relatively shallow. It provides a foundation for effective, efficient, deliberative, deep testing later on.

Sanity testing might also be called “smoke testing”, “quick testing”, or “build verification testing”. It’s brief, shallow testing to determine whether the product is fit for deeper testing, or whether it has immediately obvious or dramatic problems.

The idea behind sympathetic testing is not to find bugs, but to exercise a product’s features in a relatively non-challenging way.

Summary

My first impression is that the tool is unstable, brittle and prone to systematic errors and omissions.

A very short encounter with the product reveals startlingly obvious problems, including hangs and data loss. I pointed Katalon’s recorder at three different Web applications. In each case, Katalon’s recording functions failed to record my behaviours reliably.

I stumbled over several problems that are not included in this report, and I perceive many systemic risks to be investigated. As with mabl, I ran into enough problems on first encounter with the product that they swamped my ability to stay focused and keep track of them all. I did record a brief video that appears below.

Both the product’s design and documentation steer the user—a tester, presumably—towards very confirmatory and shallow testing. The motivating idea seems to be recording and playing back actions, checking for the presence of on-screen elements, and completing simple processes. This kind of shallow testing could be okay, as far as it goes, if it were inexpensive and non-disruptive, and if the tool were stable, easy to use, and reliable—which it seems not to be.

The actual testing here took no more than an hour, and most of that time was consumed by sensemaking, and reproducing and recording bugs. Writing all this up takes considerably more time. That’s an important thing for testers to note: investigating and reporting bugs and preparing test reports are important, but they present opportunity cost against interacting with the product to obtain deeper test coverage.

Were I motivated, I could invest a few more hours, develop a coverage outline, and perform deeper testing on the product. However, I’m not being compensated for this, and I’ve encountered a blizzard of bugs in a very short time.

In my opinion, Katalon Studio has not been competently, thoroughly, and broadly tested; or if it has, its product management has either ignored or decided not to address the problems that I am reporting here. This is particularly odd, since one would expect a testing tools company, of all things, to produce a well-tested, stable, and polished product. Are the people developing Katalon Studio using the product to help with the testing of itself? Neither a Yes nor a No answer bodes well.

It’s possible that everything smooths out after a while, but I have no reason to believe that. Based on my out-of-the-box experience, I would anticipate that any tester working with this tool would spend enormous amounts of time and effort working around its problems and limitations. That would displace time for achieving the tester’s presumable mission: finding deep problems in the product she’s testing. Beware of the myth that “automation saves time for exploratory testing”.

Setup and Platform

I performed most of this testing on September 19, 2021 using Chrome 94 on a Windows 10 system. The version of Katalon Studio was 8.1.0, build 208, downloaded from the Katalon web site (see below).

During the testing, I pointed Katalon Studio’s recorder at Mattermost, a popular open-source Slack-like chat system with a Web client; at a very simple Web-based app that we use for an exercise in our Rapid Software Testing classes; and at CryptPad Kanban, an open-source, secure kanban board product.

Testing Notes

On its home page, Katalon claims to offer “An all-in-one test automation solution”. It suggests that you can “Get started in no time, scale up with no limit, for any team, at any level.”

I started with the Web site’s “Get Started” button. I was prompted to create a user account and to sign in. Upon signing in, the product provides two options: Katalon Studio, and Katalon TestOps. There’s a button to “Create your first test”. I chose that.

A download of 538MB begins. The download provides a monolithic .ZIP file. There is no installer, and no guide on where to put the product. (See Bug 1.)

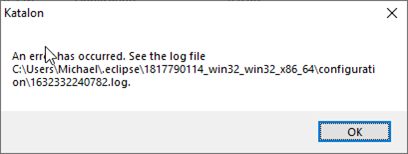

I like to keep things tidy, so I create a Katalon folder beneath the Program Files folder, and extract the ZIP file there. Upon starting the program, it immediately crashes. (See Bug 2.)

The error message displayed is pretty uninformative, simply saying “An error has occurred.” It does, however, point to a location for the log file. Unfortunately, the dialog doesn’t offer a way to open the file directly, and the text in the dialog isn’t available via cut and paste. (See Bug 3.)

Search Everything to the rescue! I quickly navigate to the log file, open it, and see this:

java.lang.IllegalStateException: The platform metadata area could not be written: C:\Program Files\Katalon\Katalon_Studio_Windows_64-8.1.0\config\.metadata. By default the platform writes its content under the current working directory when the platform is launched. Use the -data parameter to specify a different content area for the platform. (My emphasis, there.)

That -data command-line parameter is undocumented. Creating a destination folder for the product’s data files, and starting the product with the -data parameter does seem to create a number of files in the destination folder, so it does seem to be a legitimate parameter. (Bug 4.) (Later: the product does not return a set of supported parameters when requested; Bug 5.)
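As a rough illustration of the workaround, here is a minimal Python sketch that checks whether a folder is actually writable and then builds a launch command using the -data parameter named in the log message. The function names and any paths passed in are my own placeholders, not anything from Katalon’s documentation, and the only parameter assumed is the -data one that the error message itself mentions.

```python
from __future__ import annotations

import tempfile
from pathlib import Path


def is_writable(directory: Path) -> bool:
    """Return True if a temporary file can actually be created in `directory`."""
    try:
        with tempfile.TemporaryFile(dir=directory):
            return True
    except OSError:
        # Covers a missing folder as well as a permissions failure.
        return False


def launch_command(exe: Path, data_dir: Path) -> list[str]:
    """Build an argument list pointing the platform at a writable data area.

    `exe` and `data_dir` are supplied by the caller; "-data" is the parameter
    named in the error message, and nothing else is assumed about the product.
    """
    data_dir.mkdir(parents=True, exist_ok=True)
    if not is_writable(data_dir):
        raise PermissionError(f"cannot write to {data_dir}")
    return [str(exe), "-data", str(data_dir)]
```

The resulting list could be handed to `subprocess.Popen`. Testing for writability by creating a real temporary file, rather than inspecting permission bits, is deliberate: a folder such as Program Files can look accessible and still refuse the write.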

I moved the product files to a folder under my home directory, and it started apparently normally. Help/About suggests that I’m working with Katalon Studio v. 8.1.0, build 208.

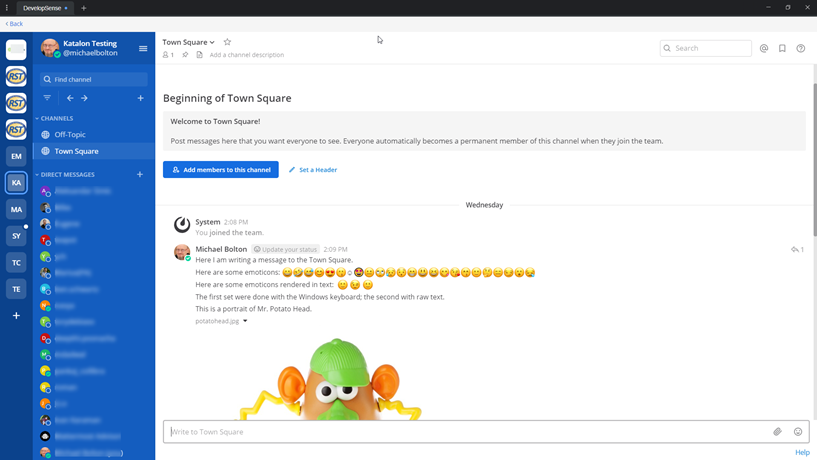

I followed the tutorial instructions for “Creating Your First Test”. As with my mabl experience report, I pointed Katalon Recorder at Mattermost (a freemium chat server that we use in Rapid Software Testing classes). I performed some basic actions with the product: I entered text (with a few errors and backspaces). I selected some emoticons from Mattermost’s emoticon picker, and entered a few more via the Windows on-screen keyboard. I uploaded an image, too.

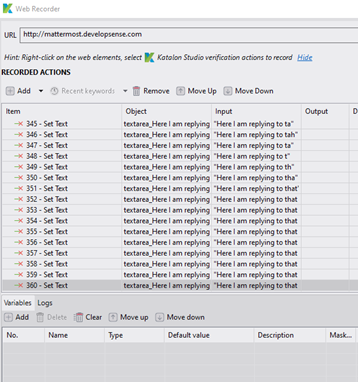

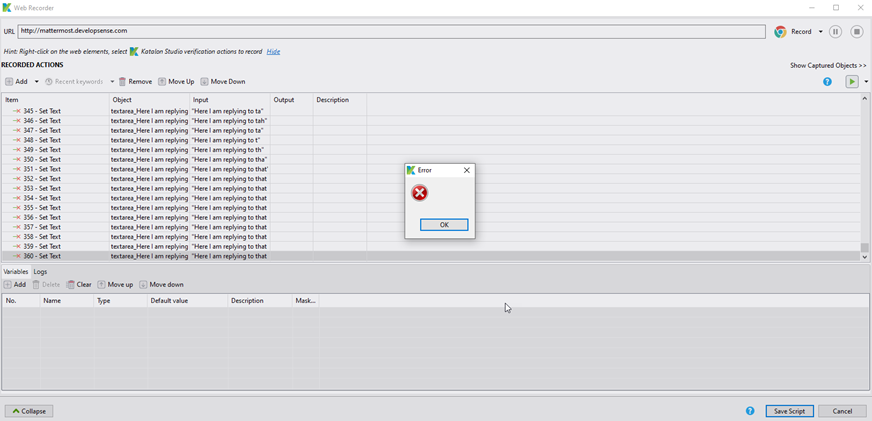

I look at what Katalon is recording. It seems as though the recording process is not setting things up for Katalon to type data into input fields character by character, as a human would. It looks like the product creates a new “Set Text” step each time a character is added or deleted. That’s conjecture, but close examination of the image here suggests that that’s possible.
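To make the conjecture concrete, here is a small Python sketch of what a recording made that way might look like, and how consecutive “Set Text” steps on the same element could be collapsed into one. The `Step` structure and the target names are hypothetical; I have not inspected Katalon’s actual script format.

```python
from __future__ import annotations

from typing import NamedTuple


class Step(NamedTuple):
    action: str  # e.g. "Set Text" or "Click"
    target: str  # hypothetical identifier for the recorded element
    value: str   # text for "Set Text"; empty for other actions


def collapse_set_text(steps: list[Step]) -> list[Step]:
    """Merge runs of "Set Text" on the same target, keeping only the last value."""
    collapsed: list[Step] = []
    for step in steps:
        previous = collapsed[-1] if collapsed else None
        if (
            previous is not None
            and step.action == "Set Text"
            and previous.action == "Set Text"
            and previous.target == step.target
        ):
            collapsed[-1] = step  # the later value supersedes the earlier one
        else:
            collapsed.append(step)
    return collapsed


# Typing "hi" with one typo and one backspace, then clicking a button.
recorded = [
    Step("Set Text", "message_box", "h"),
    Step("Set Text", "message_box", "hu"),
    Step("Set Text", "message_box", "h"),
    Step("Set Text", "message_box", "hi"),
    Step("Click", "send_button", ""),
]
tidy = collapse_set_text(recorded)
```

Note what the collapsed version throws away: the typo and the backspace. Neither form types characters one at a time, so neither exercises the application’s keystroke handling the way a person would.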

Two things: First, despite what vendors claim, “test automation” tools don’t do things just the way humans do. They simulate user behaviours, and the simulation can make the product under test behave in ways dramatically different from real circumstances.

Second, my impression is that Katalon’s approach to recording and displaying the input would make editing a long sequence of actions something of a nightmare. Further investigation is warranted.

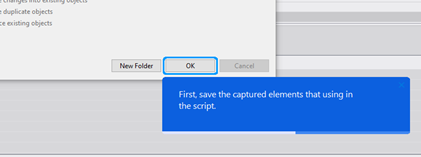

Upon ending the recording activity, I was presented with the instruction “First, save the captured elements that using in the script.” (Bug 6.)

This is a cosmetic and inconsequential problem in terms of operating the product, of course. It’s troubling, though, because it is inconsistent with an image that the company would probably prefer to project. What feeling do you get when a product from a testing tools company shows signs of missing obvious, shallow bugs right out of the box? For me, the feeling is suspicion; I worry that the company might be missing deeper problems too.

This also reminds me of a key problem with automated checking: while it accelerates the pressing of keys, it also intensifies our capacity to miss things that happen right in front of our eyes… because machinery doesn’t have eyes at all.

There’s a common trope about human testers being slow and error prone. Machinery is fast at mashing virtual keys on virtual keyboards. It’s infinitely slow at recognizing problems it hasn’t been programmed to recognize. It’s infinitely slow at describing problems unless it has been programmed to do so.

Machinery doesn’t eliminate human error; it reduces our tendency towards some kinds of errors and increases the tendency for other kinds.

Upon saving the script, the product presents an error dialog with no content. Then the product hangs with everything disabled, including the OK button on the error dialog. (Bug 7.) The only onscreen control still available is the Close button.

After clicking the Close button and restarting the product, I find that all of my data has been lost. (Bug 8.)

Pause: my feelings and intuition are already suggesting that the recorder part of the product, at least, is unstable. I’ve not been pushing it very hard, nor for very long, but I’ve seen several bugs and one crash. I’ve lost the script that was supposedly being recorded.

In good testing, we think critically about our feelings, but we must take them seriously. In order to do that, we follow up on them.

Perhaps the product simply can’t handle something about the way Mattermost processes input. I have no reason to believe that Mattermost is exceptional. To test that idea, I try a very simple program, UI-wise: the Pattern exercise from our Rapid Software Testing class.

The Pattern program is a little puzzle implemented as a very simple Web page. The challenge for the tester is to determine and describe patterns of text strings that match a pattern encoded in the program. The user types input into a textbox element, and then presses Enter or clicks on the Submit button. The back end determines whether the input matches the pattern, and returns a result; then the front end logs the outcome.

I type three strings into the field, each followed by the Enter key. As the video here shows, the application receives the input and displays it. Then I type one more string into the field, and click on the Submit button. Katalon Recorder fails to record any of the three strings that were submitted via the Enter key, losing all of that data! (Bug 9.)

Here’s a video recording of that experience:

The whole premise of a record-and-playback tool is to record user behaviour and play it back. Submitting Web form input via the Enter key is perfectly common and naturalistic user behaviour, and it doesn’t get recorded.

The workaround for this is for the tester to use the mouse to submit input. At that, though, Katalon Recorder will condition testers to interact with the product being tested in a way that does not reflect real-world use.

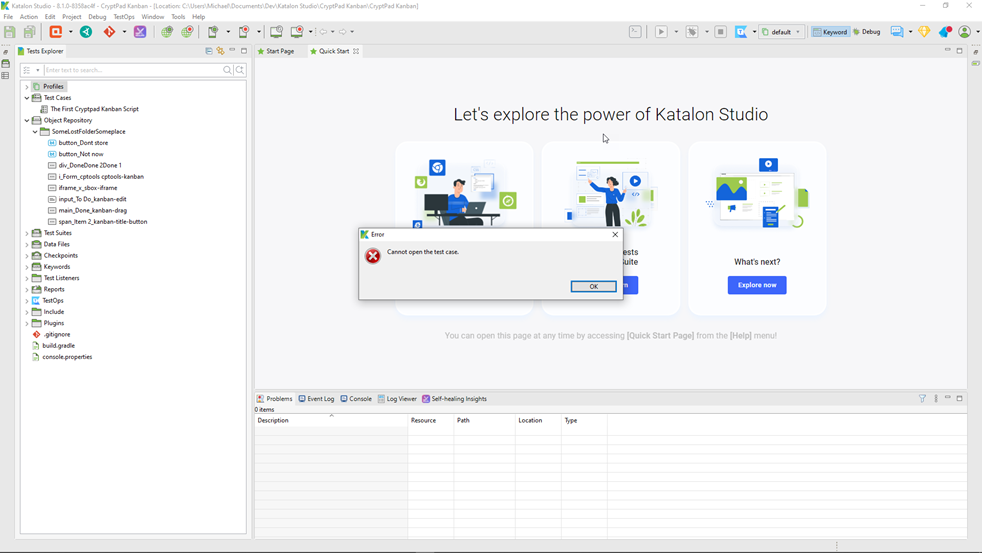

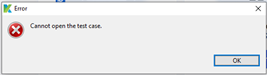

I saved the “test case”, and then closed Katalon Studio to do some other work. When I returned and tried to reopen the file, Katalon Studio posted a dialog “Cannot open the test case.” (Bug 10.)

To zoom that up…

No information is provided other than the statement “Cannot open the test case”. Oh well; at least it’s an improvement over Bug 7, in which the error dialog contained nothing at all.

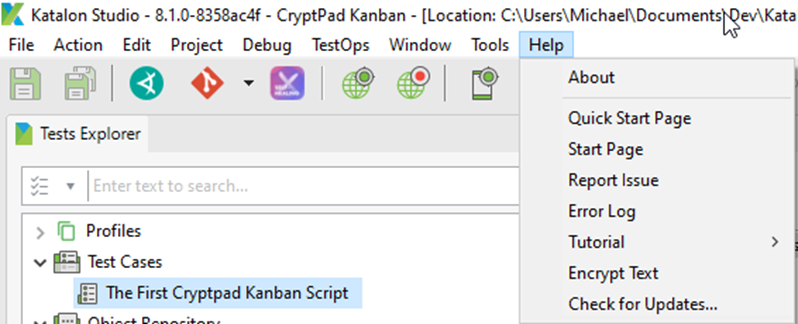

I was interested in troubleshooting the Enter key problem. There is no product-specific Help option under the Help menu. (Bug 11.)

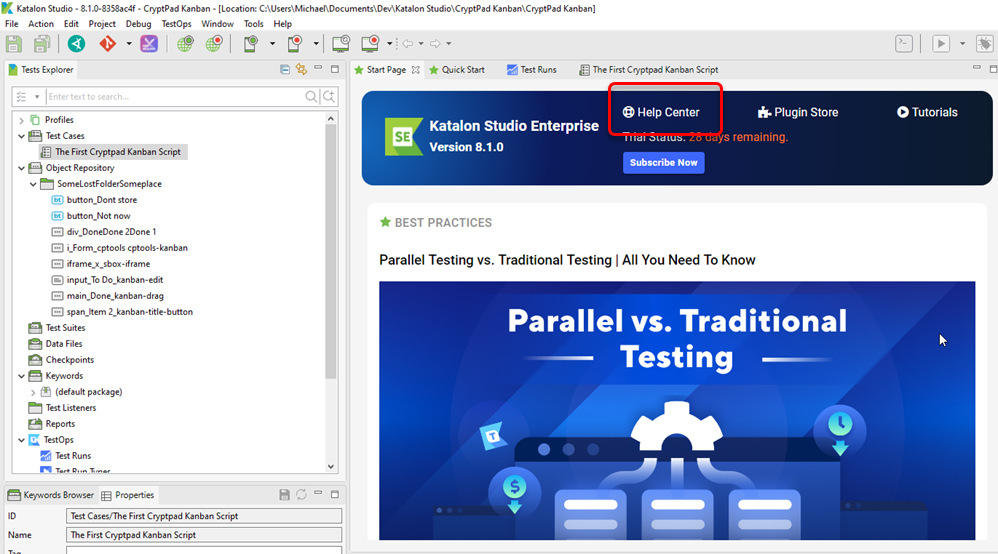

Clicking on “Start Page” produces a page in the main client window that offers “Help Center” as a link.

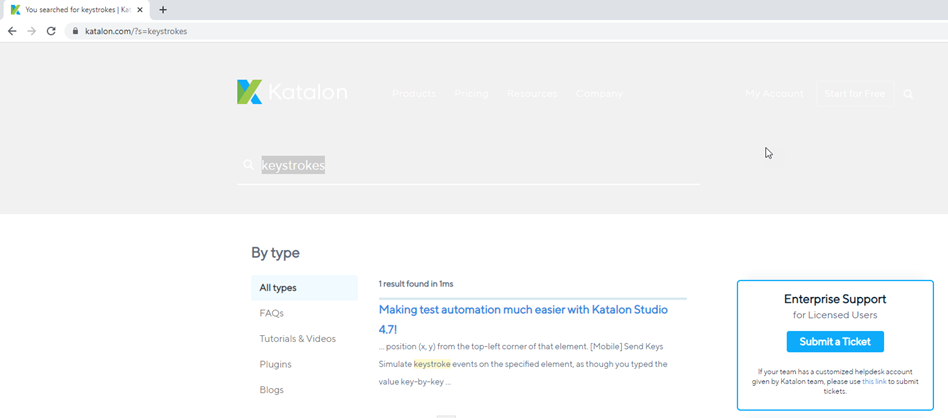

Clicking on that link doesn’t take me to documentation for this product, though. It takes me to the Katalon Help Center page. In the Help Center, I encounter a page where the search field looks like… white text on an almost-white background. (Bug 12.)

In the image, I’ve highlighted the search text (“keystrokes”). If I hadn’t done that, you’d hardly be able to see the text at all. Try reading the text next to the graphic of the stylized “K”.

I bring this sort of problem up in testing classes as the kind of problem that can be missed by checks of functional correctness. People often dismiss it as implausible, but… here it is. (Update, 2021/11/04: I do not observe this behaviour today.)

It takes some exploration to find actual help for the product (https://docs.katalon.com/katalon-studio/docs/overview.html). (Again, Bug 11.)

From there it takes more exploration to find the reference to the WebUI SendKeys function. When I get there, there’s an example, but not of appropriate syntax for sending the Enter key, and no listing of the supported keys and how to specify them. In general, the documentation seems pretty poor. (I have not recorded a specific bug for this.)

This is part of The Secret Life of Automation. Products like Katalon are typically documented to the bare minimum, apparently on the assumption that the user of the product has the same tacit knowledge as the builders of the product. That tacit knowledge may get developed with effort and time, or the tester may simply decide to take workarounds (like preferring button clicks to keyboard actions, or vice versa) so that the checks can be run at all.

These products are touted as “easy to use”, and they often are if you use them in ways that follow the assumptions of the people who create them. If you deviate from the builders’ preconceptions, though, or if your product isn’t just like the tool vendors’ sample apps, things start to get complicated in a hurry. The demo giveth, and the real world taketh away.

I turned the tool to record a session of simple actions with CryptPad Kanban (http://cryptpad.fr/kanban). I tried to enter a few kanban cards, and closed the recorder.

Playback stumbled on adding a new card, apparently because the ids for new card elements are dynamically generated.

At this point, Katalon’s “self-healing” functions began to kick in. Those functions were unsuccessful, and the process failed to complete. When I looked at the log output for the process, “self-healing” appeared to consist of retrying an XPath search for the added card over and over again.

To put it simply, “self-healing” doesn’t self-heal. (See Bug 13.)
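For contrast, here is a minimal Python sketch of what I would loosely expect from something called “self-healing”: trying a series of different locators rather than repeating the one that has already failed. This is my own illustration, not a description of how Katalon works; the `find` callable and the locator strings are hypothetical stand-ins, and the sample markup is invented rather than taken from CryptPad.

```python
from __future__ import annotations

from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def find_with_fallbacks(
    find: Callable[[str], Optional[T]],
    locators: Iterable[str],
) -> tuple[Optional[T], list[str]]:
    """Try each locator once, in order; return the first hit and what was tried."""
    tried: list[str] = []
    for locator in locators:
        tried.append(locator)
        element = find(locator)
        if element is not None:
            return element, tried
    return None, tried


# A stand-in for a page: only the structural locator matches, because the
# recorded id was generated dynamically and no longer exists.
fake_page = {"//div[@class='card'][last()]": "<new card>"}
element, tried = find_with_fallbacks(
    fake_page.get,
    ["//*[@id='card-1234']", "//div[@class='card'][last()]"],
)
```

Returning the list of locators that were tried matters as much as the element itself: a tester needs to know when a check passed only because the tool quietly switched to a different way of finding things.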

The log output for the test case appears in a non-editable, non-copiable window, making it difficult to process and analyze. This is inconsistent with the facility available in the Help / About / System Configuration dialog, which allows copying and saving to a file. (See Bug 14.)

At this point, having spent an hour or so on testing, I stop.

Follow-up Work, November 3

I went to the location of katalon.exe and experimented with the command-line parameters.

As a matter of fact, no parameters to katalon.exe are documented; nor does the product respond to /?, -h, or --help. (See Bug 5.)

On startup the program creates a .metadata\.log file (no filename; just an extension) beneath the data folder. In that .log file I notice a number of messages that don’t look good: three instances of “Could not find element”; warnings for missing NLS messages; a warning to initialize the log4j system properly; and several messages related to key binding conflicts for several key combinations (“Keybinding conflicts occurred. They may interfere with normal accelerator operation.”). This bears further investigation some day.
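When that investigation happens, a quick tally of the suspicious messages would be a reasonable first step. Here is a minimal Python sketch; the search phrases come from the messages noted above, while the labels and the function name are my own, and I am assuming nothing about the log’s format beyond its being line-oriented text.

```python
import re
from collections import Counter

# Phrases taken from the messages noticed in the log; the labels are mine.
PATTERNS = {
    "missing element": re.compile(r"Could not find element"),
    "keybinding conflict": re.compile(r"Keybinding conflicts occurred"),
    "log4j": re.compile(r"log4j", re.IGNORECASE),
    "NLS": re.compile(r"\bNLS\b"),
}


def tally(lines):
    """Count how many lines match each pattern; a line may match several."""
    counts = Counter()
    for line in lines:
        for label, pattern in PATTERNS.items():
            if pattern.search(line):
                counts[label] += 1
    return counts
```

Because `tally` accepts any iterable of strings, it can be fed an open file handle directly, for example one opened on the .log file with `encoding="utf-8", errors="replace"`. A count only says how often something happened, of course; deciding whether it matters still takes a person reading the surrounding lines.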

Bug Summaries

Bug 1: There is no installation program and no instructions on where to put the product upon downloading it. Moreover, the installation guide at https://docs.katalon.com/katalon-studio/docs/getting-started.html#start-katalon-studio does not identify any particular location for the product. Inconsistent with usability, inconsistent with comparable products, inconsistent with installability, inconsistent with acceptable quality.

Bug 2: Product crashes when run from the \Program Files\Katalon folder. This is due to Bug 1.

Bug 3: After the crash in Bug 2, the error dialog neither offers a way to open the log file directly nor provides a convenient way to copy its location. Inconsistent with usability.

Bug 4: Undocumented parameter -data to katalon.exe

Bug 5: Command-line help for katalon.exe does not list available command-line parameters.

Bug 6: Sloppy language in the “Creating Your First Script” introductory process: “First, save the captured elements that using in the script.” Inconsistent with image.

Bug 7: Hang upon saving my first script in the tutorial process, including an error dialog with no data whatsoever; only an OK button. Inconsistent with capability, inconsistent with reliability.

Bug 8: Loss of all recorded data for the session after closing the product after Bug 7. Inconsistent with reliability.

Bug 9: Katalon Studio’s recorder fails to record text input if it ends with an Enter key. The application under test accepts the Enter key fine. Inconsistent with purpose, inconsistent with usability.

Bug 10: Having saved a “test case” associated with Bug 9, closing the product, and then returning, Katalon Studio claims that it “cannot open the test case”. Inconsistent with reliability.

Bug 11: There is no product-specific “Help” entry under the main menu’s Help selection. Inconsistent with usability.

Bug 12: Search UI at katalon.com/s=keystrokes displays in white text on an almost-white background. Inconsistent with usability. (Possibly fixed at some point; on 2021/11/04, I did not observe this behaviour.)

Bug 13: “Self-healing” is ineffective, consisting of repeatedly trying the same XPath-based approach to selecting an element that is not the same as the recorded one. Inconsistent with purpose.

Bug 14: Output data for a test case appears in a non-editable, non-copiable window, making it difficult to process and analyze. Inconsistent with usability, inconsistent with purpose. This is also inconsistent with the Help / About / System Configuration dialog, which allows both copying to the clipboard and saving to a file.

[…] Report: Katalon Experience Report by Michael Bolton […]

I totally agree.

Katalon has a lot of potential; however, it’s full of bugs.

One of the really great features is the switching between (behind)code and more drag-and-drop view… (building of testcases)

However, when you talk to the support guys they say: please don’t do that. It causes all kinds of problems.

And so I noticed. The set gets corrupted. It’s possible to repair it though… but with a couple of really weird chronological steps, and that info is hard to get.

so… yeah.

I evaluated Katalon in the summer of 2023. I don’t recall it crashing on me, and I believe I had a rep on the line during installation, which is probably why I did not have an issue with installation. However, I did encounter issues with object naming during recording, as similar objects with slightly different attributes were assigned to the same object name and overwrote the object-identifying properties. I recall fixing this by changing the default object attribute to capture.