Warning: this is a long post.

Introduction

This is an experience report of attempting to perform a session of sympathetic survey and sanity testing, done in September 2021, with follow-up work October 13-15, 2021. The product being tested is mabl. My self-assigned charter was to perform survey testing of mabl, based on performing a basic task with the product. The task was to automate a simple set of steps, using mabl’s Trainer and test runner mechanism.

I will include some meta-notes about the testing in indented text like this.

The general mission of survey testing is learning about the design, purposes, testability, and possibilities of the product. Survey testing tends to be spontaneous, open, playful, and relatively shallow. It provides a foundation for effective, efficient, deliberative, deep testing later on.

Sanity testing might also be called “smoke testing”, “quick testing”, or “build verification testing”. It’s brief, shallow testing to determine whether the product is fit for deeper testing, or whether it has immediately obvious or dramatic problems.

The idea behind sympathetic testing is not to find bugs, but to exercise a product’s features in a relatively non-challenging way.

Summary

mabl’s Trainer and test runner show significant unreliability for recording and playback of very simple, basic tasks in Mattermost, a popular open-source Slack-like chat system with a Web client. I intended to survey other elements of mabl, but attempting these simple tasks (which took approximately three minutes and forty seconds to perform without mabl) triggered a torrent of bugs that has taken (so far) approximately ten hours to investigate and document to this degree.

There are many other bugs over which I stumbled that are not included in this report; the number of problems that I was encountering in this part of the product overwhelmed my ability to stay focused and organized.

A note on the term “bug”: in the Rapid Software Testing namespace, a bug is anything about the product that threatens its value to some person who matters. A little less formally, a bug is something that bugs someone who matters.

From this perspective, a bug is not necessarily a coding error, nor a “broken” feature. A bug is something that represents a problem for someone. Note also that “bug” is subjective; the mabl people could easily declare that something is not a bug on the implicit assumption that my perception of a bug doesn’t matter to them. However, I get to declare that what I see bugs me.

The bugs that I am reporting here are, in my opinion, serious problems for a testing tool—even one intended for shallow, repetitive, and mostly unhelpful rote checks. Many of the bugs considered alone would destroy mabl’s usefulness to me, and would undermine the quality of my testing work. Yet these bugs are also very shallow; they were apparent in attempts to record, play back, and analyze a simple procedure, with no intention to provide difficult challenges to mabl’s Trainer and runner features.

It is my opinion that mabl itself has not been competently and thoroughly tested against products that would present a challenge to the Trainer or runner features; or if it has, its product management has either ignored or decided not to address the problems that I am reporting here.

I have not yet completed the initial charter of performing a systematic survey of these features. This is because my attempt to do so was completely swamped by the effort required to record the bugs I was finding, and to record the additional bugs that I found while recording and investigating the initial bugs.

From one perspective, this could be seen as a deficiency in my testing. From another (and, I would argue, more reasonable) perspective, the experience that I have had so far would suggest at least two next steps if I were working for a client, depending on my client and my client’s purposes.

One next step might be to revisit the product and map out strategies for deeper testing. Another might be to decide that the survey cannot be completed efficiently right now and is not warranted until these problems are addressed. Of course, since I’m my own client here, I get to decide: I’m preparing a detailed report of bugs found in an attempt at sympathetic testing, and I’ll leave it at that.

mabl claims to “Improve reliability and reduce maintenance with the help of AI powered test automation”. This claim might bear investigation.

What is being used as training sets for the models, and where does the data come from? Is my data being used to train machine learning models for the applications I’m testing? If it’s only mine, is the data set large enough? Or is my data being used to develop ML models for other people’s applications? If “AI” is being used to find bugs or for “self-healing”, how does the “AI” comprehend the difference between “problem” and “no problem”? And is the “AI” component being tested critically and thoroughly? These are matters for another day.

Setup and Platform

On its web site, mabl claims to provide “intelligent test automation for Agile teams”. The company also claims that you can “easily create reliable end-to-end tests that improve application quality without slowing you down.”

The claim about improving application quality is in trouble from the get-go. Neither testing nor tests improve application quality. Testing may reveal aspects of application quality, but until someone does something in response to the test report, application quality stays exactly where it is. As for the ease of creating tests… well, that’s what this report is about.

I registered an account to start a free trial, and downloaded v 1.2.2 of the product. (Between September 19 and October 13, the installer indicated that the product had been updated to version 1.3.5. Some of these notes refer to my first round of testing. Others represent updates as I revisited the product and its site to reproduce the problems I found, and to prepare this report. If the results between the two versions differ, I will point out the differences.)

I ran these tests on a Windows 10 system, using only Chrome as the browser. As of this writing, I am using Chrome v.94.

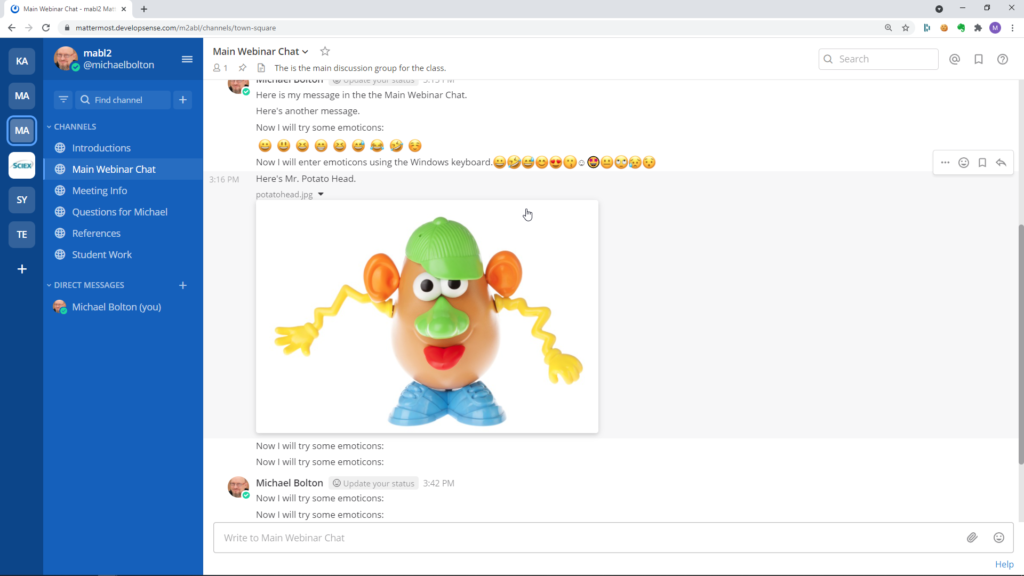

To play the role of product under test, I chose Mattermost. Mattermost is an open-source online communication tool, similar to Slack, that we use in our Rapid Software Testing classes; it provides both a desktop and a web-based client. As with Slack, you can be a member of different Mattermost “teams”, so I set up a team with a number of channels specifically for my work with mabl.

Testing Notes

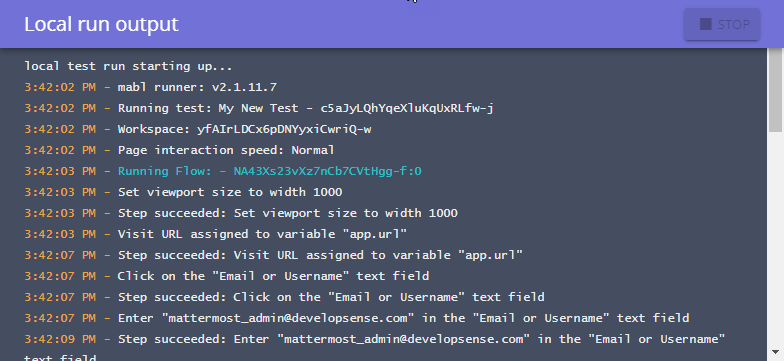

I started the mabl trainer, and chose to begin a Web test, in mabl’s parlance. (A test is more than a series of recorded actions.) mabl launched a browser window that defaulted to 1000 pixels. I navigated to the Mattermost Web client. I entered a couple of lines of ordinary plain text, which was accepted by Mattermost, and which the mabl Trainer appeared to record.

I then entered some emoticons using Mattermost’s pop-up interface; the mabl Trainer appeared to record these, too. I used the Windows on-screen keyboard to enter some more emoticons.

Then I chose a graphic, uploaded it, and provided some accompanying text that appears just above the graphic.

I’m getting older, and I work far enough away from my monitors that I like my browser windows big, so that I can see what’s going on. Before ending the recording, I experimented a little with resizing the browser window. In my first few runs with v 1.2.2, this caused a major freakout for the trainer, which repeatedly flashed a popup that said “Maximizing Trainer” and looped endlessly until I terminated mabl.

(In version 1.3.5, it was possible to try to maximize the browser window, but the training window stubbornly appeared to the right of the browser, even if I tried to drag it to another screen.) (See Bugs 1 and 2 below.)

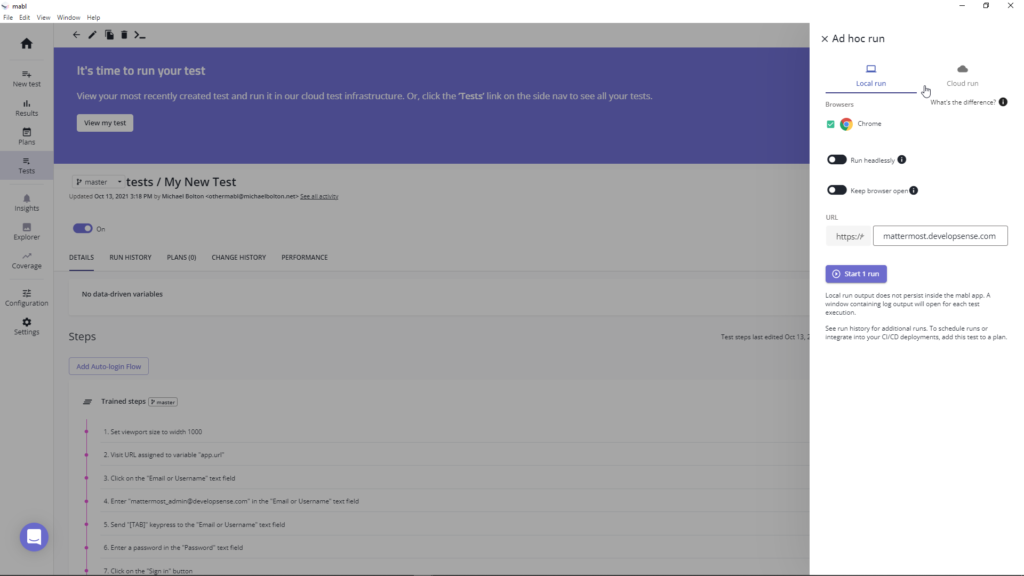

I pressed “Close” on the Trainer window, and mabl prompted me to run the test. I chose Local Run, and pressed the “Start 1 run” button at the bottom of the “Ad hoc run” panel.

A “Local Run Output” window appeared. mabl launched the browser in a way that covered the Local Run Output window; an annoyance. mabl appeared to log into Mattermost successfully. The tool simulated a click on the appropriate team, and landed in that team at the top of the default channel. This is odd, because normally, Mattermost takes me to the end of the default channel. And then… nothing seemed to happen.

Whatever mabl was doing was happening below the level of visible input. (Later investigation shows that mabl, silently and by default, sets the height of the viewport much larger than the height of the browser window and the height of my screen.) (See Bug 3 below.)

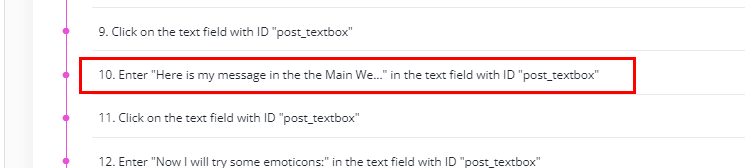

When I looked at the Mattermost instance in another window, it was apparent that mabl had failed to enter the first line of text that I had entered, even though the step is clearly listed:

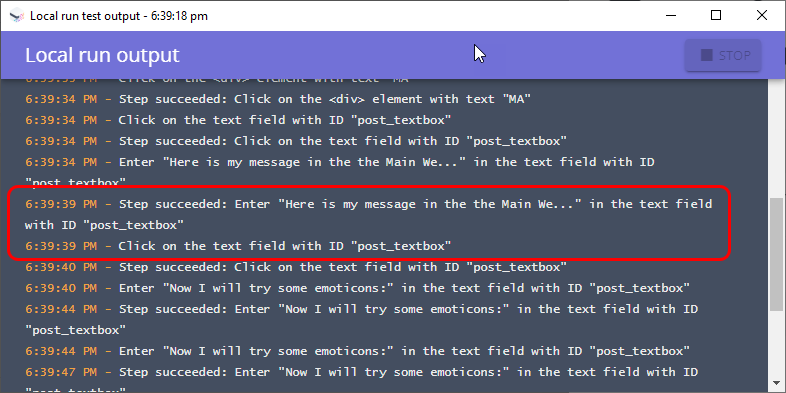

Yet the Local run output window suggested that the text had been entered successfully.

mabl failed to enter most subsequent text entries, too. Upon investigation, it appears that the runner does type the body of the recorded text into the textbox element. After that, though, either the mabl Trainer does not record the Enter key to send the message, or the runner doesn’t simulate the playback of that key.

The consequence is that when it comes time to enter another line of text, mabl simply replaces the contents of the textbox element with the new line of text, and the previous line is lost.
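To make that consequence concrete, here is a toy model in Python. It is not mabl’s code and not Mattermost’s; `ChatTextbox`, `set_text`, `press_enter`, and `replay` are hypothetical names. The model assumes only what I observed: that playback replaces the contents of the textbox with each recorded line, and that a message is posted only when an Enter keypress arrives.

```python
class ChatTextbox:
    """Toy model of a chat input box (hypothetical; not mabl's or Mattermost's code)."""

    def __init__(self) -> None:
        self.draft = ""   # text currently sitting in the box, not yet posted
        self.posts = []   # messages that have actually been submitted

    def set_text(self, text: str) -> None:
        # Assumption: playback replaces the box contents rather than appending.
        self.draft = text

    def press_enter(self) -> None:
        # Enter submits the draft as a post and empties the box.
        if self.draft:
            self.posts.append(self.draft)
        self.draft = ""


def replay(lines, send_enter: bool) -> ChatTextbox:
    """Play back recorded lines, optionally sending Enter after each one."""
    box = ChatTextbox()
    for line in lines:
        box.set_text(line)
        if send_enter:
            box.press_enter()
    return box


recorded = ["First line of text", "Second line of text"]
print(replay(recorded, send_enter=True).posts)
# ['First line of text', 'Second line of text']
lost = replay(recorded, send_enter=False)
print(lost.posts, repr(lost.draft))
# [] 'Second line of text'
```

Without the Enter keypress, nothing is ever posted, and only the last line survives, sitting unsent in the box.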

Ending entries in this text element with Ctrl-Enter provides a workaround to this behaviour, but that’s not the normal means for submitting a post in Mattermost. The Enter key on its own should do the trick.

More investigation revealed that this behaviour is the same whether the procedure is run locally or in the cloud. (See Bug 4 below.)

Many record-and-playback tools claim to simulate user behaviour. It is crucial to remember that human users enter data in one way—via mechanisms like keyboards, mice, touch pads, drawing tablets, etc.—and almost all playback tools use different means, in the form of software interfaces. The differences between input mechanisms are often ignored, but they can be significant.

Moreover, different playback tools use different approaches to simulate user input. Often these approaches throw away elements of user behaviours such as backspacing, pasting, copying, or deleting blocks of text, and submit only the edited string for processing. Such simulations will systematically miss problems that happen in real usage.
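Here is a minimal Python sketch of that kind of reduction, assuming a tool that replays only the final edited string. The `collapse` function and the `BACKSPACE` marker are hypothetical illustrations, not any particular tool’s API. Two quite different sequences of key events end up as the same submitted string, so any behaviour that depends on the events themselves (a typo followed by a correction, say) never gets exercised.

```python
BACKSPACE = "\b"  # hypothetical marker for a Backspace key event


def collapse(keystrokes):
    """Reduce a sequence of key events to the final edited string."""
    buffer = []
    for key in keystrokes:
        if key == BACKSPACE:
            if buffer:
                buffer.pop()  # Backspace removes the last character, if any
        else:
            buffer.append(key)
    return "".join(buffer)


typo_then_fix = list("helo") + [BACKSPACE] + list("lo")  # 7 key events
clean = list("hello")                                    # 5 key events

print(collapse(typo_then_fix), collapse(clean))  # hello hello
print(len(typo_then_fix), len(clean))            # 7 5
```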

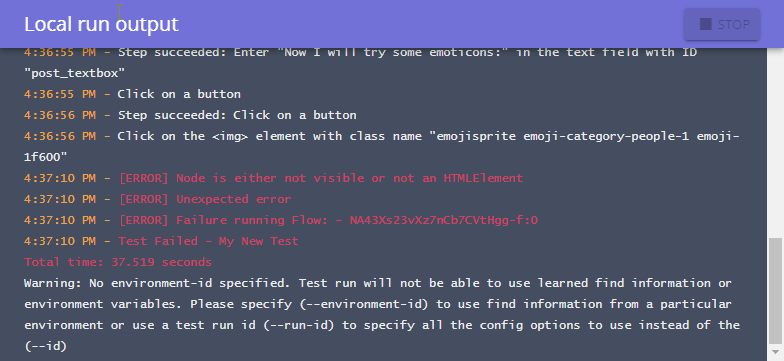

Upon trying to play back the typing of the emojis, mabl apparently became confused by Mattermost’s emoji popup. The log indicated that the application attempted to locate a specific element three times, then concluded that the element could not be found, whereupon the entire procedure errored out for good. The controls that mabl is seeking according to the recorded steps in the test are plainly accessible via the developer tools. All this seems inconsistent with mabl’s claims of “auto-healing”. (See Bugs 5 and 6 below.)

In these screenshots, some timestamps in the logs may appear out of sequence relative to this narrative. Some screenshots you’re seeing here are of repro instances, rather than of what happened the first time through. This is because I was encountering so many bugs while testing that my capacity to record them properly became overwhelmed, and I had to return for analysis later.

The phenomenon of being swamped (or swarmed) by bugs like this is something we call a bug cascade in Rapid Software Testing. In my rough notes for my first run of this session, I observe “I should have been recording a video of all this.” It can be very useful to have a narrated video recording for later review.

I examined the “Local run output” window more closely and observed a number of problems. On this run and others, the listing claims to have entered text successfully when that text never appears in the application under test. Only the first 37 characters of the text entered by the runner appear in the log.
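The elision appears to follow a simple rule. Here is a hypothetical reconstruction in Python; the function `elide` and the sample strings are my own illustration, and I’m assuming the ellipsis is three periods, which would give the 40-character total described in Bug 15 below. The point is that two different entries can look identical in the log.

```python
def elide(text: str, limit: int = 37) -> str:
    """Hypothetical display rule: first `limit` characters plus "..." if longer."""
    if len(text) <= limit:
        return text
    return text[:limit] + "..."


a = "This is a fairly long line of text that ends one way"
b = "This is a fairly long line of text that ends another way"

print(elide(a))              # This is a fairly long line of text th...
print(elide(a) == elide(b))  # True, although a != b
print(len(elide(a)))         # 40
```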

The local log contains time stamps, but not date stamps, and those time stamps are recorded in AM/PM format. Both of these are inconvenient for analysing the log files with tool support. There appears to be no mechanism for saving a file from the Local Run Output window. (See Bugs 7, 8, 9, 10, and 11 below.)
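A small Python illustration of the sorting problem follows. The time stamps are made up, and the conversion assumes the default C locale, in which `%p` matches AM and PM. Sorted as text, AM/PM times come out in the wrong order; converted to 24-hour form, they sort correctly within a single day. Without dates, though, even converted stamps cannot be ordered across midnight or across days.

```python
from datetime import datetime

# Made-up time stamps in the same AM/PM style as the "Local run output" window.
stamps = ["11:59:58 AM", "12:00:03 PM", "1:15:00 PM", "9:30:00 AM"]

# Sorting the raw strings compares characters, not times.
print(sorted(stamps))
# ['11:59:58 AM', '12:00:03 PM', '1:15:00 PM', '9:30:00 AM']


def to_24h(stamp: str) -> str:
    """Convert '1:15:00 PM' to '13:15:00' (assumes the C locale for %p)."""
    return datetime.strptime(stamp, "%I:%M:%S %p").strftime("%H:%M:%S")


print(sorted(to_24h(s) for s in stamps))
# ['09:30:00', '11:59:58', '12:00:03', '13:15:00']
```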

I looked for a means of looking at past runs from the desktop in various places in the mabl desktop client. I could not find one. (See Bug 12 below.)

Using Everything (a very useful tool that affords more or less instantaneous search for all files on the system) I also looked for log files associated with individual test runs. I could not find any.

Everything (the product) quickly helped me to find mabl’s own application log (mablApp.log), and this did contain some data about the steps. Oddly, the runner data in mablApp.log is formatted in a more useful way than in the “Local run output” window. Local runs did not seem to record screen shots, either.

This was all pretty confusing at first, but later research revealed this: “Note that test artifacts – such as DOM snapshots or screenshots – are not captured from local runs. The mabl execution logs and final result are displayed in a separate window.” (https://help.mabl.com/docs/local-runs-in-the-desktop-app) That might be bad enough—but no run-specific local logs at all?

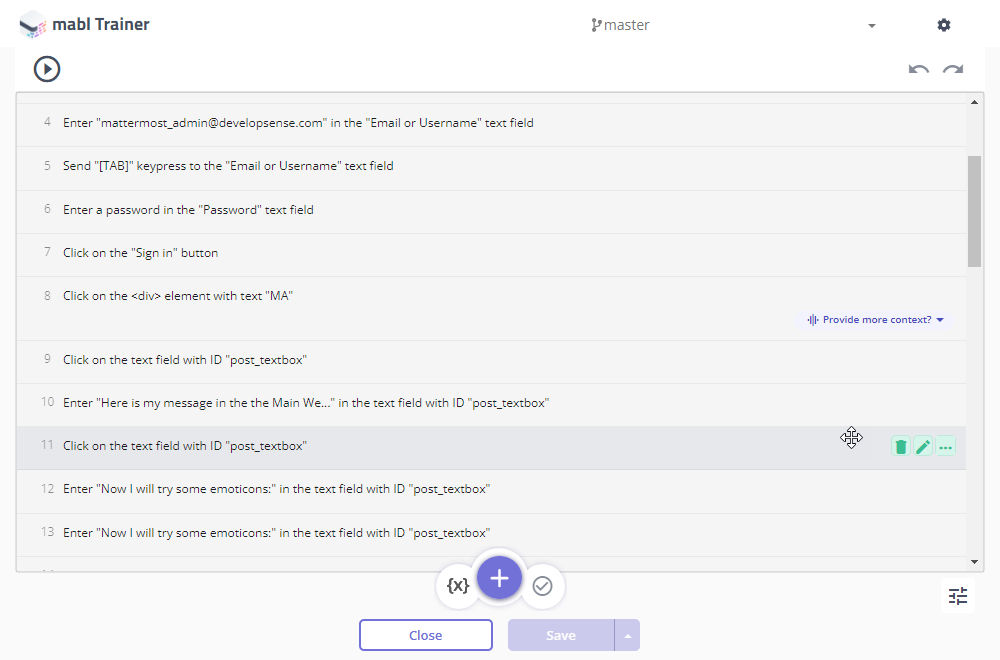

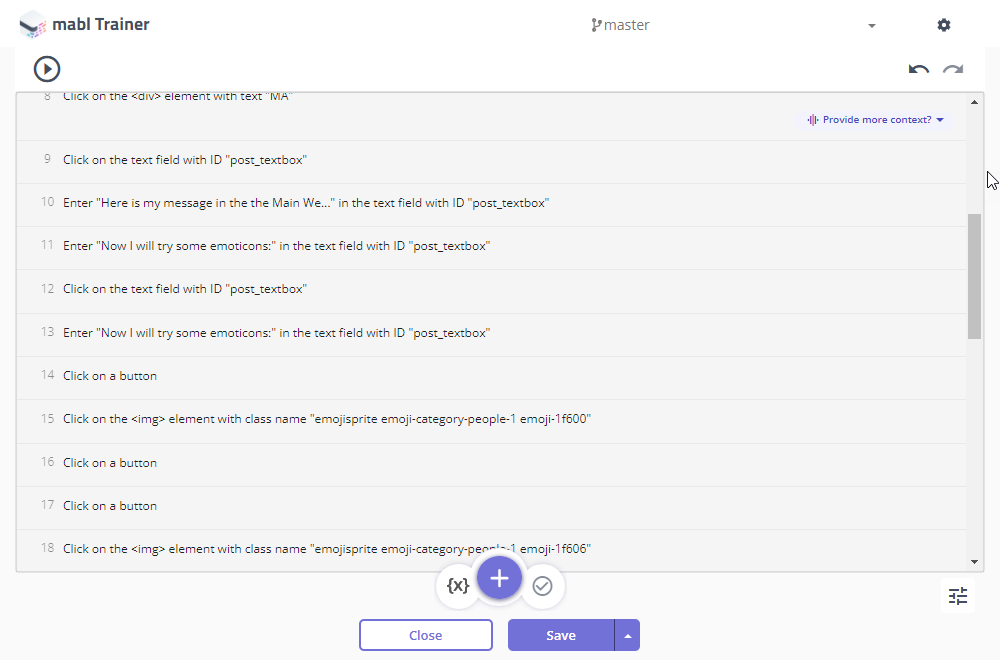

In order to try to troubleshoot the problems that I was experiencing with entering text, I looked at the Tests listings, and chose My New Test. This took me to an untitled window that lists the steps for the test (I will call this the “Test Steps View”).

Scanning the list of steps, I observed the second step was “Visit URL assigned to variable ‘app.url’.” This is factually correct, but unhelpful; how does one find the value of the variable? There is no indication of what that URL might be or how to find it conveniently. Indeed, the screen suggests that there are “no data-driven variables”—which seems false.

(Later investigation revealed that if I chose Edit Steps, then chose Quick Edit, then chose the URL to train against, and then chose the step “Visit URL assigned to variable ‘app.url'”, I could see a preview of the value. How about a display of the variable in the steps listing? A tooltip?) (See Bug 13 below.)

I examined the step that appeared to be failing to enter text. The text that I originally typed into the Mattermost window was not displayed in full, even though there’s plenty of space available for it in the window for the Test Steps View. (See Bug 14 below.) This behaviour is inconsistent with my ability to explain it, and it’s inconsistent with an implicit purpose of the Test Steps View (the ability to troubleshoot test steps easily). However, it is consistent with the display in the “Local run output” window and with the logging in mabl’s system log. (See Bug 15 below.)

As I noted above, further experimentation with Mattermost and with the mabl trainer showed that ending the input with Ctrl-Enter (rather than Mattermost’s default Enter) while recording allowed mabl to play back the text successfully. So, perhaps if I could edit the text entry step somehow, or if I could add a keystroke step, there would be a workaround for this problem, provided I’m willing to accept the risk that behaviours developed with the Trainer are inconsistent with the actual behaviours of the user.

In the Test Steps View, there is an “Edit Steps” dropdown, with the options “Quick Edit” and “Launch Trainer”. I clicked on Quick Edit, and a pop-up appeared immediately, confusingly, and probably unnecessarily: “Launching Trainer”.

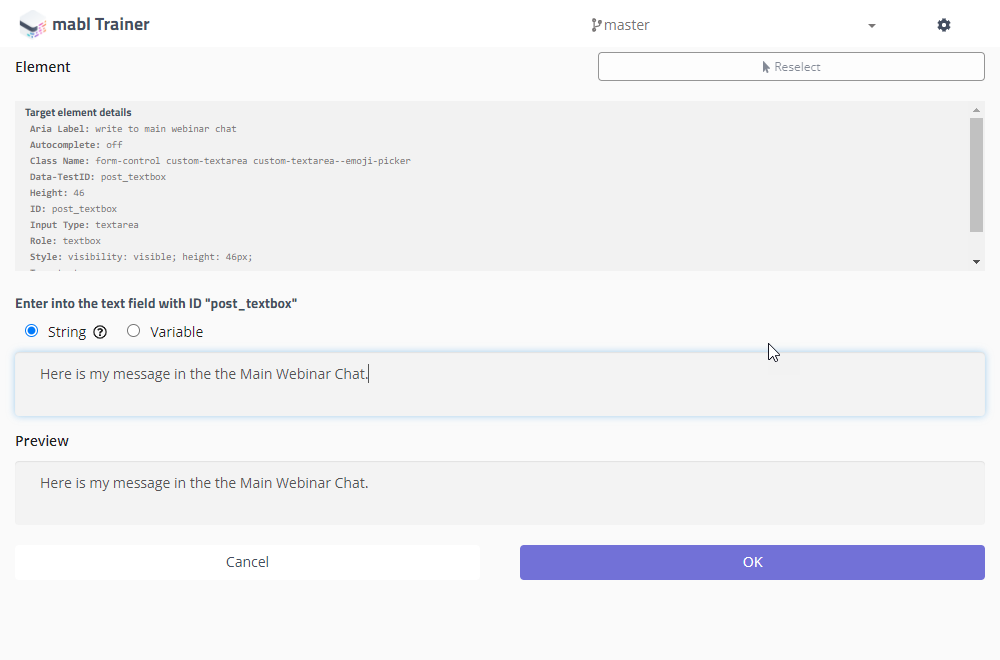

I selected the text entry step, hoping to edit some of the actions within it. Of the items that appear in the image below, note that only the input text can be edited; no other aspect of the step can be. (See Bug 16 below.)

It’s possible to send keypresses to a specific element. That capability has supposedly been available in mabl for a long time, as claimed here. Could I add an escape sequence to the text by which I could enter a specific key or key combination? If such a feature is available, it’s not documented clearly. The documentation hints that certain keys might have escape strings—“[TAB]” or “[ENTER]”. However, adding those strings to the end of the text doesn’t make the virtual keypress happen. (See Bug 17 below.)

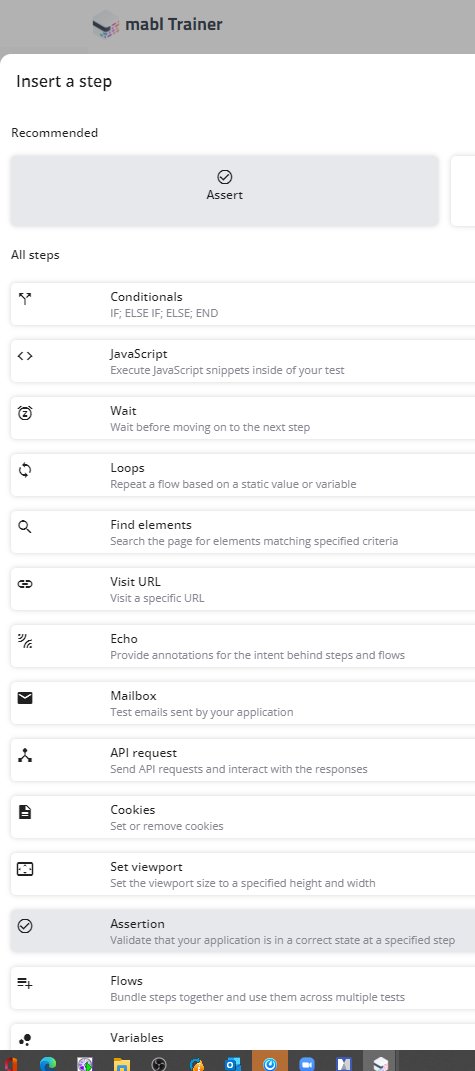

The Quick Edit window offers the opportunity to insert a step. What if I try that? I scroll down to the step that enters the text, attempt to select that step with the mouse, and press the plus key at the bottom to insert the step. A dialog appears that offers a set of possible steps. Neither entering text, nor entering keystrokes, nor clicking the mouse appears on this list. (See Bug 18 below.)

(For those wondering if input features appear beneath the visible window, “Variables” is the last item in the list.)

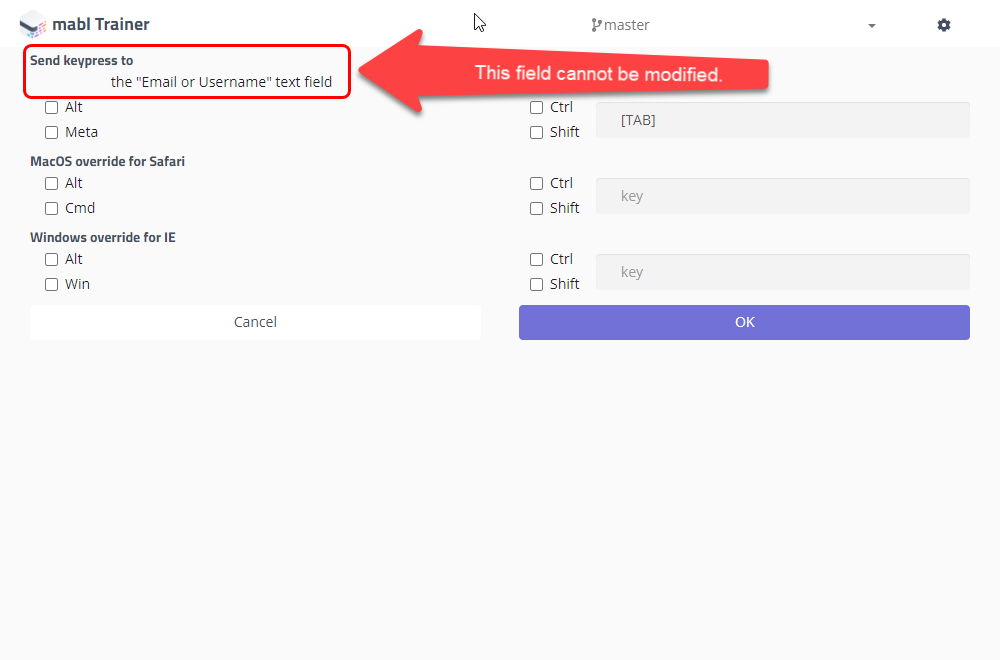

When I look at Step 5 in the Test Steps View, I see that there’s a step that sends a Tab keypress to the “Email or Username” text field. Maybe I could duplicate that step, and drag it down to the point after my text entry. Then maybe I could modify the step to point to the post_textbox element, and to send the Enter key instead of the Tab key.

Yes, I can change [TAB] to [ENTER]. But I can’t change the destination element. (See Bug 19 below.)

Documenting this is difficult and frustrating. Each means of trying to send that damned Enter key is thwarted in some exasperating and inexplicable way. For those of you of a certain age, it’s like the Cone of Silence from Get Smart (the younger folks can look it up on the Web). I’m astonished by the incapability of the product, and because of that I second-guess myself and repeat my actions over and over again to make absolutely sure I’m not missing some obvious way to accomplish my goal. The strength of my feelings at this time is a pointer to the significance of the problems I’m encountering.

I looked at some of the other steps displayed in the Test Steps View and in the Trainer. Note that steps are often described as “Click on a button” without displaying which button is being referred to, unless that button has an explicit text label. This is annoying, since human-readable information (like the aria-label attribute) is available, but I had to click on “edit” to see it. (See Bug 20 below.)

Scanning the rest of the Test Steps View, I noticed an option to download a comma-separated-value (.CSV) file; perhaps that can be viewed and edited, and then uploaded somehow. I downloaded a .CSV and looked at it. It is consistent with what is displayed in the Test Steps View, but it does not accurately reflect the behaviour that mabl is trying to perform.

Once again, the text that mabl actually tries to enter in a text field (which can be observed if you scroll to the bottom of the browser window in the middle of the test) is elided, limited to 37 characters plus an ellipsis. (See Bug 21 below.)

This would be a more serious problem if I tried to edit the script and upload it. However, no worries there, because even though you can download a .CSV file of test steps, you can’t upload one. There’s nothing in the product’s UI, and a search of the Help file for “upload” revealed no means for uploading test step files. (See Bug 22 below.)

At this point, I began to give up on entering text naturalistically and reliably using the Trainer. I wondered if there was anything that I could rescue from my original task of entering text, throwing in some emojis, and uploading a file. I edited out the test steps over which mabl stumbled in order to get to the file upload. The test proceeded, but the file didn’t get uploaded. Perhaps this is because the Trainer doesn’t keep track of where the file came from on the local system. (See Bug 23 below.)

At this point, my energy for continuing with this report is flagging. Investigating and reporting bugs takes time, and when there are this many problems, it’s disheartening and exhausting. I’m also worried about this getting boring to read. This post probably still has several typos. I have left many bugs that I encountered out of the narrative here, but a handful of them appear below. I left many more undocumented and uninvestigated. (See Bugs 24, 25, 26, and 27 below.)

There is much more to mabl. Those aspects may be wonderful, or terrible. I don’t know, because I have not examined them in any detail, but I strongly suspect lots of further trouble. Here’s an example:

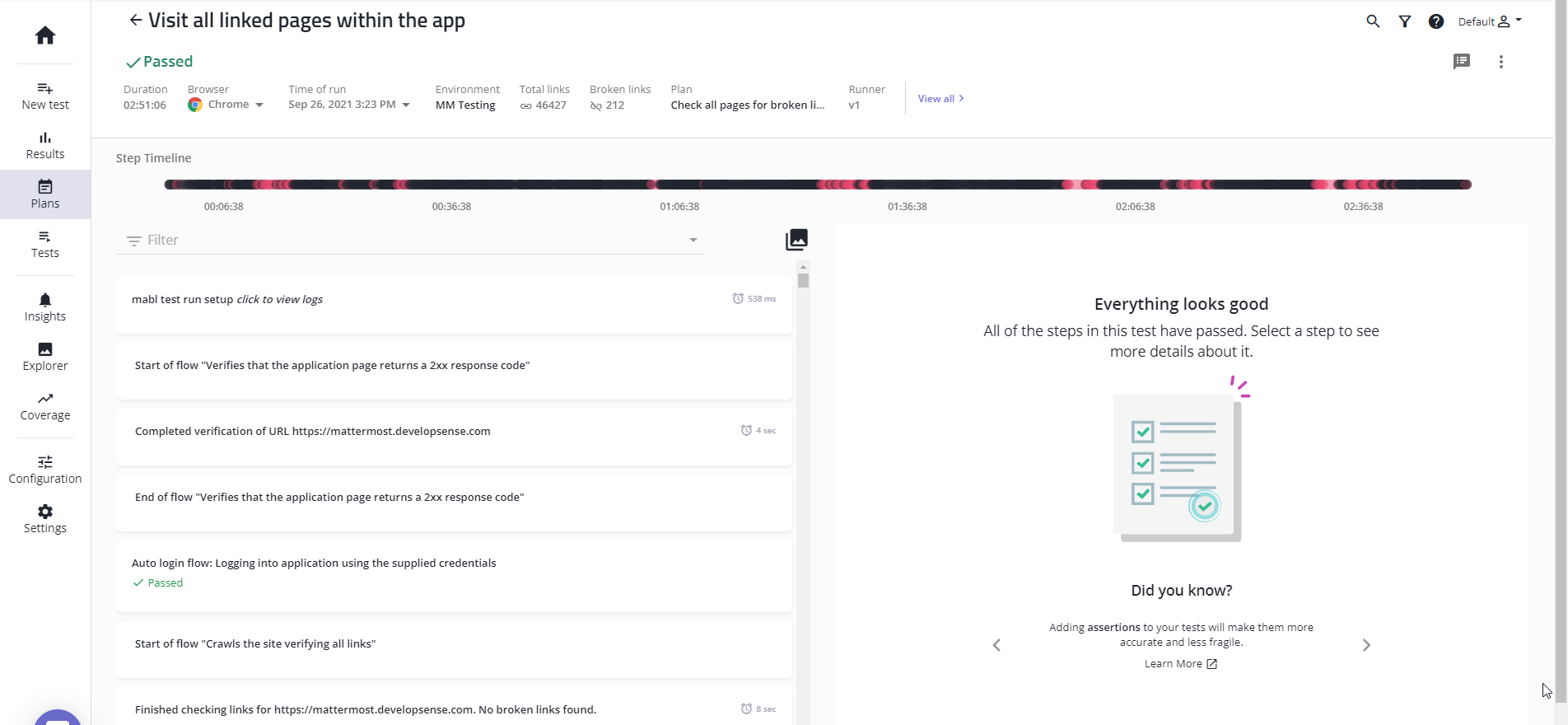

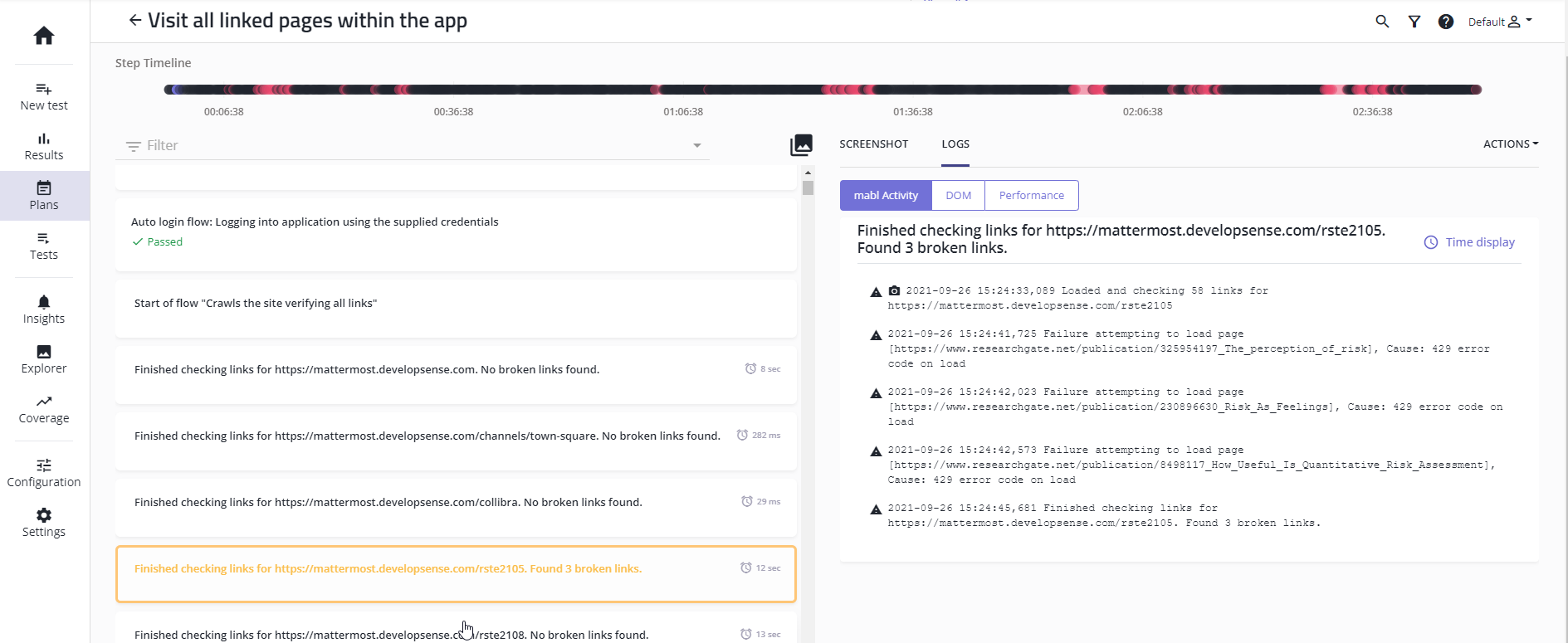

In my initial round of testing in September, I created a plan—essentially a suite of recorded procedures and tasks that mabl offers. That plan included crawling the entire Mattermost instance for broken links. mabl’s summary report indicated that everything had passed, and that there were no broken links. “Everything looks good!”

I scrolled down a bit, though, and looked at the individual items below. There I saw “Found 3 broken links” on the left, and the details on the right.

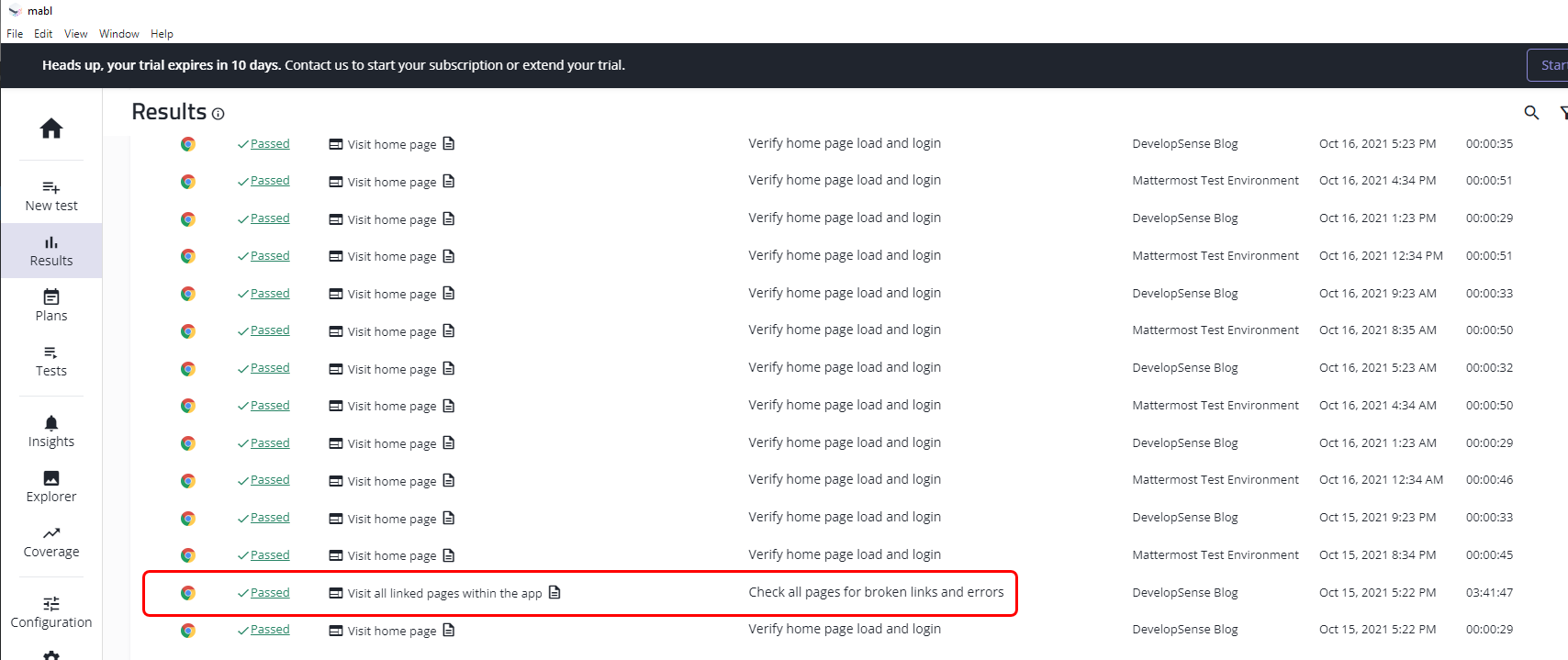

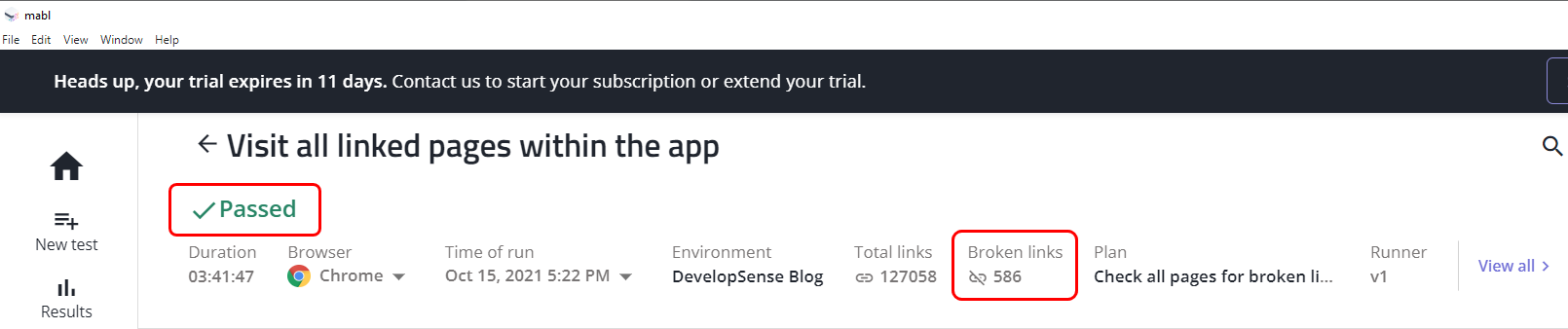

In the October 13-15 test activity, I set up a task for mabl to crawl my blog looking for broken links. Thanks to various forms of bitrot (links that have moved or otherwise become obsolete, commenters whose web sites have gone defunct, etc.), there are lots of broken links. mabl reports that everything passed.

This looks fine until you look at the details. mabl identified 586 broken links (many of them are duplicates)… and yet the summary says “Visit all linked pages within the app” passed. (See Bug 28 below.)
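The rule I would expect a broken-link check to follow is simple enough to sketch in a few lines of Python. This is a hypothetical summary function (`summarize` and the example URLs are mine, not mabl’s). It reports failure whenever any broken link is found, and it distinguishes total from unique links, since many of the reported links were duplicates.

```python
def summarize(step_name, broken_links):
    """Hypothetical rule: a broken-link check passes only if it found none."""
    if not broken_links:
        return f"{step_name}: passed"
    unique = len(set(broken_links))
    return f"{step_name}: failed ({len(broken_links)} broken links, {unique} unique)"


step = "Visit all linked pages within the app"
print(summarize(step, []))
# Visit all linked pages within the app: passed
print(summarize(step, ["https://example.com/a", "https://example.com/a", "https://example.com/b"]))
# Visit all linked pages within the app: failed (3 broken links, 2 unique)
```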

Epilogue

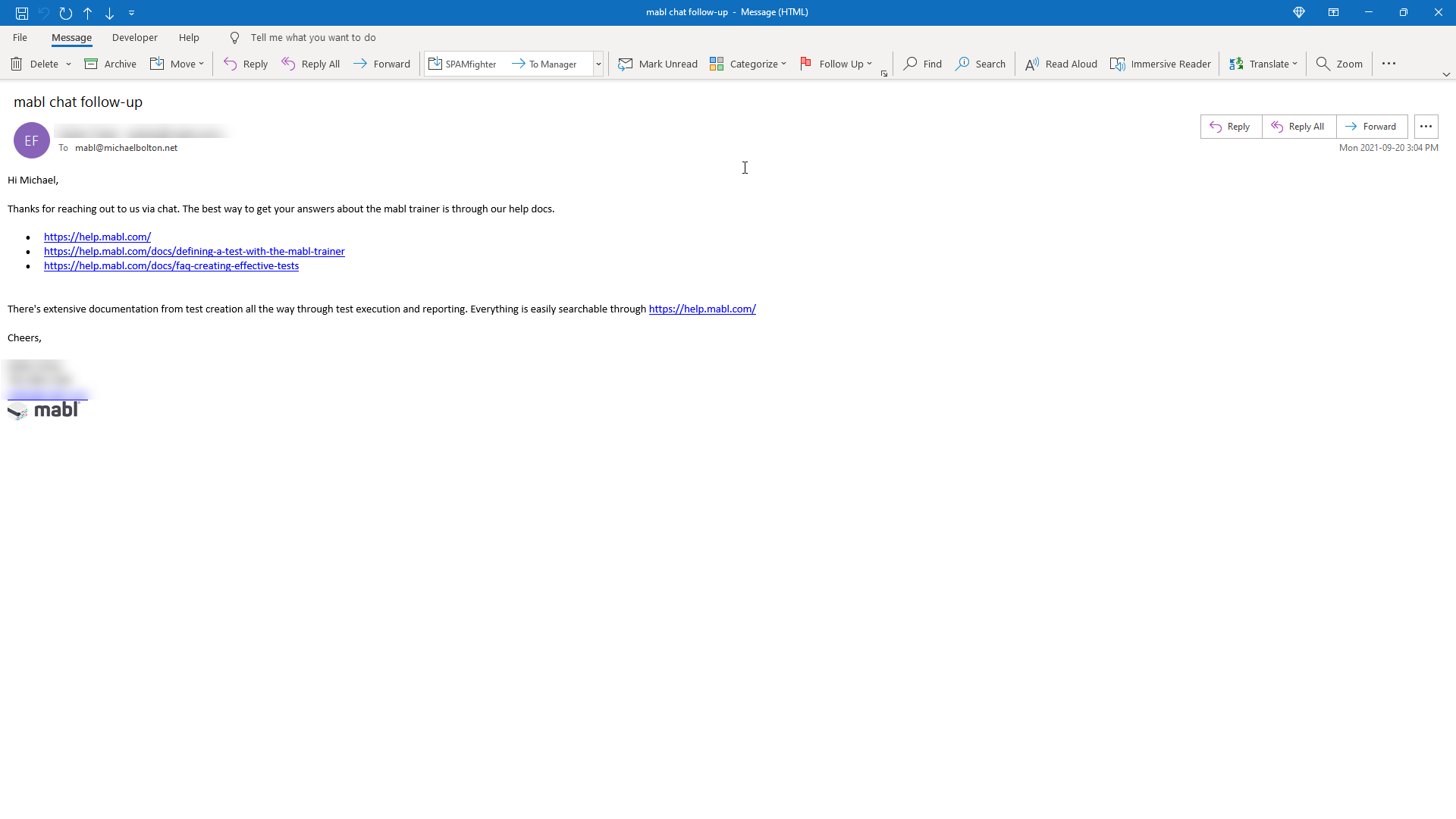

During my first round of testing in September, I contacted mabl support via chat, and informed the representative that I was encountering problems with the product while preparing a talk. The representative on the chat promised to have someone contact me about the problems. The next day, I received this email:

Let me zoom that up for you:

And that, so it seems, is what passes for a response: RTFM.

Bug Summaries

Bug 1: Resizing the browser while training resulted in an endless loop that hangs the product. (Observed several times in 1.2.2; not observed so far in 1.3.5.)

Bug 2: The browser cannot be resized to the full size of the screen on which the training is happening; and at the same time, the trainer window cannot be repositioned onto another screen. (This was happening in 1.2.2 when resizing didn’t result in the endless loop above; it still happens in 1.3.5.) This is inconsistent with usability and inconsistent with comparable products; if the product is intended to replicate the experience of a user, it’s also inconsistent with purpose.

Bug 3: The default behaviour of the application running in the browser is different from the naturalistic encounter with the product and, as such, in this case, rendered input activity invisible unless I actively scrolled the browser window using the cursor keys, and until I figured out where the browser height was set. Inconsistent with usability for a test tool at first encounter; inconsistent with charisma.

Bug 4: mabl’s playback function doesn’t play back simple text entry into a Mattermost instance, but the logging claims that the text was entered correctly. This happens irrespective of whether the procedure is run from the cloud or from the local machine. This is inconsistent with comparable products; inconsistent with purpose; inconsistent with basic capabilities for a product of this nature; and inconsistent with claims (https://help.mabl.com/changelog/initial-keypress-support-in-the-mabl-trainer).

Bug 5: mabl seems unable to locate emojis in Mattermost’s emoji popup—something that a human tester would have no problem with—even though the Trainer supposedly captured the action. (Inconsistency with purpose.)

Bug 6: Auto-healing fails when trying to locate buttons in the Mattermost emoji picker. (Inconsistency with claims.)

Bug 7: The “Local run output” window falsely suggests that attempts to enter text are successful when the text entry has not completed. (Inconsistent with basic functionality; inconsistent with purpose.)

Bug 8: The “Local run output” window does not record the actual text that was entered by the runner. Only the first 37 characters of the entry, followed by an ellipsis (“…”) are displayed. (Inconsistent with usability for a test tool.)

Bug 9: Date stamps are absent in the logging information displayed in the “Local run output” window. Only time stamps appear, and at that only precise down to the second. This is an inconvenience for analyzing logged results over several days. (Inconsistent with usability for testing purposes; also inconsistent with product (mabl’s own application log).)

Bug 10: Time stamps in the “Local run output” window are rendered in AM/PM format, which makes sorting and searching via machinery less convenient. (Inconsistent with testability; also poor internationalization; and also inconsistent with mabl’s own application log.)

Bug 11: Cannot save data to a file directly from the “Local run output” window. (Inconsistent with purpose; inconsistent with usability; risk of data loss.) Workarounds: copying data from the log and pasting it into the user’s own record; spelunking through mabl’s mablApp.log file.

Bug 12: Local run log output does not appear in mabl’s GUI, neither under Results nor under the Run History tab for individual tests. If there is a facility for that available from the GUI, it’s very well hidden. (Inconsistent with usability for a record/playback testing tool.) Workaround: there is some data available in the general application log for the product, but it would require effort to be disentangled from the rest of the log entries.

Bug 13: The test steps editing window makes it harder than necessary to view the content of variables that will be used for the test procedure. For instance, the user must choose Edit Steps, then choose Quick Edit, then choose the URL to train against, and then choose the step “Visit URL assigned to variable ‘app.url’”.

Bug 14: The main test editor window hides the content of text entry strings longer than about 40 characters. Since there is ample empty whitespace to the right, it is unclear why longer strings of text aren’t displayed. Inconsistent with explainability, inconsistent with purpose (the ability to troubleshoot test steps easily).

Bug 15: mabl’s application log (mablApp.log) limits the total length of the typed string to 40 characters (37 characters, plus an ellipsis (…)). (Is the Local Output Log generated from the mablApp.log?)

Bug 16: In a step to enter text in Quick Edit mode, only the input text can be edited; no other aspect—neither the target nor the action of the step can be edited.

Bug 17: Escape sequences to send specific keys (e.g. Tab, Enter) are not supported by mabl’s Quick Edit step editor. Inconsistent with comparable products, inconsistent with purpose.

Bug 18: The “Insert Steps” option in the Quick Edit dialog does not offer options for entering text, sending keys, or clicking on elements. Inconsistent with purpose; inconsistent with comparable products.

Bug 19: The “Send keypress” dialog allows changing the key to be sent, or to add modifier keys, but doesn’t allow changing the element to which the key is sent.

Bug 20: The trainer window fails to identify which button is to be clicked in a step unless the button has a text label. Some useful information (e.g. the Aria Label or class ID) to identify the button is available if you enter the step and try to edit it. (Inconsistent with product; inconsistent with purpose.)

Bug 21: The .CSV file identifying the steps for a test does not reflect the actual steps performed. (Inconsistent with product; inconsistent with the purpose of trying to see the actual steps in the procedure.) Workaround: going into each step in the Quick Edit or Trainer views displays the entire text, but for long procedures with strings longer than 40 characters, this could be very expensive in terms of time.

Bug 22: You can’t upload a CSV of test steps at all. Editing test steps depends on mabl’s highly limited Trainer or Quick Edit facilities—and Quick Edit depends on the Trainer. The purpose of downloaded CSV step files is unclear.

Bug 23: A file upload recorded through the Trainer / Runner mechanism never happens.

Bug 24: The Help/Get Logs for Support option isn’t set by default to go to the folder where mabl’s logs are stored. Instead, it opens up a normal File/Open window (in my case defaulting to the Downloads folder, perhaps because this is the most recent location where I opened my browser, or…)

Bug 25: The mabl menu View / Zoom In function claims to be mapped to Ctrl-+. It isn’t. The Zoom Out (Ctrl-minus) and Actual Size (Ctrl-0) functions work.

Bug 26: I noticed on October 17 that an update was available. There is no indication that release notes are available or what has changed. When I do a Web search for mabl release notes, such release notes as exist don’t refer to version numbers!

Bug 27: The mabl Trainer window doesn’t have controls typically found in the upper right of a Windows dialog, which makes resizing the window difficult and makes minimizing it impossible. (Inconsistent with comparable products; inconsistent with UI standards.)

Bug 28: mabl’s Results table falsely suggests that a check for broken links “passed”, when hundreds of broken links were found. (Inconsistent with comparable products; inconsistent with UI standards.)

I thank early readers Djuka Selendic, Jon Beller, and Linda Paustian for spotting problems in this post and bringing them to my attention. Testers help other people look good!

You may also like to peruse the next item in this series, Experience Report: Katalon Studio.

Extra s in Mabl:

“mabls’s summary report indicated that everything had passed”

I’m providing editing here because I am currently running a POC with MABL and using your notes for my own review – thank you for documenting what you ran into.

My pleasure. Or at least, my dubious pleasure. I’m happy if it was helpful.

Did you mean MORE instead of NO MORE in the parentheses section?

“I started the mabl trainer, and chose to begin a Web test, in mabl’s parlance. (A test is more than a series of recorded actions.)”

It seems I did, and it seems I fixed it. On reflection though, there’s another way of punctuating the original text. In mabl’s parlance a test really is no more than a series of recorded actions.