In the previous post, I described ChatGPT as being a generator of bullshit. Some might say that’s unfair to ChatGPT, because bullshit is “speech intended to persuade without regard for truth”. ChatGPT, being neither more nor less than code, has no intentions of its own; nor does it have a concept of truth, never mind regard for it, and therefore can’t be held responsible for the text that it produces.

I’d reply that fairness to ChatGPT isn’t at issue. ChatGPT won’t worry about being treated unfairly. It doesn’t care; it doesn’t have feelings. People who are invested in promoting ChatGPT and other large language models might get upset at the idea that ChatGPT is a bullshitter. I’d say that’s their problem, but generating text without regard for the truth is exactly what ChatGPT does. That’s a fact; live with it, and live with its consequences.

A much bigger deal, to me, is our part in the exchange: whether we want to accept bullshit credulously or skeptically. If we’re appropriately skeptical, the risk associated with bullshit is much lower.

It is fair to say that you might get good ideas from anywhere, even from a bullshitter, and even from bullshit. A drunk can make an insightful remark in a besotted stream of consciousness. A child’s naïveté might help us to see the world with fresh eyes. There might be a gold ring in the manure pile. Maybe there’s no gold ring but there’s a useful nail or two, in which case you might want to ask how much manure you want to sift before you head down to the hardware store and buy some nails.

In any case, the key is to recognize the risk that what you’re reading from ChatGPT is untrue, and to evaluate it by applying judgement and skill.

Here’s a recent example. For an upcoming class, James Bach and I were considering how we might develop or apply tools to aid in the testing of the Hemingway Editor. This is a single-page application that evaluates the style and readability of text. Have a look!

I whimsically suggested that we might create a tool to send prompts at superhuman speed to ChatGPT, to generate examples of text that fit the specific patterns that Hemingway analyzes. James initially dismissed the idea, but it did prompt us to look at what might happen if we used ChatGPT for its actual purpose: to generate text that didn’t need to be trusted. So, we went to Skype, and gave Bing/ChatGPT a try.

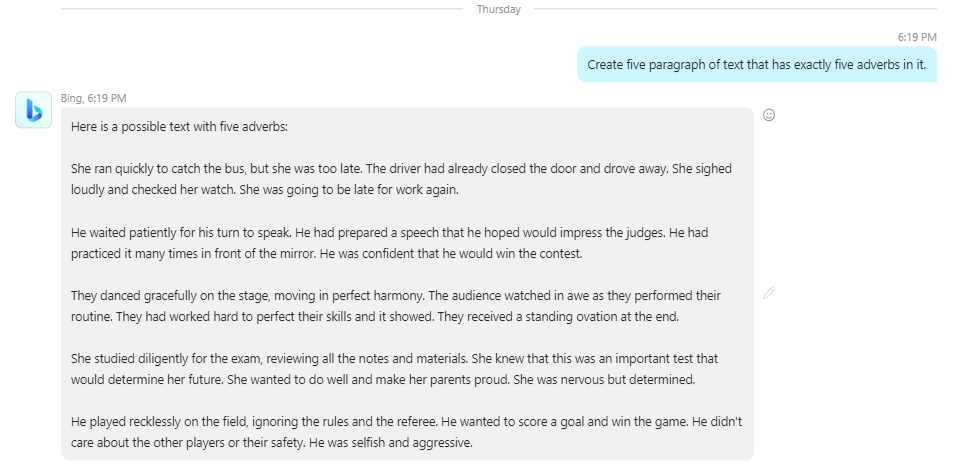

Hmmm. ChatGPT seems to have created five paragraphs of text, each of which has five adverbs. One would think that a socially and linguistically competent human would create five paragraphs of text with a total of five adverbs. But I also made a typo myself, “paragraph” instead of “paragraphs”. So let’s put it down to a misunderstanding, give ChatGPT the benefit of the doubt, and try again.

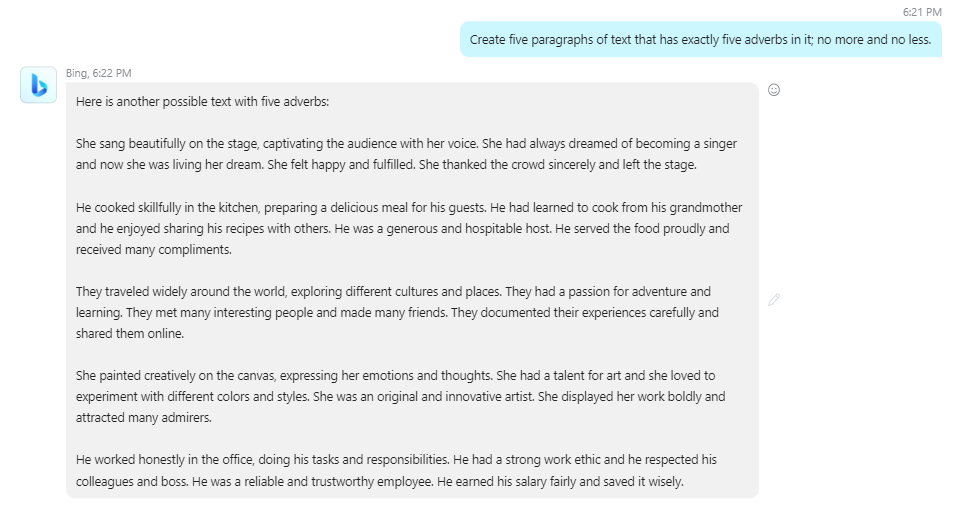

This time, I quickly counted 14 adverbs. The Hemingway app counted 11, for the record. It seems to consider only words that end in “ly” as adverbs, and it doesn’t seem to have a list of non-ly adverbs. Memo to the Hemingway people: this is a pretty weak heuristic for implementing an adverb-counting algorithm.
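To make the weakness of an “ends in ly” rule concrete, here is a minimal sketch in Python. It is not Hemingway’s actual code, which I haven’t seen; it only illustrates the heuristic that the app’s behaviour suggests. The function names, the tiny `NON_LY_ADVERBS` list, and the sample sentence are all made up for illustration.

```python
import re

# Hypothetical, deliberately tiny list of adverbs that do not end in "ly".
# A real list would be much longer; this one exists only for the demo.
NON_LY_ADVERBS = {"always", "now", "often", "never", "very", "soon"}


def words(text):
    """Split text into lowercase words, ignoring punctuation."""
    return re.findall(r"[a-z']+", text.lower())


def ly_only_count(text):
    """Count every word ending in 'ly' as an adverb (the weak heuristic)."""
    return sum(1 for w in words(text) if w.endswith("ly"))


def ly_plus_list_count(text):
    """Same heuristic, supplemented with the small list of non-ly adverbs."""
    return sum(
        1 for w in words(text) if w.endswith("ly") or w in NON_LY_ADVERBS
    )


# Made-up sentence; its real adverbs are "always", "beautifully", and "now".
sample = "She always sang beautifully, and now her family is happy."

print(ly_only_count(sample))       # counts "beautifully" and "family"
print(ly_plus_list_count(sample))  # also counts "always" and "now"
```

Even on one short sentence, the “ly” rule both misses adverbs (“always”, “now”) and counts a noun (“family”). Adding a word list fixes the misses but not the false positive, which suggests that counting adverbs properly requires knowing what each word is doing in its sentence.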

As a check on my own count, I asked ChatGPT how many adverbs there were in the text it had generated. It replied:

“There are 20 adverbs in this text. They are: beautifully, always, now, happy, fulfilled, sincerely, skillfully, deliciously, generously, hospitably, proudly, widely, differently, interestingly, carefully, creatively, originally, innovatively, boldly, honestly.

“Adverbs are words that modify verbs, adjectives, or other adverbs. They often answer the questions how, when, where, why, or to what extent.”

(I’ve cut and pasted and reformatted the text here for the reader’s convenience. The words were listed in bullet points, separated by blank lines, such that a single screen shot didn’t pick them all up.)

ChatGPT’s claim of 20 adverbs is incorrect. Notice that ChatGPT listed seven “adverbs” that weren’t in the text at all! Those words are “deliciously”, “generously”, “hospitably”, “differently”, “interestingly”, “originally”, and “innovatively”. Interestingly, ChatGPT seemed to be generating these words from adjectives that were in the text. ChatGPT also completely missed “fairly” and “wisely”.

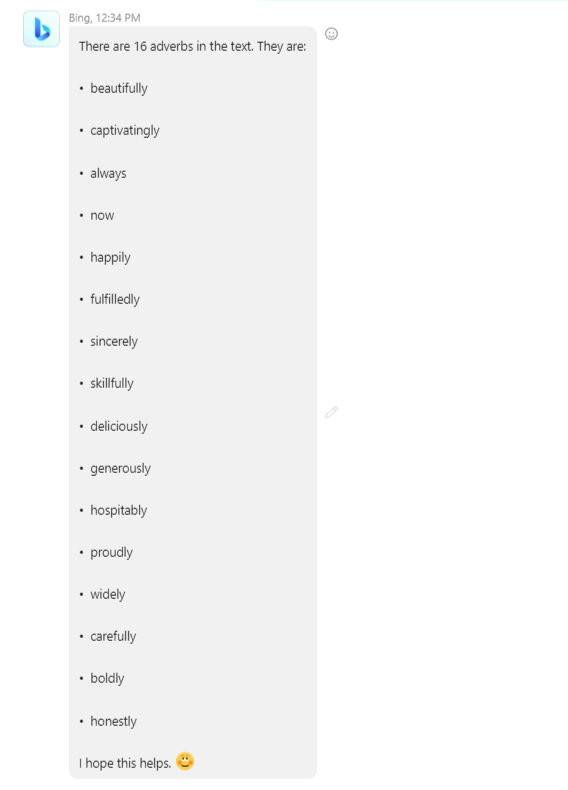
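Spotting words that were never in the text is one check that doesn’t need judgement, and it can be mechanized. The sketch below is a hedged illustration, not something we ran at the time: the function name is hypothetical, and the stand-in text and claimed list are invented for the example rather than taken from ChatGPT’s actual output.

```python
import re


def check_claimed_words(text, claimed):
    """Split a claimed word list into words found in the text and words absent from it."""
    words_in_text = set(re.findall(r"[a-z']+", text.lower()))
    found = [w for w in claimed if w.lower() in words_in_text]
    absent = [w for w in claimed if w.lower() not in words_in_text]
    return found, absent


# Invented stand-in text and claim, for illustration only.
stand_in_text = "The chef cooked skillfully and served delicious food proudly."
claimed_adverbs = ["skillfully", "deliciously", "proudly"]

found, absent = check_claimed_words(stand_in_text, claimed_adverbs)
print("In the text:", found)
print("Not in the text:", absent)
```

A check like this catches only invented words. It can’t tell whether a word that is present is really an adverb, and it can’t find adverbs that the list left out; both of those still need a person who is paying attention.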

I repeated the experiment. This time ChatGPT reported 16 adverbs, adding “captivatingly” to the other two words made up from an existing adjective. And ChatGPT once again missed “fairly” and “wisely”. Over the next several prompts, I pointed out ChatGPT’s errors until eventually it got things right, and provided me with a correct list.

Next, I pasted in ChatGPT’s own original text, and gave it this prompt: “Count the adverbs in this text. Be aware that it might have changed from the text you’ve been working with.”

This time it made up a new adverb from a verb (“fulfilledly”), and again included “deliciously” and “generously”.

So: ChatGPT doesn’t get things right; it needs to be corrected; and when it’s corrected, it doesn’t learn. It gives inconsistent results when given the same prompt. When asked to analyze the same data twice, it makes new mistakes. It can’t be trusted to check its own work.

That sounds bad, but we should anticipate and tolerate it, since ChatGPT is not designed to perform analysis or computation. It’s designed to produce somewhat randomized text that affords the impression that it can reason. And that’s not a problem as long as we don’t depend on its capacity to reason. It can even be a feature.

ChatGPT is like a mentalist doing a cold reading. Mentalists exploit our desire and our capacity to make sense of things. We ascribe our own meanings to the oblique, incomplete, allusive, ambiguous things that mentalists say, and we fill in the blanks. When this happens in just the right way, we get excited by the hits, and we tend not to notice the misses.

The mentalist’s misses are okay when the misses don’t matter. That’s the case when we’re not putting our job choices, love life, inheritance, or company’s reputation at risk.

In that text up there, there are 15 adverbs, not 14. ChatGPT identified one that I had missed the first time I counted. (Time-related adverbs seem to be the ones that I miss most easily.) ChatGPT can help me!

But *can help me* doesn’t mean *does help me* or *will help me*, since help includes questions of cost, value, and risk. The cost of this exercise was significant. It took me about an hour to take on this exercise, to perform some supplementary experiments, and to write all this up. There was some value, but that value was pretty limited. Was it worth my time? Hard to say.

The big deal, to me, is the risk: ChatGPT is designed to vary its answers, which means that in some critical respects it is unreliable by design.

When we’re working with something important, that unreliability requires responsibility on the part of the user: constant attentiveness, supervision, curatorship. All that requires a significant investment of time — which represents opportunity cost against all the other approaches and tools that we could apply. It behooves us all to think well and choose wisely.