An article that I was reading this morning was accompanied by a stock photo with an intriguing building in the background.

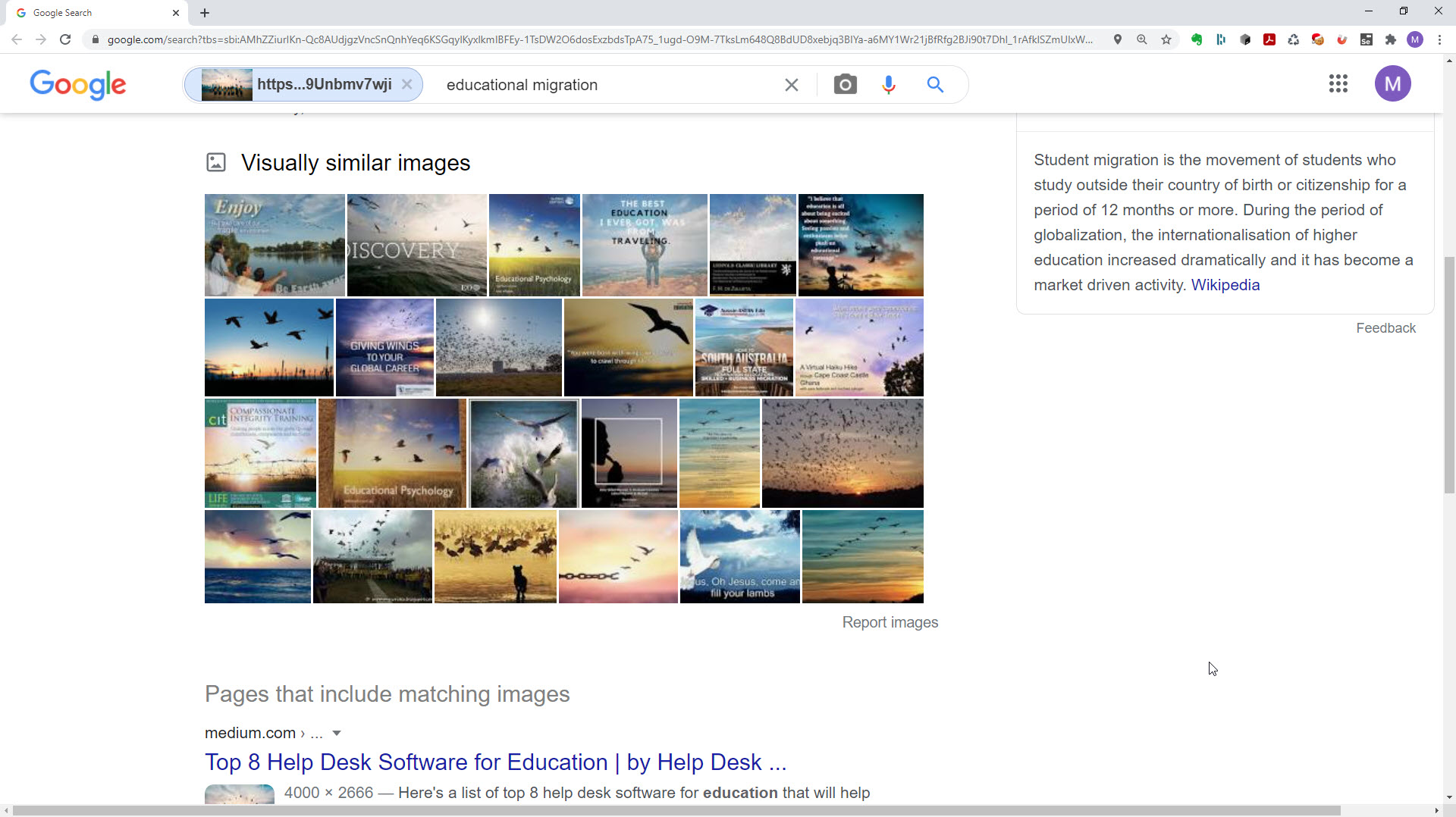

I wanted to know where the building was, and what it was. I thought that maybe Chrome’s “Search Google for image” feature could help to locate an instance of the photo where the building was identified. That didn’t happen, but I got something else instead.

Google Images provided me with a reminder that “machine learning” doesn’t see things and make sense of them; it matches patterns of bits to other patterns of bits. A bunch of blobby things in a variegated field? Birds in the sky, then—and the fact that there are students in their graduation gowns just below doesn’t influence that interpretation.

That reminded me of this talk by Martin Krafft:

“The MIT network’s concept of a tree (called a symbol) does not extend beyond its visual features. This network has never climbed a tree or heard a branch break. It has never seen a tree sway in the wind. It doesn’t know that a tree has roots, nor that it converts carbon dioxide into oxygen. It doesn’t know that trees can’t move, and that when the leaves have fallen off in winter, it won’t recognize the tree as the same one because it cannot conclude that the tree is still in the same position and therefore must be the same tree.”

Martin Krafft, The Robots Won’t Take Away Our Jobs: Let’s Reframe the Debate on Artificial Intelligence, 14:30

Then I had another idea: what if I fed the URL of the image above to Google Images? This is what I got:

Software and machinery assist us in many ways as we’re organizing and sifting and sorting and processing data. That’s cool. When it comes to making sense of the world, drawing inferences, and making decisions that matter to people, we must continue to regard the machinery as cognitively and socially oblivious. Whether we’re processing loan applications, driving cars, or testing software, machinery can help us, but responsible, socially aware humans must remain in charge.

(A couple of friendly correspondents on Twitter have noted that the building is the Marina Bay Sands resort in Singapore.)

[…] Bug of the Day: AI Sees Bits, Not Things Written by: Michael Bolton […]

It seems (from the article and a little more testing of my own) that the image search results are highly influenced by the associated text (“educational migration” in your first screenshot). That suddenly made all the bird pictures make more sense to me, since the search also seems to be picking up on the word “migration”.

Michael replies: Yes; you’re entirely right. I noticed that too, but for brevity’s sake didn’t comment on it. It would be really interesting to dive deeper into that aspect of Google Images’ behaviour, which…

Also, I thought it was worth pointing out that the screenshot for the URL search doesn’t appear to show an actual Google Images search. The “search by image” link just above the results (which seemed to be heavily influenced by the words “develop” and “sense” 🙂 ) took me to an image search with the text “study abroad” next to the image instead of “educational migration”, and gave mostly results of people standing side by side with their arms in the air. For good measure I tried changing that text to a few other things, and “building in background” actually did give one other result that included what appears to be the same building.

…you did. Thank you!

All the same, the points made are as relevant as always. Cheers!

Yes, it’s not totally dependable as of now.

But you will agree it’s good and will be better in the future.

Michael replies: No, I won’t agree to that.

It’s not helpful or useful to say AI is good (or bad) without context. It’s a fact (and it is a fact) that AI recognizes bits and strings, and not things in the world. It’s a fact that, for the foreseeable future, that is all that it will recognize.

That doesn’t constitute good or bad. In circumstances where matching patterns of bits to other patterns is all we need to accomplish a task, AI might be good. In cases where interpretation of those patterns matters, AI is almost certainly not good, not dependable. It’s not even close to being dependable.

Is a hammer good? It’s great for hammering, but it makes a pretty terrible text processor. What makes any technology good or bad in context is a combination of that technology’s capabilities and its relationships to the human purposes that people intend it to support.

[…] Now, to be sure, I do still think there is a bit of a false dichotomy that testers reinforce. But there’s a wider point here: I test my own thinking routinely. If I was a machine — say, an AI — I would essentially be an algorithm. I would not be testing my own thinking. I would not be exploring how I view my discipline and how I practice it. I would see the bits and pieces and not the whole picture as AI does (and as Michael Bolton states in Bug of the Day: AI Sees Bits, Not Things). […]