Last week on Twitter, I posted this:

“The testing is going well.” Does this mean the product is in good shape, or that we’re obtaining good coverage, or finding lots of bugs? “The testing is going badly.” The product is in good shape? Testing is blocked? We’re noting lots of bugs erroneously?

— Michael Bolton (@michaelbolton) January 31, 2018

“The testing is going well.” Does this mean the product is in good shape, or that we’re obtaining good coverage, or finding lots of bugs?

“The testing is going badly.” What does that mean? That the product is in bad shape? (Note the error in the original tweet!) Testing is blocked? We’re noting lots of bugs erroneously?

People replied offering their interpretations. That wasn’t too surprising. Their interpretations differed; that wasn’t too surprising either. I was more surprised at how many people seemed to believe that there was a single basis on which we could say “the testing is going well” or “the testing is going badly”—along with the implicit assumption that people would automatically understand the answer.

Testing could be going well in one sense but badly in another. Maybe the testing work is going smoothly and straightforwardly, but there are lots of problems in the product. Perhaps the product looks pretty good on the whole, so far, but the testing work is slow and hard because we don’t have good access to the developers.

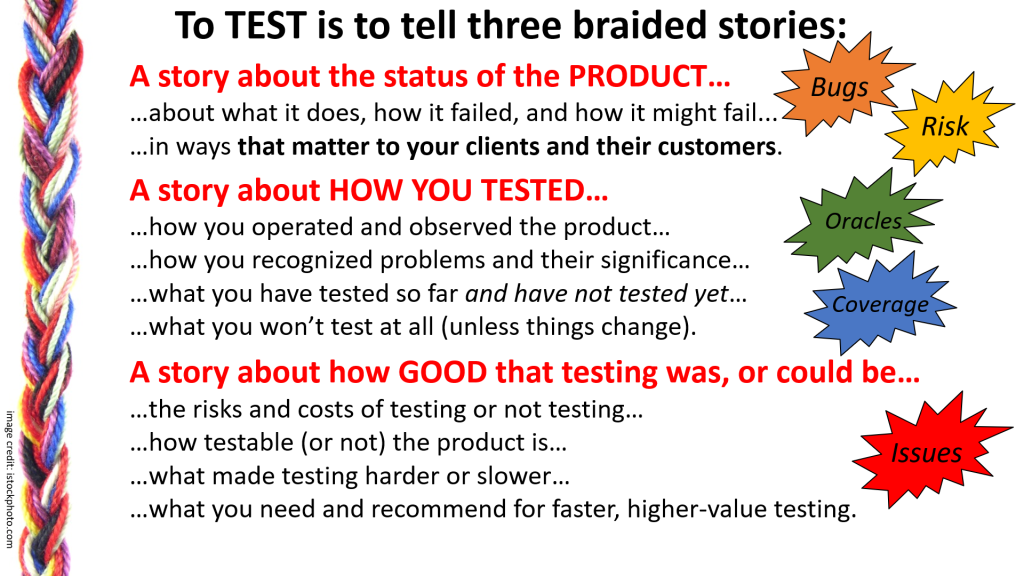

To test is, among many other things, to construct, edit, narrate, and justify a story. Like any really good story, a testing story involves more than a single subject. An excellent, expert testing story has at least three significant elements, three plot lines that weave around each other like a braid. Miss one of those elements, and the chance of misinterpretation skyrockets. I’ve talked about this before, but it seems it’s time for a reminder.

In Rapid Software Testing, we emphasize the importance of a testing story with three strands, each of which is its own story.

We must tell a story about the product and its status. As we have tested, we have learned things about the product: what it is, what it does, how it works, how it doesn’t work, and how it might not work in ways that matter to our various clients. The overarching reason that most clients hire testers is to learn about problems that threaten the value of the product, so bugs—actual, manifest problems—tend to lead in the product story.

Risks—unobserved but potential problems—figure prominently in the product story too. From a social perspective, good news about the product is easier to deliver, and it does figure in a well-rounded report about the product’s state. But it’s the bad news—and the potential for more of it—that requires management attention.

We must tell a story about the testing. If we want management to trust us, our product story needs a warrant. Our product story becomes justified and is more trustworthy when we can describe how we configured, operated, observed, and evaluated the product. Part of this second strand of the testing story involves describing the ways in which we recognized problems: our oracles. Another part of this strand involves describing where we looked for problems: our coverage.

It’s important to talk about what we’ve covered with our testing. It may be far more important to talk about what we haven’t covered yet, or won’t cover at all unless something changes. Uncovered areas of the test space may conceal bugs and risks worse than any we’ve encountered so far.

Since we have limited time and resources, we must make choices about what to test. It’s our responsibility to make sure that our clients are aware of those choices, how we’re making them, and why we’re making them. We must highlight potentially important testing that hasn’t been done. When we do that, our clients can make informed decisions about the risks of leaving areas of the product untested—or provide the direction and resources to make sure that they do get tested.

We must tell a story about how good the testing is. If the second strand of the testing story supports the first, this third strand supports the second. Here it’s our job to describe why our testing is the most fabulous testing we could possibly do—or to the degree that it isn’t, why it isn’t, and what we need or recommend to make it better.

In particular, we must describe the issues that present obstacles to the fastest, least expensive, most powerful testing we can do. In the Rapid Software Testing namespace, a bug is a problem that threatens the value of the product; an issue is a problem that threatens the value of the testing. (Some people say “issue” for what we mean by “bug”, and “concern” for what we mean by “issue”. The labels don’t matter much, as long as people recognize that there may be problems that get in the way of testing, and bring them to management’s attention.)

A key element in this third strand of the testing story is testability. Anything that makes testing harder, slower, or weaker gives bugs more time and more opportunity to survive undetected. Managers need to know about problems that impede testing, and must make management decisions to address them. As testers, we’re obliged to help managers make informed decisions.

On an unhappy day, some time in the future, when a manager asks “Why didn’t you find that bug?”, I want to be able to provide a reasonable response. For one thing, it’s not only that I didn’t notice the bug; no one on the team noticed the bug. For another, I want to be able to remind the manager that, during development, we all did our best and that we collaboratively decided where to direct our attention in testing and testability. If we don’t talk about testing-related issues during development, those decisions will be poorly informed. And if we missed bugs, I want to make sure that we learn from whatever mistakes we’ve made. Allowing issues to remain hidden might be one of those mistakes.

In my experience, testers tend to recognize the importance of the first strand—reporting on the status of the product. It’s not often that I see testers who are good at the second strand—modeling and describing their coverage. Worse, I almost never encounter test reports in which testers describe what hasn’t been covered yet or will not be covered at all; important testing not (yet) done.

As for the third strand, it seems to me that testers are pretty good at reporting problems that threaten the value of the testing work to each other. They’re not so good, alas, at reporting those problems to managers. Testers also aren’t necessarily so good at connecting problems with the testing to the risk that we’ll miss important problems in the product.

Managers: when you want a report from a tester and don’t want to be misled, ask about all three parts of the story. “How’s the product doing?” “How do you know? What have you covered, and what important testing hasn’t been done yet?” “Why should we be happy with the testing work? Why should we be concerned? What’s getting in the way of your doing the best testing you possibly could? How can we make the testing go faster, more easily, more comprehensively?”

Testers: when people ask “How is the testing going?”, they may be asking about any of the three strands in the testing story. When we don’t specify what we’re talking about, and reply with vague answers like “the testing is going well” or “the testing is going badly”, the person asking may apply the answer to the status of the product, the test coverage, or the quality of the testing work. The report that they hear may not be the report that we intended to deliver. To be safe, even when you answer briefly, make sure to provide a reply that touches on all three strands of the testing story.

Hello, Michael!

Can you please explain how we can scale this idea to Scrum? For example, our sprints last two weeks. Each tester tests from 5 to 12 features, so on average testing takes two days per feature.

Michael replies: You can scale a three-part testing story up to an exhaustive report at the end of a project, to a two-minute summary at a sprint retrospective, or down to a few sentences in a morning standup.

In our teams we always communicate with stakeholders and teammates (if we need it).

Michael replies: The parenthetical confuses me a little. I can’t think of a circumstance in which you don’t need to communicate with stakeholders and teammates to some degree.

We have a Walkthrough stage, where we can check with stakeholders whether we understood everything correctly and whether we are moving in the right direction.

We can always ask BAs about requirements, and developers about the current implementation, what it might affect, and what else we should test.

Also, daily standups help all teammates understand the current status of development and testing.

So every day at the standup, we have answers to all these questions: “Does this mean the product is in good shape, or that we’re obtaining good coverage, or finding lots of bugs?” “The testing is going badly.” The product is in good shape? Testing is blocked? We’re noting lots of bugs erroneously?

Michael replies: That’s not all, I’d say.

At my previous workplace we used the Waterfall model, and this approach was really suitable there: we prepared a large test summary report at the end of each milestone, along with some intermediate reports, so all stakeholders understood how the testing was going.

Michael replies: Again, as long as you can summarize, the three-part testing story scales. You don’t have to tell it in a specific order. In a daily standup meeting, you could easily say something like this:

“We’ve been doing basic functionality testing on the login feature. We found a bug where certain roles weren’t getting the right permissions, and another one where the timestamps referred to the wrong time zone. We intend to do load and stress testing later this week, but without access to the staging environment—which is down right now—we won’t be able to do it, so we’ll need someone’s help on that. Any questions?”

It seems to me that that hits all three strands in the braid. If you have bigger concerns than that, you can add “And I’d like to provide a more detailed report to you, Dear Product Owner, after the standup.” If development work and the talk about it can scale, so can testing work and the talk about it.

Hello, Michael!

Thanks for your answers.

>The parenthetical confuses me a little. I can’t think of a circumstance in which you don’t need to communicate with stakeholders and teammates to some degree.

Actually, I meant that we don’t always need to communicate with stakeholders between the grooming and walkthrough sessions; only when something is unclear or new circumstances arise.

>Michael replies: You can scale a three-part testing story up to an exhaustive report at the end of a project, a two-minute summary at a sprint retrospective, or down a few sentences in a morning standup.

Does that mean that we don’t actually need to prepare a big Test Summary Report for every feature in the sprint? By big, I mean something like http://www.ufjf.br/eduardo_barrere/files/2011/06/SQETestSummaryReportTemplate.pdf (or similar), where we describe what was tested, how it was tested, what we didn’t test and why, and so on.

Michael replies: I can’t tell you what is and is not appropriate in your context. I don’t know you, and I don’t know your business. It’s worth noting: neither does the IEEE. What you must produce is not up to anyone to decide other than you or your clients.

I’m asking because we are now discussing with our QA teammates which artifacts we need to prepare for each tested feature. Different POs need different artifacts: some POs need a report like the one above, so their QA prepares it and has low velocity, and from my point of view that practice has nothing in common with Rapid Testing.

What can you advise us? How can we reduce the number of reports (we already have TestRail and can export test run results)? How can we find something that will suit everyone?

Michael replies: Do exactly what you’re doing now. Talk about it. Experiment with it. See how people feel about the results. Adjust things to keep people satisfied. My personal bias in project work is to emphasize face-to-face conversation, chat, and discussion; to summarize; to avoid producing documentation that no one will read.

That’s why I’m asking how we can scale it to Scrum. As I understand you, we don’t actually need such big reports for each feature; they would be redundant (but it is a very good idea to prepare such a report at the end of a project or milestone, and some intermediate reports at the retro every two weeks).

Michael replies: That sounds good to me. But I don’t matter. You and your team decide what you want for yourselves and for each other.

One important note: half of the team is distributed. But the PO who needs big reports for every feature works in the same office as the QA who prepares those reports for him. My PO doesn’t need such reports, but he is in Australia and I am in Russia. He relies fully on my testing.

Michael replies: If you and your client are happy, everything’s fine, right?

I found it interesting when you said testing a product helps you learn about it and its problems. In my opinion, when you’re developing a product, you should definitely test it to learn how it works. Plus, in that way, you’ll learn about how its performance is doing. I think you did a great job explaining the benefits of testing a product.