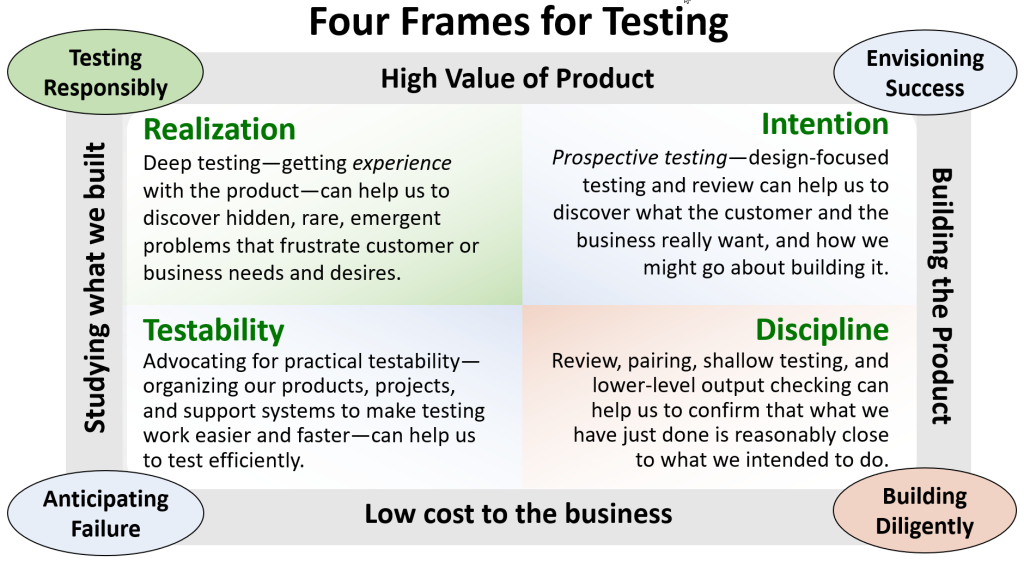

The previous post in this series provided a detailed description of testing framed in terms of Intention, Discipline, Testability, and Realization:

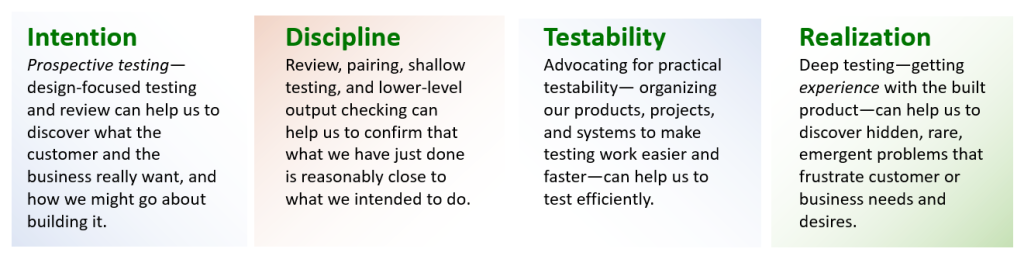

It might be tempting to unroll these frames by starting in the top right, and rearranging them in a nice, tidy, linear sequence:

Although it’s not the way people usually talk about it, you could think of this as a kind of end-to-end testing. Most of the time, so it seems, people think of end-to-end testing of a product or system in terms of an explicit workflow, or a business process, or a transaction. When we take an expansive notion of products — the one I’ve been referring to all along in this series (and I’m about to do it again) — every product is worthy of some degree of end-to-end testing in at least three dimensions.

One dimension is framed by the product’s role in the system of which it’s an element. For an API endpoint, for instance, an end-to-end test can be one that starts with the intention for a task to be accomplished, a set of preconditions for the request to be made, and the data that some person or process prepares for the request; and that ends when the response is supplied to the requestor, when the transaction is logged, and when any memory used in the transaction is freed.
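To make the endpoint example concrete, here is a minimal sketch of the checkable core of such an end-to-end pass. Everything in it is an assumption made for illustration: `TransferService`, `handle_transfer`, the account names, and the audit log are hypothetical stand-ins, not part of any real product or library. It walks from intention and preconditions, through the prepared request, to the response and the logged transaction. Freed memory is not covered, since that is not easy to observe from Python, and a check like this captures only the machine-decidable part of the test; the experiencing and exploring still have to be done by a person.

```python
from dataclasses import dataclass, field


@dataclass
class TransferService:
    """Hypothetical in-memory stand-in for the system behind an endpoint."""
    balances: dict
    audit_log: list = field(default_factory=list)

    def handle_transfer(self, request: dict) -> dict:
        """Hypothetical handler: move funds and record the transaction."""
        src, dst, amount = request["from"], request["to"], request["amount"]
        if amount <= 0 or self.balances.get(src, 0) < amount:
            self.audit_log.append(("rejected", src, dst, amount))
            return {"status": 400, "error": "invalid transfer"}
        self.balances[src] -= amount
        self.balances[dst] = self.balances.get(dst, 0) + amount
        self.audit_log.append(("completed", src, dst, amount))
        return {"status": 200, "balance": self.balances[src]}


def check_transfer_end_to_end() -> dict:
    """Run one pass from intention to logged transaction; return what was observed."""
    # Intention: alice wants to pay bob 30.
    # Preconditions: both accounts exist and alice has sufficient funds.
    service = TransferService(balances={"alice": 100, "bob": 5})

    # Data that some person or process prepares for the request.
    request = {"from": "alice", "to": "bob", "amount": 30}

    # The request is made and the response is supplied to the requestor.
    response = service.handle_transfer(request)

    # End of the pass: gather the response, the resulting state, and the log entry.
    return {
        "response": response,
        "balances": dict(service.balances),
        "logged": service.audit_log[-1],
    }


if __name__ == "__main__":
    print(check_transfer_end_to_end())
```

The function returns its observations instead of asserting on them, so that a person can inspect the whole picture or an automated check can compare it with expectations.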

Another dimension of end-to-end testing is framed by the lifespan of the product, or feature, or component: the intentions that people have for it, the effort that goes into building and supporting it, and the testing that people do when the product has been realized, and maybe for quite a while afterwards, too.

Testing and Development Are Non-Linear

Jerry Weinberg observed that any line can look like a straight line if you look at a small enough part of it. That’s true if you look at it from far enough away, too. The Waterfall model (and the V-model that shoehorns testing onto it) might hold a lot of appeal for people who don’t look too closely at what happens in the course of developing software.

I referred to “the entire system” just above, but once again (I warned you I would do this!) note that “the entire system” or “the built product” doesn’t necessarily refer to a complete, running software application. Instead, it refers to the highest level of whatever we happen to be working with: a completed component; an entire requirements document; a fully-formed idea; an explicit idea that might require more development; a user story; an agenda for a sprint; a one-line bug fix… Everything we build or do, at every scale, and to some degree of thoroughness, can get tested end-to-end.

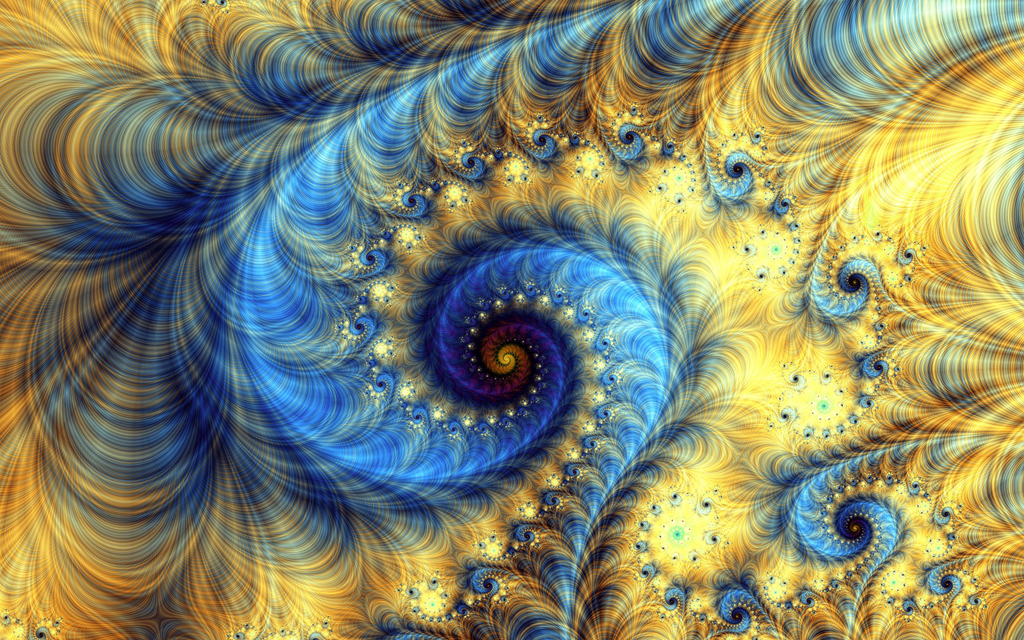

Developing a product that gets built and released to customers involves building a lot of stuff that comprises it and supports it. Each full-blown product consists of dozens or hundreds of other things that people have produced. Software development isn’t linear. It’s not even just loopy. It’s a fractal, self-similar all the way down and all the way up. The same goes for testing. Whatever we’re making, or writing, or building, we can apply testing activity from each of the four frames, at any level, at any time.

All of the things that people produce can be tested, all the way along, through the lenses of the four frames.

As we design, build, and test a feature,

- We can examine and explore ideas, requirements, and designs, and perform prospective thought experiments on them. (Intention)

- We can check each unit and component of our work in quick, non-disruptive, and disciplined ways as we go, to make sure that what we’re building is reasonably close to what we intended. (Discipline)

- We can review and evaluate the product and the project landscape to identify things that might make testing harder or slower. (Testability)

- Once the feature is built, we can test it thoroughly, making it interact with the rest of the system, getting experience with it and performing experiments on it to discover elusive problems. (Realization)

Some might complain about the idea of deep testing following building. Those who do seem to want to address the issue in at least one of two ways:

1) Insist that all of the testing work on the existing build of the feature should be done before moving on to the next build of the same feature. That sounds like a good idea until developers and managers chafe at having to do that testing, or waiting for it to be done, or both.

2) Insist that there’s simply no time for experiencing, exploring, or experimenting with the built product; therefore, eliminate that work, roll out the feature cautiously and conservatively, and trust that automated checks — mostly from the Intention and Discipline frames — will be sufficient to cover the risk of elusive and important problems. If there are such problems, roll back the feature, diagnose it, and put out a hot fix.

On the face of it, this doesn’t seem like a terrible idea to many people, and where risk and scale are relatively low, it might be just fine. Still, it’s worth considering that some problems are consequential, and rolling back might be tricky. Oh, it can be straightforward to roll back to an older build of the code; with good version control, that’s relatively easy. But it’s not so easy to roll back time. A sufficiently bad bug might lose or corrupt data, interrupt customers’ work, enable a security breach… Making good on those things can be difficult and expensive.

But there is a third way, and it can be combined with the options (especially Option 2) above:

3) Do fast, efficient, and relatively deep Realization-framed testing on each build, in parallel with development, as the feature is being refined incrementally.

The third approach affords opportunities for deep testing that doesn’t interrupt the developers’ flow, which allows the developers to continue making steady forward progress. When intentions are clear, when the developers are diligent (performing lots of testing in the Discipline frame), and when practical testability is high, deep testing can be done efficiently, and the risk of developers getting too far ahead of testing on built versions of the product can be reduced.

Using the Frames to Evaluate Our Work

There’s yet another fractal dimension of testing: a self-referential one. We can examine not only the product, but our own work.

Here’s an example. Suppose that we’re doing Intention-framed testing work, analyzing a set of user needs and desires, with the goal of creating a diagram that represents structural elements of part of a product, so that we can build it and develop test ideas for it.

- The first step is to get straight on our intentions: What is this diagram going to look like? What specifically is it intended to represent? Who is going to be using the diagram? Who else might be using it? What might each of these parties want from it? What could go wrong; how might the diagram be incomplete or misleading? How would we know about that as early as possible? What do we need to do to declare ourselves happy with the finished diagram?

- Then, discipline. Someone is drawing the diagram. We want them to get the drafting of it done quickly and efficiently, and we want them to have quick feedback — yet we don’t want to interrupt or pester or otherwise disrupt them. So, are the right people involved—and not too many of them? Are they checking their work against the intentions for it? Are they using the appropriate tools? Are they saving drafts where it’s useful and necessary, so they don’t lose work?

- Testability: as we move towards finishing and publishing the diagram, how should we prepare to test it — to perform a strong, deep critique of it? What is the gap between what we know and what we need to know? Are the right people qualified and ready to review it? Do they have what they need?

- Realization: Now that we’ve got a diagram that we believe to be complete, it’s time to review it, and consider trying it out with some of the people who represent its users. Are we done yet? Does the diagram represent all the things we intended it to? What problems might be remaining in it? What are the other interpretations that it might afford? What might we have missed? Is it useful? Will people be able to apply it for building the product and developing test ideas for it? Whose perspectives might we have forgotten? What’s the risk of someone misinterpreting it, and how might they do that? Now are we done yet?

Now, all this might seem overwhelming, but it need not be. Pretty much all of these questions can be considered and answered instantly, in parallel with the work of creating the diagram, during the same working sessions and conversations in which we’re making it. The idea is not to create a ponderous review process. On the contrary, the idea is to train our minds to raise these questions and deal with them continuously as we go.

By fostering a questioning culture that runs in parallel with the making of a product (and, in this case, developing an artifact that represents our intentions for it), we can speed up development by identifying problems that might otherwise get buried—and become more costly later on.

So far in this series, I’ve mostly been agnostic about who does the testing work. That’s the subject for the next installment.

Hi Michael,

I am really enjoying this series, and love the example of the diagram above. As part of internal training delivery, I have often taken people through modeling courses, and always include a “what was the intention of the person producing this model, and how might those intentions have influenced the choices they made when producing the model” question in the heuristics I suggest for evaluating a model. It can generate some pretty good conversations.